Mobile operators receive a lot of data and metadata, which can reveal a great deal about the life of an individual subscriber. Once you understand how this data is processed and stored, you can trace the whole chain of information flow, from the call to the charge on the subscriber's account. In the insider threat model the possibilities are even greater, because data protection is simply not one of the tasks of pre-billing systems.

First, keep in mind that subscriber traffic in an operator's network is generated and received by different kinds of equipment. This equipment can produce record files (CDR files, RADIUS logs, ASCII text) and work with different protocols (NetFlow, SNMP, SOAP). This whole cheerful but unfriendly round dance has to be kept under control: the data must be collected, processed and passed on to the billing system in a format standardized in advance.

At the same time, subscriber data that outsiders should not be able to access flows through every part of the system. How secure is the information in such a system, taking every link of the chain into account? Let's figure it out.

Why do telecom operators need pre-billing?

It is commonly believed that subscribers want more and more new, modern services, yet the equipment cannot be replaced every time one appears. So implementing new services and the ways of delivering them falls to pre-billing: this is its first task. The second is analyzing traffic, checking that it is correct and completely loaded into subscriber billing, and preparing the data for billing.

With the help of pre-billing, various reconciliations and additional data loads are implemented, for example checking the status of services on the equipment against billing. It happens that a subscriber keeps using services even though he is already blocked in billing. Or he used services, but no records of this arrived from the equipment. There are many such situations, and most of them are resolved with the help of pre-billing.

I once wrote a term paper on optimizing a company's business processes and calculating ROI. The problem with calculating ROI was not a lack of initial data: I did not understand what "ruler" to measure it with. The same is often true of pre-billing. You can tune and improve the processing endlessly, but at some point circumstances and data will always line up in such a way that an exception occurs. You can build an ideal system for operating and monitoring the auxiliary billing and pre-billing systems, but you cannot guarantee the uninterrupted operation of equipment and data transmission channels.

Therefore, there is a second, duplicating system that checks the data in billing against the data that went from pre-billing to billing. Its task is to catch whatever left the equipment but for some reason "did not land on the subscriber". This role of a redundant, controlling system for pre-billing is usually played by an FMS (Fraud Management System). Of course, its main purpose is not to control pre-billing at all, but to detect fraudulent schemes and, as a consequence, to monitor data losses and discrepancies between equipment data and billing data.

In fact, pre-billing has a lot of use cases. One example is reconciling the state of a subscriber on the equipment with the state in the CRM. Such a scheme might look like this.

- Pre-billing receives data from the equipment (HSS, VLR, HLR, AUC, EIR) over SOAP.

- The raw data is converted to the required format.

- A request is made to the related CRM systems (databases, program interfaces).

- The data is reconciled.

- Exception records are generated.

- A request is sent to the CRM system to synchronize the data.

As a result, a subscriber who downloads a movie while roaming in South Africa is blocked at a zero balance instead of sinking deep into the red.

Another example is aggregating traffic records before they reach billing:

- Data is received from the equipment.

- The data is aggregated in pre-billing (waiting until all the necessary records have been collected according to some condition).

- The data is sent to the final billing system.

The bottom line: instead of 10 thousand records, we send one with the aggregated value of the consumed Internet traffic counter. That is a single database query and tons of saved resources, including electricity!
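The two flows above, reconciliation and aggregation, can be sketched roughly as follows. This is only an illustration: the function names, the record fields (`msisdn`, `bytes`) and the status values are assumptions made for the example, not part of any real pre-billing product.

```python
from collections import defaultdict


def reconcile(equipment_state, crm_state):
    """Return exception records for subscribers whose status differs."""
    exceptions = []
    for msisdn, eq_status in sorted(equipment_state.items()):
        crm_status = crm_state.get(msisdn, "unknown")
        if eq_status != crm_status:
            exceptions.append(
                {"msisdn": msisdn, "equipment": eq_status, "crm": crm_status}
            )
    return exceptions


def aggregate_usage(records):
    """Collapse many usage records into one total per subscriber."""
    totals = defaultdict(int)
    for rec in records:
        totals[rec["msisdn"]] += rec["bytes"]
    return dict(totals)


# Hypothetical snapshots of subscriber status on the equipment and in the CRM.
equipment = {"79990000001": "active", "79990000002": "active"}
crm = {"79990000001": "active", "79990000002": "blocked"}
mismatches = reconcile(equipment, crm)

# Ten thousand small traffic records become a single counter value.
usage = [{"msisdn": "79990000001", "bytes": 1500} for _ in range(10_000)]
totals = aggregate_usage(usage)

print(mismatches)
print(totals)
```

Each entry in `mismatches` corresponds to an exception record that would then drive a synchronization request, and `totals` holds the one record that goes to billing instead of ten thousand.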

How to pacify the zoo?

To make it clearer how this works and where problems can arise, let's take the Hewlett-Packard Internet Usage Manager pre-billing system (HP IUM, or eIUM in its updated version) and use it as an example of how such software works.

Imagine a large meat grinder into which meat, vegetables and loaves of bread are thrown, whatever comes to hand. At the input there is a variety of products, but at the output they all take the same shape. We can change the grate and get a different shape at the output, but the principle and the way our products are processed stay the same: auger, knife, grate. This is the classic pre-billing scheme: data collection, processing and output. In IUM pre-billing, the links of this chain are called encapsulator, aggregator and datastore.

Note that the input data must be complete: there is a certain minimum amount of information without which further processing is pointless. If a block or data element is missing, we get an error or a warning that processing is impossible, because the operations cannot be performed without this data.
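The collect, process, output chain can be pictured with a minimal sketch. The class names below borrow the IUM terms for readability only; the methods, the `msisdn;bytes` line format and everything else here are assumptions for illustration and are not the eIUM API.

```python
class Encapsulator:
    """Collects raw input lines and parses them into records."""

    def collect(self, lines):
        for line in lines:
            msisdn, volume = line.strip().split(";")
            yield {"msisdn": msisdn, "bytes": int(volume)}


class Aggregator:
    """Sums traffic per subscriber."""

    def process(self, records):
        totals = {}
        for rec in records:
            totals[rec["msisdn"]] = totals.get(rec["msisdn"], 0) + rec["bytes"]
        return totals


class Datastore:
    """Holds the output rows in one standardized text format."""

    def __init__(self):
        self.rows = []

    def store(self, totals):
        for msisdn, total in sorted(totals.items()):
            self.rows.append(f"{msisdn};{total}")


raw_lines = ["79990000001;100", "79990000002;50", "79990000001;25"]
store = Datastore()
store.store(Aggregator().process(Encapsulator().collect(raw_lines)))
print(store.rows)
```

Swapping the `Datastore` for one that writes a different format corresponds to changing the grate: the rest of the chain stays as it is.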
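A completeness check of this kind might look like the sketch below. The set of required fields and the exception name are assumptions chosen for the example; a real collector defines its own minimum.

```python
# Assumed minimum set of fields; a real collector would define its own.
REQUIRED_FIELDS = ("msisdn", "start_time", "bytes")


class IncompleteRecordError(ValueError):
    """Raised when a record lacks data needed for further processing."""


def check_complete(record):
    """Return the record unchanged, or raise if a required field is absent or empty."""
    missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if missing:
        raise IncompleteRecordError("missing fields: " + ", ".join(missing))
    return record


good = {"msisdn": "79990000001", "start_time": "2024-01-01T10:00:00", "bytes": 0}
bad = {"msisdn": "79990000001", "bytes": 2048}

check_complete(good)  # passes: a zero byte count is still a value
try:
    check_complete(bad)
except IncompleteRecordError as err:
    error_text = str(err)
    print(error_text)
```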

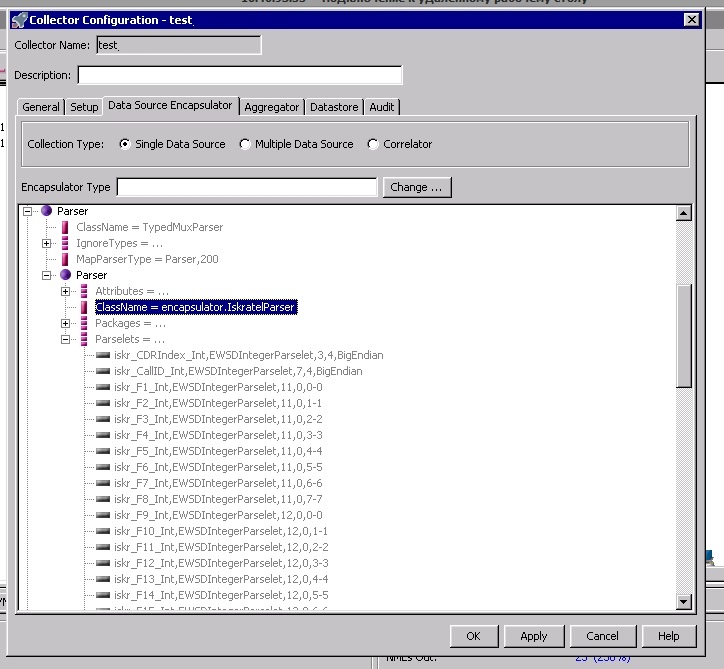

Therefore, it is very important that the equipment generates record files whose set and types of data are strictly defined and fixed by the manufacturer. Each type of equipment has its own processor (collector) that works only with its own input data format. For example, you cannot simply upload a file from Cisco PGW-SGW equipment containing mobile subscribers' Internet traffic to the collector that processes the stream from Iskratel Si3000 fixed-line equipment.

If we do, then at best we will get an exception during processing, and at worst all processing of that particular stream will stop, because the collector handler will fail with an error and wait until we deal with the file that is "broken" from its point of view. This shows that pre-billing systems, as a rule, react critically to data that a specific collector handler was not configured to process.

Initially, the stream of parsed (RAW) data is formed at the encapsulator level, where it can already be transformed and filtered. This is done when a change has to be made before the aggregation schemes, so that it applies to the entire data stream whichever aggregation schemes it later passes through.

Files (.cdr, .log and others) with records of subscriber activity arrive both from local sources and from remote ones (FTP, SFTP); other protocols are possible as well. The files are parsed by a parser that uses different Java classes.

Since a pre-billing system in normal operation is not designed to keep a history of processed files (and there may be hundreds of thousands of them per day), the file is deleted at the source after processing. For various reasons the file cannot always be deleted correctly. As a result, records from a file are sometimes processed repeatedly or with a long delay (when the file was deleted). To prevent such duplicates there are protection mechanisms: checking for duplicate files or records, checking timestamps in records, and so on.

One of the most vulnerable spots here is sensitivity to data volume. The more data we keep (in memory, in databases), the slower we process new data and the more resources we consume, and in the end we still hit a limit after which we are forced to delete old data. So auxiliary databases (MySQL, TimesTen, Oracle, and so on) are usually used to store this metadata. Accordingly, we get yet another system that affects the operation of pre-billing, with the security issues that follow from it.
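The duplicate protections mentioned above (duplicate files, duplicate records, time checks) could be sketched as follows. The class, its in-memory sets and the record fields are assumptions for illustration only; in practice this metadata would have to be stored somewhere and purged as it ages.

```python
import hashlib
from datetime import datetime, timedelta


class DuplicateGuard:
    """Remembers what has been seen and rejects repeats and stale records."""

    def __init__(self, max_age):
        self.max_age = max_age
        self.seen_files = set()
        self.seen_records = set()

    def is_new_file(self, content):
        """A file counts as a duplicate if its content hash was seen before."""
        digest = hashlib.sha256(content).hexdigest()
        if digest in self.seen_files:
            return False
        self.seen_files.add(digest)
        return True

    def accept_record(self, record, now):
        """Reject records that are older than max_age or already processed."""
        if now - record["start_time"] > self.max_age:
            return False
        key = (record["msisdn"], record["start_time"], record["bytes"])
        if key in self.seen_records:
            return False
        self.seen_records.add(key)
        return True


guard = DuplicateGuard(max_age=timedelta(hours=24))
now = datetime(2024, 1, 2, 12, 0, 0)
fresh = {"msisdn": "79990000001", "start_time": datetime(2024, 1, 2, 11, 0, 0), "bytes": 100}
stale = {"msisdn": "79990000001", "start_time": datetime(2023, 12, 30, 11, 0, 0), "bytes": 100}

first_pass = guard.accept_record(fresh, now)    # new record, accepted
second_pass = guard.accept_record(fresh, now)   # same record again, rejected
stale_pass = guard.accept_record(stale, now)    # older than 24 hours, rejected
```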

What's in the black box?

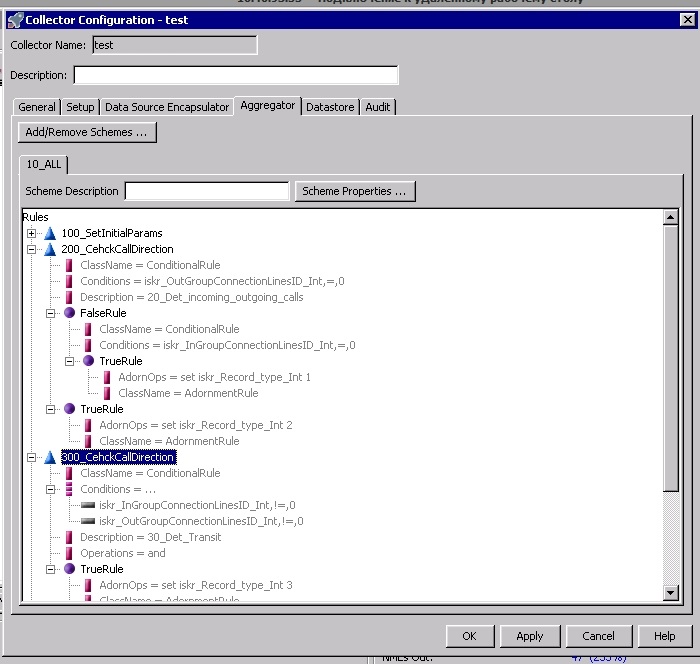

Once upon a time, at the dawn of such systems, they were written in languages that work efficiently with regular expressions, Perl for example. In fact, almost all of pre-billing, apart from the work with external systems, consists of rules for parsing and transforming strings. Naturally, nothing suits this better than regular expressions. The ever-growing volume of data and the growing importance of time to market for new services made such systems unusable, since testing and making changes took a long time and scalability was low.

Modern pre-billing is a set of modules, usually written in Java, that can be managed in a graphical interface using standard copy, paste, move and drag-and-drop operations. Working in this interface is simple and straightforward.

These systems mostly run on Linux or Unix, less often on Windows.

The main problems are usually associated with testing or error detection, because the data passes through many chains of rules and is enriched with data from other systems. It is not always convenient or easy to see what happens to it at each stage. So you have to hunt for the cause by tracing changes to the relevant variables in the logs.

The weakness of this system is its complexity and the human factor. Any exception leads to data being lost or formed incorrectly.

The data is processed sequentially. If an error or exception at the input prevents data from being received and processed correctly, either the entire input stream stalls or the portion of incorrect data is discarded. The parsed RAW stream goes to the next stage, aggregation. There can be several aggregation schemes, and they are isolated from each other. It is like a single stream of water entering a shower head and being split by the grille into separate jets, some thick, others very thin.

After aggregation, the data is ready for delivery to the consumer. Delivery can go directly to databases, or by writing to a file and sending it on, or simply by writing to the pre-billing storage, where the data stays until the storage is emptied.

After processing at the first level, the data can be passed to a second level and beyond. Such a ladder is needed to increase processing speed and distribute load. At the second stage, another stream can be mixed into our data stream, or the stream can be split, copied, merged, and so on. The final stage is always the delivery of data to the systems that consume it.
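To give a feel for the regex-driven style of parsing described above, here is a small sketch. The `msisdn;timestamp;bytes` line layout is invented for the example and does not correspond to any vendor's CDR format.

```python
import re

# Hypothetical text record: 11-digit number; ISO-like timestamp; byte count.
LINE_RE = re.compile(
    r"^(?P<msisdn>\d{11});"
    r"(?P<start>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2});"
    r"(?P<bytes>\d+)$"
)


def parse_line(line):
    """Parse one text record into a dict, or return None if it does not match."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    record = match.groupdict()
    record["bytes"] = int(record["bytes"])
    return record


parsed = parse_line("79990000001;2024-01-01T10:00:00;2048\n")
rejected = parse_line("not a record")
print(parsed, rejected)
```

Every new record layout means another expression to write and test, which hints at why this approach became slow to change as formats multiplied.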
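One way to make such a rule chain easier to follow is to log what each rule added or modified. The sketch below uses Python's standard `logging` module; the rules, field names and logger name are hypothetical.

```python
import logging

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(message)s")
log = logging.getLogger("prebilling.trace")  # hypothetical logger name


def apply_rules(record, rules):
    """Run a record through a chain of rules, logging added or modified fields."""
    current = dict(record)
    for rule in rules:
        before = dict(current)
        current = rule(current)
        changed = {
            key: (before.get(key), value)
            for key, value in current.items()
            if before.get(key) != value
        }
        log.debug("%s changed %s", rule.__name__, changed)
    return current


def to_kilobytes(rec):
    """Example transformation rule: add a derived field."""
    return {**rec, "kbytes": rec["bytes"] // 1024}


def add_tariff(rec):
    """Example enrichment rule: in real life this would query another system."""
    return {**rec, "tariff": "roaming" if rec["roaming"] else "home"}


result = apply_rules(
    {"msisdn": "79990000001", "bytes": 4096, "roaming": True},
    [to_kilobytes, add_tariff],
)
```

Each log line shows the old and new value per field, so a wrong value can be traced back to the rule that produced it.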
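The idea of several isolated aggregation schemes fed by one parsed stream can be sketched like this. The two schemes and the `apn` field are assumptions for illustration; isolation is imitated by handing each scheme its own copy of the records.

```python
from collections import Counter


def bytes_per_subscriber(records):
    """Scheme 1: total traffic per subscriber."""
    totals = Counter()
    for rec in records:
        totals[rec["msisdn"]] += rec["bytes"]
    return dict(totals)


def sessions_per_apn(records):
    """Scheme 2: number of sessions per access point name."""
    return dict(Counter(rec["apn"] for rec in records))


def run_schemes(records, schemes):
    """Feed the same stream to every scheme; each one works on its own copy."""
    records = list(records)
    return {
        name: scheme([dict(rec) for rec in records])
        for name, scheme in schemes.items()
    }


stream = [
    {"msisdn": "79990000001", "apn": "internet", "bytes": 100},
    {"msisdn": "79990000001", "apn": "mms", "bytes": 20},
    {"msisdn": "79990000002", "apn": "internet", "bytes": 50},
]
outputs = run_schemes(
    stream,
    {"traffic": bytes_per_subscriber, "sessions": sessions_per_apn},
)
print(outputs)
```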

Pre-billing is not responsible for the following (and rightly so!):

- monitoring whether input and output data have been received and delivered: this should be done by separate systems;

- encrypting data at any stage.
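As an illustration of what such a separate monitoring system might check, the sketch below compares a control total per stream at the input with the total actually delivered. The choice of summed bytes as the control total, the stream names and the function name are all assumptions; a control total is used rather than a record count because aggregation changes the number of records by design.

```python
def find_delivery_gaps(received_totals, delivered_totals):
    """Return, per stream, how much of the control total never reached the consumer."""
    gaps = {}
    for stream in sorted(set(received_totals) | set(delivered_totals)):
        received = received_totals.get(stream, 0)
        delivered = delivered_totals.get(stream, 0)
        if received != delivered:
            gaps[stream] = received - delivered
    return gaps


# Hypothetical control totals collected outside pre-billing itself.
received = {"pgw": 15_000_000, "voice": 4_200}
delivered = {"pgw": 15_000_000, "voice": 3_900}
gaps = find_delivery_gaps(received, delivered)
print(gaps)
```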

Privacy

Here we have complete mayhem! To begin with, data protection is not a pre-billing task at all. Access control for pre-billing is necessary and possible at different levels (management interface, operating system), but if we forced it to encrypt data, complexity and processing time would grow so much that it would become completely unacceptable and unsuitable for billing. Often the time between a service being used and that fact appearing in billing must not exceed a few minutes.

As a rule, the metadata needed to process a particular piece of data is stored in a database (MySQL, Oracle, Solid). The input and output data almost always sit in the directory of a particular collector stream. Therefore, anyone with access to that directory (for example, the root user) can read them.

The pre-billing configuration itself, with its set of rules and the access details for databases, FTP and so on, is stored encrypted in a file database. If the username and password for pre-billing are unknown, exporting the configuration is not so easy.

Any change made to the processing logic (rules) is recorded in the pre-billing configuration log file (who changed what, and when).

Even when data is passed directly along the chains of collector handlers inside pre-billing (bypassing export to a file), it is still temporarily stored as a file in the handler directory, and you can get at it if you want to.

The data processed in pre-billing is depersonalized: it contains no full names, addresses or passport data. So even with access to this information, you will not learn the subscriber's personal data from it. But you can pick up some information tied to a specific number, IP address or other identifier.

With access to the pre-billing configuration, you get the access details for all the related systems it works with. As a rule, access to those systems is allowed only from the server on which pre-billing runs, but this is not always the case.

If you get to the directories where the handlers' file data is stored, you can modify the files that are waiting to be sent to consumers. Often these are the most ordinary text documents. Then the picture is as follows: pre-billing received and processed the data, but it never reached the final system and disappeared into a "black hole".

And it will be hard to find the cause of these losses, since only part of the data is lost. In any case, it will be impossible to reproduce the loss in order to investigate its causes. You can look at the input and output data, but you will not be able to tell where the rest went. Meanwhile, the attacker only has to cover his tracks in the operating system.