Man

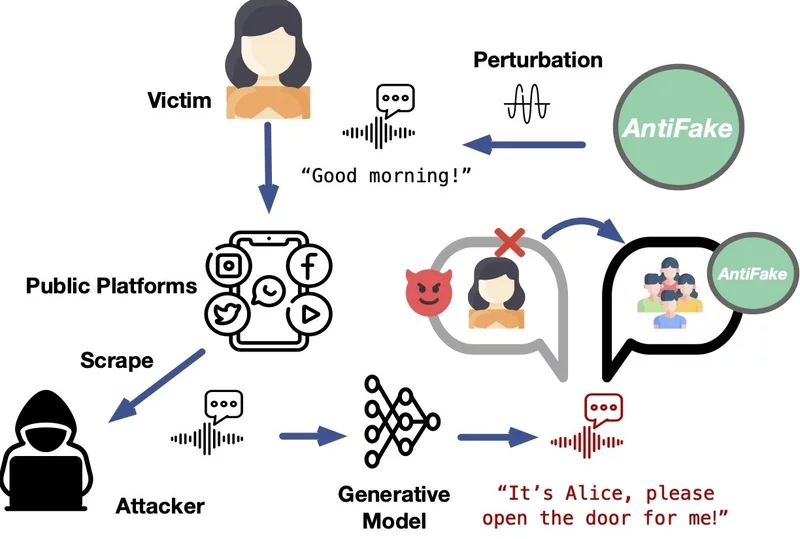

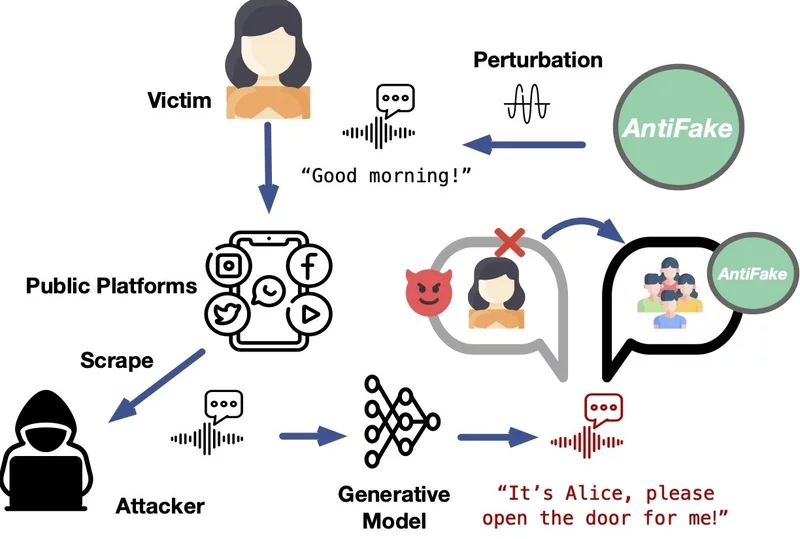

AI voice-cloning technology is a dangerous tool: it can realistically reproduce a human voice from even a short sample. The AntiFake algorithm, proposed by a US researcher, is designed to prevent such convincing forgeries from being created.

Deepfakes are dangerous because they can attribute to a well-known artist or politician a statement that person never made. There have also been cases in which an attacker called a victim and, speaking in a friend's voice, asked for an urgent money transfer because of some emergency. Ning Zhang, an associate professor of computer science and engineering at Washington University in St. Louis, has proposed a technology that makes voice deepfakes significantly harder to create.

AntiFake works by making it much harder for an AI system to extract key voice characteristics from a recording of a real person's speech. “The tool uses an adversarial AI technique that was originally used by cybercriminals, but now we have turned it against them. We slightly distort the recorded audio signal, perturbing it just enough that it still sounds the same to a person but completely different to an AI,” Zhang said of the project.
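To make the idea of a small adversarial distortion more concrete, here is a minimal toy sketch in Python. It is not AntiFake's actual implementation: the "speaker encoder" is a hypothetical random linear projection (`toy_embedding`), and the names `protect`, `eps`, `step`, and `iters` are assumptions made purely for illustration. It uses projected gradient descent to nudge the signal's embedding toward a different "speaker" while keeping every audio sample within a small bound of the original.

```python
import numpy as np

def toy_embedding(signal, weights):
    """Hypothetical stand-in for a speaker encoder: a fixed linear projection."""
    return weights @ signal

def protect(signal, weights, target_embedding, eps=0.01, step=0.002, iters=50):
    """Projected gradient descent on the squared embedding distance to a target.

    Each sample of the result stays within +/- eps of the original signal.
    """
    delta = np.zeros_like(signal)
    for _ in range(iters):
        residual = toy_embedding(signal + delta, weights) - target_embedding
        grad = 2.0 * weights.T @ residual      # gradient of ||W(x + delta) - target||^2
        delta -= step * np.sign(grad)          # signed step toward the target embedding
        delta = np.clip(delta, -eps, eps)      # project back into the allowed range
    return signal + delta

rng = np.random.default_rng(0)
n = 1600                                        # 0.1 s of audio at 16 kHz
t = np.arange(n) / 16000.0
voice = 0.5 * np.sin(2 * np.pi * 220.0 * t)     # toy "real speaker"
other_voice = 0.5 * np.sin(2 * np.pi * 330.0 * t)  # toy "different speaker"

weights = rng.standard_normal((16, n)) / np.sqrt(n)  # assumed encoder weights
target = toy_embedding(other_voice, weights)

protected = protect(voice, weights, target)

before = np.linalg.norm(toy_embedding(voice, weights) - target)
after = np.linalg.norm(toy_embedding(protected, weights) - target)
max_change = np.max(np.abs(protected - voice))

print(f"largest per-sample change: {max_change:.4f}")
print(f"distance to the other speaker's embedding: {before:.3f} -> {after:.3f}")
```

In this toy setup the per-sample change is capped at `eps`, yet the embedding moves measurably toward the other speaker. A real system would presumably target neural speaker encoders and use a perceptual constraint rather than a simple amplitude bound.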

Image source: wustl.edu

As a result, when someone tries to create a deepfake from a recording modified in this way, the AI-generated voice will not sound like the person in the sample. In tests, AntiFake was 95% effective at preventing the synthesis of convincing deepfakes. “I do not know what will happen with voice AI technologies in the future, since new tools and features are constantly being developed, but I still believe that our strategy of using the enemy's technology against him will remain effective,” Zhang concluded.
