A data leak on Hugging Face exposed the source code of some of the largest AI projects.

Lasso Security has identified security flaws affecting Hugging Face, a platform for sharing artificial intelligence and machine learning models and datasets. The researchers discovered more than 1,500 publicly exposed API tokens, some of them belonging to Meta, Google, and Microsoft. Together, these tokens provided access to the accounts of 723 organizations.

The most dangerous were the 655 tokens with write permissions, which could allow attackers to modify files in repositories. Of the affected organizations, 77 were particularly at risk, including Meta, EleutherAI, and BigScience Workshop, which develop the LLaMA models, the Pythia model suite, and the BLOOM model, respectively.

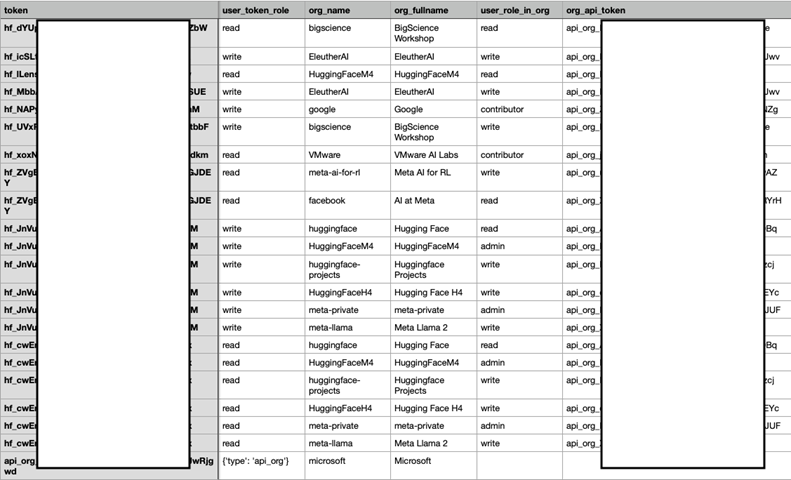

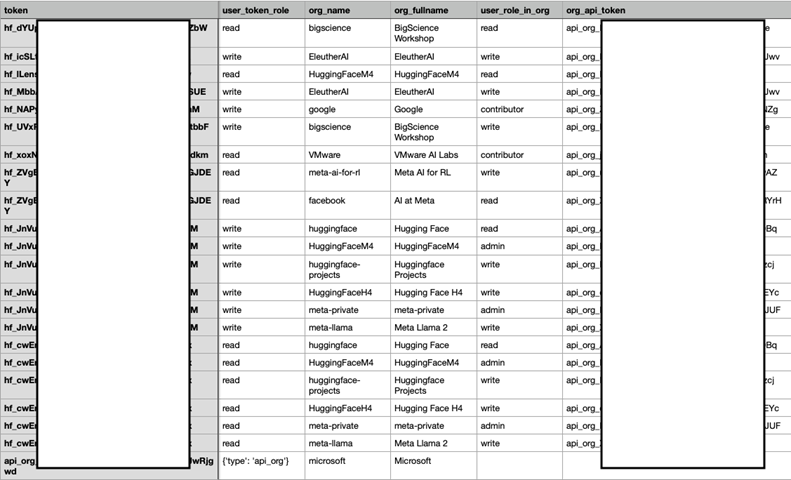

Exposed API tokens of the most "valuable" organizations

Once notified, all affected companies promptly fixed the issues, although Meta and BigScience Workshop did not comment officially. Hugging Face is a key platform for the AI community, hosting more than 250,000 datasets and 500,000 AI models, so the consequences of such leaks could have been devastating. The researchers emphasize that attackers could have used the tokens to steal data, poison training data, or steal models outright, affecting more than a million users.

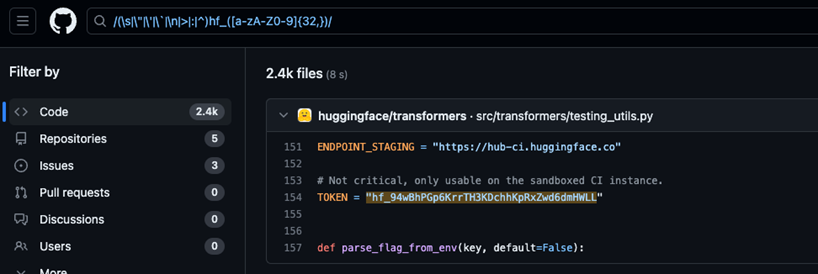

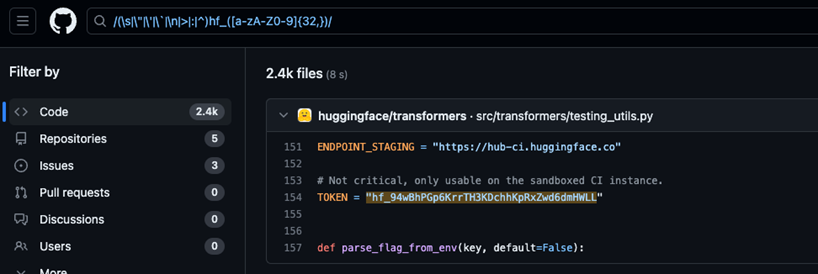

Example of a token exposed in source code
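Tokens written directly into source code, as in the example above, can often be caught with a simple pattern search before the code is published. The sketch below is a minimal, hypothetical pre-publication scanner; it assumes that Hugging Face user access tokens begin with `hf_` followed by a long alphanumeric string. The 30-character minimum is a heuristic, not an official format specification, and the names `find_tokens` and `scan_tree` are illustrative.

```python
import re
from pathlib import Path

# Assumption: Hugging Face user tokens look like "hf_" + a long alphanumeric
# string. The {30,} length bound is a heuristic, not an official spec.
HF_TOKEN_RE = re.compile(r"\bhf_[A-Za-z0-9]{30,}\b")

# File types worth checking before publishing (illustrative, not exhaustive).
SCAN_SUFFIXES = (".py", ".ipynb", ".json", ".yaml", ".yml", ".txt")
SCAN_NAMES = (".env",)  # dotfiles have an empty Path.suffix, so match by name


def find_tokens(text: str) -> list[tuple[int, str]]:
    """Return (line_number, redacted_token) for each token-like string."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in HF_TOKEN_RE.finditer(line):
            token = match.group(0)
            # Redact so the scanner's own output does not leak the secret.
            hits.append((lineno, token[:6] + "..."))
    return hits


def scan_tree(root: str) -> dict[str, list[tuple[int, str]]]:
    """Scan every matching file under root and report token-like strings."""
    results = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        if path.suffix in SCAN_SUFFIXES or path.name in SCAN_NAMES:
            hits = find_tokens(path.read_text(errors="ignore"))
            if hits:
                results[str(path)] = hits
    return results
```

A check like this can run in a pre-commit hook or CI job and fail the build when `scan_tree(".")` returns anything; dedicated secret scanners cover many more token formats, so this is only a starting point.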

The Lasso Security team that conducted the study gained write access to 14 different datasets, each with tens of thousands of downloads per month. Such data poisoning attacks are among the most serious threats to AI and machine learning, and training data poisoning is listed in the OWASP Top 10 for Large Language Model Applications.

The researchers also found vulnerabilities that would allow more than 10,000 private models to be stolen, underscoring how serious the problem is for AI security as a whole. They gained full access to the Meta Llama 2, BigScience Workshop, and EleutherAI repositories, which intruders could have exploited in the same way.

The investigation found that the tokens leaked because they were stored in variables that were left in the code when it was published to public repositories. The affected organizations were notified, and Meta, Google, Microsoft, and VMware quickly responded by revoking the tokens and removing the code from their repositories.
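The leak described above came from tokens kept in variables inside published code. A common alternative is to keep the token out of the source entirely and read it at runtime from an environment variable, so that publishing the code does not publish the secret. The sketch below assumes the `HF_TOKEN` variable, which the `huggingface_hub` library recognizes; the helper `load_hf_token` and its error message are hypothetical.

```python
import os

# Anti-pattern reportedly behind the leak: a literal token in source code,
# which is copied into every public clone of the repository:
#   HF_TOKEN = "hf_..."


def load_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Hypothetical helper: read the token from the environment at runtime.

    HF_TOKEN is the variable huggingface_hub reads; the helper itself is
    illustrative and not part of any library.
    """
    token = os.environ.get(env_var)
    if not token:
        raise RuntimeError(
            f"{env_var} is not set; provide it via your shell or CI secrets "
            "instead of writing the token into the code"
        )
    return token
```

The token is then set outside the repository, for example with `export HF_TOKEN=...` in a shell or as a CI secret, and the code never needs to print or store it. If a token has already been committed, removing it from the code is not enough; it should be revoked, as the affected companies did.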
