Tomcat

A rough plaster copy of the article's author's head

Liveness detection in the frame: why the task matters

User identification and authentication by facial biometrics cannot be solved reliably without determining the user's live presence in the frame (liveness detection). The system must make sure that the biometric template is built from the person himself and not, for example, from a printed image held up to the camera. A variety of attacks are possible, and they are discussed below. All of them aim to replace the living user of the system with his image, thereby “deceiving” the biometric algorithm so that the attacker can claim it was the user who approved the operation being performed.

Methods for detecting live presence

We can divide the main approaches to the problem into several categories:

- Explicit construction of a three-dimensional face map.

- Using several frames or a video fragment to build an image of a three-dimensional face map (pseudo-3D).

- Using a video fragment to track movement in the frame (facial expressions, head turns).

- Analysis of a static frame to identify artifacts: moire, glare, printing defects.

Classification of attacks on live presence according to FIDO

The FIDO (Fast IDentity Online) Alliance classifies attacks by how difficult they are to reproduce for different biometric modalities; we will consider only the attacks that relate to facial biometrics.

Class A includes attacks that use still images: photographs printed on paper and facial images shown on the screens of mobile devices. Some algorithms check live presence from a single static frame; they look for “extraneous” effects in the submitted image: moire (when the image is shown on a device screen), glare, image edges that contrast with the background, printing artifacts, and so on.

Such attacks can be easily reproduced using ordinary equipment: printer, paper, smartphone. To carry out an attack, you will need a minimum amount of knowledge about the person the attacker is trying to impersonate: a few photographs of satisfactory quality, which can be found, for example, on social networks, are enough.

Class B includes more “live” attacks, namely those that simulate movement, for example, videos shown from mobile devices. This also includes attacks using masks with cut out fragments: eyes, nose, mouth. By carrying out such attacks, the attacker tries to deceive the algorithms that evaluate movement in the frame: facial expressions in the area around the eyes, head turns, changes in shadows, etc.

On your own, “at home,” you can make a paper mask that partially follows the shape of your face, cutting out the nose and eye areas from it. You can also bend it, giving it a clearly three-dimensional shape. If the print quality is quite high, then such a mask may well “deceive” some algorithms for checking live presence, especially if the attacker reduces its total area as much as possible and makes the transition from the edges of the mask to his face less sharp.

An additional complication for the attacker is that bending the mask distorts the proportions of the face printed on it, so it can be very difficult, or even impossible, to pass both authentication and the liveness check. The image can be pre-distorted to match the curvature of the mask, but this is not easy, because it requires information about the “depth” of the face, i.e. an additional coordinate.

To carry out Class B attacks, an attacker needs high-quality photographs of the face of the user he wants to impersonate and video recordings in which the face occupies a significant part of the frame; such material is more difficult to obtain.

Class C includes attacks such as: 3D printed copies of faces, complex theatrical masks, silicone masks.

Materials for attacks in this class cannot be produced “at home”; special equipment is required: 3D printers or a large number of components for a theater mask. In addition, to make a high-precision three-dimensional copy of a face, complete information about the shape and size of the original face is required.

In other words, to make a copy on a printing device, the person whose face is being copied has to go through a rather long and detailed scanning procedure. A similar copy can be modeled on a computer from a sufficiently large number of high-precision photographs of the face from different angles and from video fragments. Even so, the final stage of preparing the model for printing (or another production method) has to be performed by a sculptor, because both automatic generation of a three-dimensional face and scanning produce artifacts that need to be eliminated manually. Today this remains a procedure that costs a great deal of time and money.

Methods for constructing volumetric maps of objects

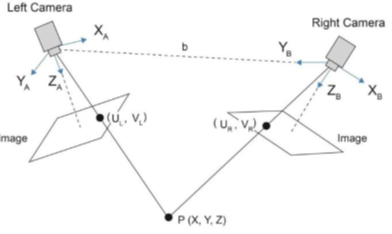

One way to ensure that the user is physically present in front of the device is to check the subject for obvious three-dimensionality. Let's look at ways to build 3D maps.

Stereo vision

Stereo machine vision uses two cameras to obtain a pair of images that differ in perspective. The images are compared pixel by pixel (point by point) to find the differences between them and to determine the distance (depth) from the cameras to individual image fragments. The human brain follows a similar principle when perceiving a scene. Such a system needs significant computing resources and has to be calibrated for each camera arrangement. The cost of the equipment depends on the required accuracy; for example, synchronized control of the camera shutters increases it. The working distance is limited for physical reasons: longer distances require more accurate optics. In addition, the method does not cope well with changing lighting conditions, since it depends strongly on the reflective properties of the object.

An example of a device using this principle is the Intel RealSense D415, which is equipped with two cameras operating in the infrared range. Each camera “sees” the image of point P in its own image plane and assigns it coordinates (u_L, v_L) and (u_R, v_R), respectively. Knowing these coordinates and the distance b between the cameras, you can calculate the distance to point P, i.e. to a point on the surface of the object, which is what is needed to construct a depth map.
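The triangulation step can be illustrated with a minimal sketch. It assumes an idealized, rectified stereo pair (both cameras share the same focal length and image rows, so the vertical coordinates of P coincide) and introduces a focal length in pixels, `focal_px`, which the text does not mention. The numbers in the usage note are illustrative and are not specifications of any particular camera.

```python
def depth_from_disparity(u_left: float, u_right: float,
                         focal_px: float, baseline_m: float) -> float:
    """Distance (in meters) to a point seen by a rectified stereo pair.

    u_left, u_right: horizontal pixel coordinates of the same point P
                     in the left and right images.
    focal_px:        focal length in pixels (assumed, not from the article).
    baseline_m:      distance b between the two cameras, in meters.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        # A point in front of both cameras always has positive disparity.
        raise ValueError("disparity must be positive for a valid match")
    # Similar triangles: Z / b = f / disparity
    return focal_px * baseline_m / disparity


# Illustrative values only: f = 640 px, b = 55 mm, disparity = 70.4 px
print(depth_from_disparity(390.4, 320.0, focal_px=640.0, baseline_m=0.055))
```

Repeating this calculation for every matched pixel gives the depth map. The hard part in practice is the matching itself, which this sketch takes for granted.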

Structured lighting

This method is based on illuminating the object in a predetermined way; a linear grating with variable brightness is typically used. The depth of the object is estimated from how the original illumination pattern is stretched and compressed in the reflected light. Because the method places no fundamental constraint on the exposure time of a single frame, frames can be captured quickly enough to avoid motion blur. On the other hand, special lighting requires a special camera with a stable mechanical connection between the camera and the illuminator; any misalignment means the system has to be reconfigured. In addition, the structure of the reflected light depends strongly on external light sources, so the method works reliably only indoors.

The most convenient form of structured lighting uses illumination in the infrared range, which is invisible to the human eye. The Apple iPhone, for example, works on this principle.

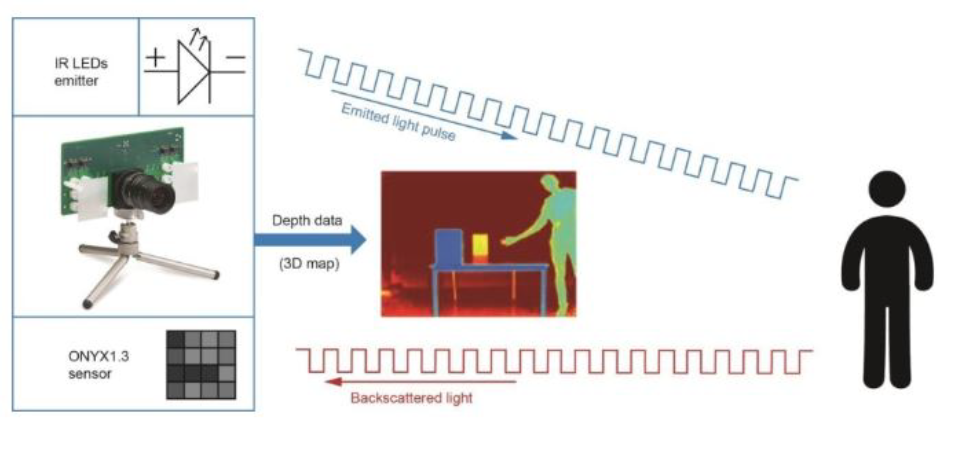

Estimation of light propagation time

This method measures the time it takes light to travel to the object and back to the camera. Typically, the camera shutter and the emitter are synchronized. Light is emitted in phase with the shutter, and the distance to the object is calculated from the delay (phase difference) with which the reflected light is detected. In this way a pixel-by-pixel (point-by-point) depth map of the image is built. Because the emission and viewing angles are small, the system needs to be adjusted only once. It also works in daylight, but requires very precise synchronization between the shutter and the active light source.
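The relation between the measured phase difference and the distance can be sketched as follows. This assumes a continuous-wave sensor whose light is modulated at a single frequency `mod_freq_hz`; that parameter and the 20 MHz value in the usage note are assumptions for illustration and do not come from the article.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance in meters from the phase shift of the reflected light.

    The light travels to the object and back, so the round trip is 2*d,
    and a full 2*pi phase shift corresponds to one modulation period.
    """
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps around."""
    return SPEED_OF_LIGHT / (2.0 * mod_freq_hz)


# Illustrative modulation frequency of 20 MHz (assumed)
print(tof_distance(math.pi / 2, 20e6))
print(unambiguous_range(20e6))
```

The second function shows one design trade-off: a higher modulation frequency improves depth resolution but shortens the range within which the phase maps to a single distance.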

Pseudo-3D: evaluation of video fragments

As we mentioned earlier, a 3D map of an object can be restored from a video fragment or from a sequence of shots of the user's face taken from different angles. This method of verification is probably familiar to the reader, but it requires the user to perform additional actions, i.e. it is a so-called “cooperative check”.

Typically, the test scenario looks like this: the user is asked to turn his head (by a small angle) or to move the smartphone camera away from or closer to his face. In this way, it is possible to make sure that there is a clearly three-dimensional object in front of the camera and not, for example, a printed image or the screen of a smartphone showing a photograph.
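Once a set of 3D points on the face has been recovered, the simplest possible volume test is to check how well the points fit a plane. The sketch below is only an illustration: the input format (a list of `(x, y, z)` tuples in meters) and the tolerance `tol_m` are assumptions, and a test like this can only reject flat objects such as an unbent print or a screen, not a bent paper mask.

```python
import math


def plane_fit_rms(points):
    """RMS distance in z between the points and their least-squares plane
    z = a*x + b*y + c. A value near zero means the surface is flat."""
    pts = list(points)
    n = len(pts)
    if n < 3:
        raise ValueError("need at least three points")
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    mz = sum(p[2] for p in pts) / n
    # Centered second moments; centering removes the constant term c.
    sxx = sum((x - mx) ** 2 for x, _, _ in pts)
    syy = sum((y - my) ** 2 for _, y, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y, _ in pts)
    sxz = sum((x - mx) * (z - mz) for x, _, z in pts)
    syz = sum((y - my) * (z - mz) for _, y, z in pts)
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-18:
        raise ValueError("points are collinear in the x-y plane")
    a = (sxz * syy - sxy * syz) / det
    b = (sxx * syz - sxy * sxz) / det
    sq = sum(((z - mz) - a * (x - mx) - b * (y - my)) ** 2 for x, y, z in pts)
    return math.sqrt(sq / n)


def looks_flat(points, tol_m=0.003):
    """True if the surface deviates from a plane by less than tol_m (assumed)."""
    return plane_fit_rms(points) < tol_m


grid = [(-0.08 + 0.04 * i, -0.08 + 0.04 * j) for i in range(5) for j in range(5)]
# A tilted flat sheet, and a synthetic 3 cm bump standing in for a face.
sheet = [(x, y, 0.5 + 0.1 * x - 0.05 * y) for x, y in grid]
bump = [(x, y, 0.5 - 0.03 * math.exp(-(x * x + y * y) / (2 * 0.04 ** 2)))
        for x, y in grid]
print(looks_flat(sheet), looks_flat(bump))
```

A production system would use far more points and a richer shape model; the point here is only that a flat attack medium leaves almost no residual after a plane is subtracted.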

2D Image Evaluation

The simplest and at the same time the least reliable way to check live presence is to analyze ordinary (two-dimensional) images in the visible range.

Typically, a neural network is trained to detect certain artifacts and to estimate the probability that the presented image is a photograph of a smartphone screen, a printed image, and so on.

Moire

Moire patterns form when shooting screens, which have a periodic structure (pixels). The reader has probably observed similar effects more than once.

Sharp contrast between image and background

The printed image will most likely be placed either on a rectangular piece of paper or will be cropped. One way or another, a sharp transition of colors will be noticeable.

Glare from lighting on paper

In the frame above you can see that the paper is overexposed in the hair area. There would be no such spot on a real face.

Using IR

A more reliable way to determine live presence from a static image is to use infrared cameras, because a significant share of possible attacks is cut off immediately: ordinary printed images and the screens of smartphones (and other devices) are simply not visible in the IR spectrum.

The author of the article is wearing a printed mask, depicting an attack. It is clearly visible that in the IR range the mask is just a sheet of paper without a pattern or color, and the 3D map shows that a regular mask is just a slightly curved sheet of paper.

Checking a specific movement in a frame

The scenario for such a test could be as follows: the user is asked to reproduce a certain movement or gesture in front of the device's camera, for example to smile, wink, or shake his head. An attacker can reproduce each of these gestures individually; a blink, for example, can be simulated by sliding a paper eyelid over an eye in a printed image. The test becomes more reliable if the required gesture varies unpredictably: for example, the user can be asked at random to nod only in a certain direction, or to smile two or three times in a row.

The main disadvantage of this approach is that the user has to perform actions in front of the camera, which, firstly, is not always possible and, secondly, lengthens the interaction scenario.
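A randomized gesture challenge of this kind could look like the following sketch. The gesture names, the challenge length, and the assumption that some separate detector turns the video into a list of observed gestures are all hypothetical; only the idea of an unpredictable sequence comes from the text.

```python
import secrets

# Hypothetical gesture vocabulary; a real system would use whatever
# gestures its video analysis can actually recognize.
GESTURES = ("smile", "blink", "nod_up", "nod_down", "turn_left", "turn_right")


def make_challenge(length: int = 3) -> list:
    """Pick an unpredictable sequence of gestures for one session."""
    if length < 1:
        raise ValueError("length must be at least 1")
    # secrets uses a cryptographically strong random source, so an
    # attacker cannot pre-record a video for the sequence in advance.
    return [secrets.choice(GESTURES) for _ in range(length)]


def verify_response(challenge, observed) -> bool:
    """Accept only if the observed gestures match the challenge in order."""
    return list(observed) == list(challenge)


challenge = make_challenge()
print(challenge)
print(verify_response(challenge, challenge))
```

With six gestures and three steps there are 216 possible sequences, so a pre-recorded response matches a fresh challenge only rarely; a longer sequence raises that cost further at the price of a longer interaction.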

"Real" faces

At the moment, producing “good” masks, i.e. detailed ones that replicate a person's face, is very expensive. The title image of the article shows a plaster copy of a head printed on a 3D printer. Preparing such an object is difficult: you need to go through the scanning procedure, refine the model in an editor, and finally print it in accurate colors.

Existing liveness checks recognize the plaster copy of the author's head as a living person, but the copy does not achieve the required “portrait” resemblance to the original. In other words, such an object looks like a real face, but the face of a different person. The reason is precisely that it is very difficult to model a 3D object as complex as a human head.

Thus, if facial recognition is used as the biometric modality for authenticating or identifying a person, some scenarios, such as purchases for significant amounts or authentication at an MFC (multifunctional public services center), call for an additional factor (PIN, one-time message, etc.).

However, simple but reliable verification methods that block the most common attacks are sufficient for scenarios such as payments for small amounts, identification in loyalty programs, travel on public transport and other similar cases.
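The split between the two groups of scenarios could be expressed as a small policy function. This is a sketch only: the scenario labels, the `SMALL_PAYMENT_LIMIT` value and the enum names are hypothetical and would be set by the operator's own risk rules.

```python
from enum import Enum


class Verification(Enum):
    FACE_AND_LIVENESS = "face + liveness check"
    FACE_LIVENESS_AND_SECOND_FACTOR = "face + liveness check + PIN or one-time code"


# Hypothetical labels and limit, chosen only for illustration.
HIGH_RISK_SCENARIOS = frozenset({"government_service"})
SMALL_PAYMENT_LIMIT = 1000.0  # in the account currency


def required_verification(scenario: str, amount: float = 0.0) -> Verification:
    """Decide whether face biometrics alone is enough for this operation."""
    if scenario in HIGH_RISK_SCENARIOS or amount > SMALL_PAYMENT_LIMIT:
        # High stakes: a convincing mask must not be enough on its own.
        return Verification.FACE_LIVENESS_AND_SECOND_FACTOR
    return Verification.FACE_AND_LIVENESS


print(required_verification("loyalty_program").value)
print(required_verification("payment", amount=25000.0).value)
```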