Man

User data is not a bargaining chip. Apple has spent considerable effort building its reputation by staunchly fending off the FBI and other law enforcement agencies looking to arbitrarily harvest iPhone owners’ data.

In 2016, Apple refused to weaken iOS security so the FBI could unlock the San Bernardino shooter’s iPhone. After seizing Syed Farook’s phone and failing to enter the four-digit PIN code ten times, the FBI locked the phone. The FBI then demanded that Apple create a special OS that would allow brute-force attacks against the security code…

At first, things did not go in Apple’s favor, with the United States District Court for California siding with the security agencies. Apple filed an appeal, red tape ensued, and eventually the trial was terminated at the initiative of the FBI.

In the end, the feds got their way with the help of Cellebrite, a private Israeli company specializing in digital forensics, paying more than $1 million for the case . By the way, nothing was found in the smartphone.

Strangely, four years later, history repeated itself almost exactly. In January 2020, none other than US Attorney General William Barr asked the company to help investigators gain access to the contents of the two iPhones used in the December 2019 shooting at the Pensacola Naval Air Station, Florida. Not surprisingly, Apple refused again.

It is worth emphasizing that in both cases, it was not a one-time transfer of information by Apple. This is all right, the company transfers metadata, backup copies from iCloud upon official and authorized requests from law enforcement agencies. The refusal is met by demands to create and provide a universal master key, a special iOS firmware that allows you to unlock confiscated smartphones.

This is the circumstance that causes the greatest opposition from Apple management and personally CEO Tim Cook, who reasonably believe that there are no and cannot be benign backdoors, and that comprehensive protection of their mobile platform is only the first freshness. A master key in good hands very soon becomes a master key in the hands of dubious ones, and perhaps it will be there from the very first day.

So, now we know that iOS does not have special loopholes created for law enforcement agencies. Does this mean that the iPhone is invulnerable to penetration and data theft?

At the end of September 2019, an information security researcher with the nickname axi0mX published an exploit code for almost all Apple devices with A5-A11 chips on the Github resource. The peculiarity of the vulnerability found is that it is at the hardware level and cannot be fixed by any software updates, since it is hidden in the very mechanism of protecting the secure boot of BootROM, aka SecureROM.

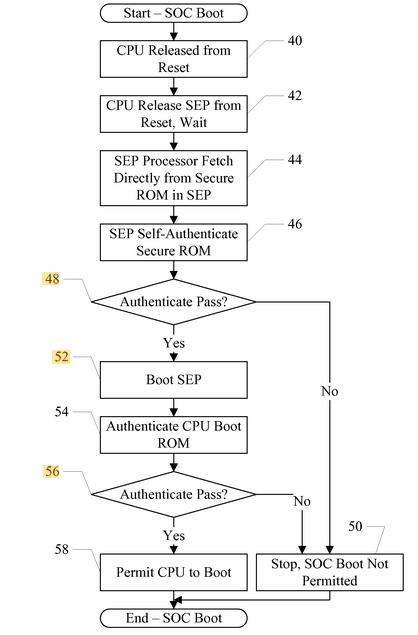

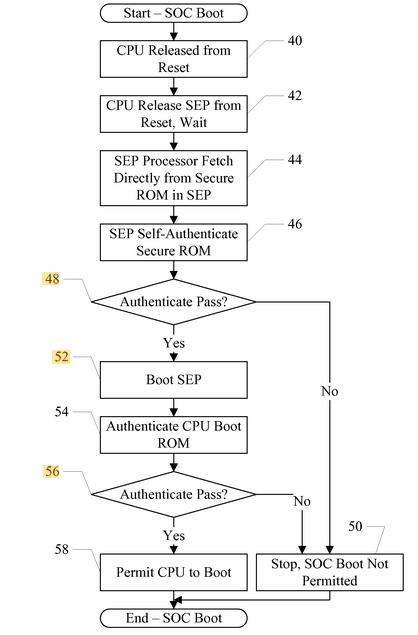

The iOS boot model from Apple's WWDC 2016 presentation.

During a cold boot, SecureROM is the first to be launched from read-only memory, and this is the most trusted code in the Application Processor and therefore it is executed without any checks. This is the reason why iOS patches are powerless here. And it is also extremely important that SecureROM is responsible for switching the device to recovery mode (Device Firmware Update) via the USB interface when a special key combination is pressed.

iOS entering DFU mode.

Use-after-Free vulnerability occurs when you reference memory after it has been freed. This can lead to unpleasant consequences such as program crashes, unpredictable values, or in this case, third-party code execution.

To understand the exploit mechanism, we first need to understand how the system recovery mode works. When the smartphone enters DFU mode during initialization, an I/O buffer is allocated and a USB interface is created to handle DFU requests. When the installation packet 0x21, 1 arrives over the USB interface during the USB Control Transfer stage, the DFU code copies the I/O buffer data to the boot memory area after determining the address and block size.

USB Control Transfer Setup Packet structure.

The USB connection remains active until the firmware image is loaded, after which it exits normally. However, there is an abnormal scenario for exiting DFU mode, which requires sending a DFU abort signal with the code bmRequestType=0x21, bRequest=4. This preserves the global context of the data buffer pointer and the block size, thus creating a classic Use-after-Free vulnerability situation.

Checkm8 essentially exploits the Use-after-Free vulnerability in the DFU process to allow arbitrary code execution. This process consists of several stages, but one of the most important is known as heap feng shui, which shuffles the heap in a special way to facilitate exploitation of the vulnerability.

Running the ./ipwndfu -p command on MacOS.

In practical terms, it all comes down to putting the iPhone into DFU mode and running a simple ./ipwndfu -p command. The result of the Python script is to remove the blocking with unauthorized access to the entire file system of the smartphone. This makes it possible to install software for iOS from third-party sources. So, intruders and law enforcement officers can gain access to the entire contents of a stolen or confiscated smartphone.

The good news is that to hack and install third-party software, you need physical access to the Apple phone and, in addition, after rebooting, everything will return to its place and the iPhone will be safe - this is the so-called tethered jailbreak. If your smartphone was taken away at the border and then returned, it is better not to tempt fate once again and reboot.

It was already mentioned above that in the latest standoff between the FBI and Apple over the Pensacola shooting, the company gave law enforcement iCloud backups from the suspects' phones. The fact that the FBI did not turn up its nose suggests that this data, unlike the blocked iPhone, was quite suitable for research.

It would be naive to think that this is an isolated case. In the first half of 2019 alone, investigators accessed almost 6,000 full iCloud backups of Apple smartphone users 1,568 times. In 90% of requests from government agencies, the company provided some data from iCloud, and there were about 18 thousand such requests in total during the same period.

This became possible after Apple quietly curtailed a project to provide end-to-end encryption of user iCloud backups two years ago. There is evidence that this was done after pressure from the FBI. However, there are also reasons to believe that the refusal is motivated by the desire to avoid a situation where users cannot access their own iCloud data due to a forgotten password.

The compromise with law enforcement and users has resulted in a mess in which it is not clear which data in iCloud is securely hidden and which is so-so. At the very least, it can be said that end-to-end encryption is used for the following categories.

Everything else, including Messages, may be read by Apple employees and relevant authorities.

A week ago, Chinese developer Pangu Team reported finding an unrecoverable bug, this time in the SEP (Secure Enclave Processor) chip. All iPhones with A7-A11 processors are at risk.

The SEP stores key information, literally. This includes cryptographic functions, authentication keys, biometric data, and the Apple Pay profile. It shares some RAM with the Application Processor, but the other part (known as TZ0) is encrypted.

SEP boot sequence.

SEP itself is an erasable 4MB AKF processor core (probably Apple Kingfisher), patent #20130308838. The technology used is similar to ARM TrustZone / SecurCore, but unlike it contains proprietary code for Apple KF cores in general and SEP in particular. It is also responsible for generating UID keys on A9 and newer chips, to protect user data in statics.

SEP has its own boot ROM and it is also write-protected, just like SecureROM / BootROM. That is, a vulnerability in SEPROM will have the same unpleasant and irreparable consequences. By combining a hole in SEPROM with the checkm8 exploit, which was mentioned above, it is possible to change the I / O mapping register to bypass memory isolation protection. In practical terms, this allows attackers to block the phone without the ability to unlock it.

Pangu hackers demonstrated that they can exploit a bug in the memory controller that controls the contents of the TZ0 register. They did not disclose all the details and source code, hoping to sell their discovery to Apple.

The already known to us information security researcher axi0mX wrote on Twitter that the vulnerability in SEPROM can only be used with physical access to the smartphone, as in the case of checkm8. More precisely, the very possibility of manipulating the contents of the TZ0 register depends on the presence of a hole in SecureROM / BootROM, since after the iPhone has booted normally, it is no longer possible to change the value of the TZ0 register. New iPhone models with SoC A12/A13 are not susceptible to the new vulnerability.

Source

In 2016, Apple refused to weaken iOS security so the FBI could unlock the San Bernardino shooter’s iPhone. Syed Farook’s seized phone was protected by a four-digit PIN code, and iOS can lock or wipe a device after ten failed attempts, so the FBI could not simply guess the code. It therefore demanded that Apple create a special OS build that would allow brute-force attacks against the security code…

At first, things did not go in Apple’s favor, with the United States District Court for the Central District of California siding with the investigators. Apple challenged the order, legal wrangling ensued, and eventually the case was dropped at the FBI’s initiative.

In the end, the feds got their way, reportedly with the help of Cellebrite, a private Israeli company specializing in digital forensics, paying more than $1 million for the job. Incidentally, nothing of value was found on the smartphone.

Strangely, four years later, history repeated itself almost exactly. In January 2020, none other than US Attorney General William Barr asked the company to help investigators gain access to the contents of the two iPhones used in the December 2019 shooting at the Pensacola Naval Air Station, Florida. Not surprisingly, Apple refused again.

It is worth emphasizing that in neither case was the dispute about a one-time handover of information by Apple. That part is not a problem: the company hands over metadata and iCloud backups in response to official, authorized requests from law enforcement agencies. What it refuses are demands to create and hand over a universal master key, a special iOS firmware that can unlock confiscated smartphones.

This is what provokes the strongest opposition from Apple management and from CEO Tim Cook personally, who reasonably believe that benign backdoors do not and cannot exist, and that protection of their mobile platform is either comprehensive or it is not protection at all. A master key in good hands very soon becomes a master key in dubious hands, and it may well be there from the very first day.

So, now we know that iOS does not have special loopholes created for law enforcement agencies. Does this mean that the iPhone is invulnerable to penetration and data theft?

BootROM vulnerability checkm8

At the end of September 2019, a security researcher known as axi0mX published exploit code on GitHub that works on almost all Apple devices with A5-A11 chips. What makes this vulnerability special is that it sits at the hardware level and cannot be fixed by any software update, because it lives in the secure boot mechanism itself: the BootROM, also known as SecureROM.

The iOS boot model from Apple's WWDC 2016 presentation.

During a cold boot, SecureROM is the first code to run, straight from read-only memory. It is the most trusted code in the Application Processor and is therefore executed without any checks, which is why iOS patches are powerless here. It is also extremely important that SecureROM is responsible for switching the device into recovery mode, DFU (Device Firmware Update), over the USB interface when a special key combination is pressed.

iOS entering DFU mode.

A use-after-free vulnerability occurs when a program references memory after that memory has been freed. This can lead to unpleasant consequences such as program crashes, unpredictable values, or, as in this case, execution of third-party code.
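The idea can be sketched with a toy model. Python itself is memory-safe, so the snippet below only simulates a heap as a list of slots; `ToyHeap` and the slot-reuse policy are assumptions made for illustration and have nothing to do with the real SecureROM allocator.

```python
class ToyHeap:
    """A tiny simulated heap that hands freed slots out again."""

    def __init__(self, slots):
        self.memory = [None] * slots
        self.free_slots = list(range(slots))

    def alloc(self, value):
        # Hand out the lowest free slot, so a freed slot is reused at once.
        slot = min(self.free_slots)
        self.free_slots.remove(slot)
        self.memory[slot] = value
        return slot  # plays the role of a pointer

    def free(self, slot):
        # The caller's copy of `slot` is NOT invalidated by this call.
        self.free_slots.append(slot)

    def read(self, slot):
        return self.memory[slot]


heap = ToyHeap(slots=4)
io_buffer = heap.alloc("firmware chunk")    # legitimate owner of the slot
heap.free(io_buffer)                        # the buffer is released...
attacker = heap.alloc("attacker payload")   # ...and the same slot is reused
stale_read = heap.read(io_buffer)           # the dangling "pointer" is still used
print(stale_read)  # prints: attacker payload
```

The old handle still works after `free`, but it now points at whatever the next allocation put there.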

To understand the exploit mechanism, we first need to understand how the system recovery mode works. When the smartphone enters DFU mode, initialization allocates an I/O buffer and creates a USB interface to handle DFU requests. When a Setup packet with bmRequestType=0x21, bRequest=1 arrives over USB during the Setup stage of a control transfer, the DFU code determines the address and block size and then copies the I/O buffer data to the boot memory area.

USB Control Transfer Setup Packet structure.
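For reference, a Setup packet is eight bytes long: two one-byte fields followed by three little-endian 16-bit fields. The sketch below packs the request mentioned in the text. `SetupPacket` is a helper name invented here, and the `wLength` value of 0x800 is only an illustrative block size, not a figure from the article.

```python
import struct
from typing import NamedTuple


class SetupPacket(NamedTuple):
    bmRequestType: int  # direction, type and recipient bit field (1 byte)
    bRequest: int       # request code (1 byte)
    wValue: int         # request-specific value (2 bytes)
    wIndex: int         # request-specific index (2 bytes)
    wLength: int        # number of bytes in the data stage (2 bytes)

    def pack(self):
        # Multi-byte fields travel little-endian on the wire.
        return struct.pack("<BBHHH", *self)


# The request from the text: bmRequestType=0x21, bRequest=1.
# wLength=0x800 is an assumed, illustrative block size.
download = SetupPacket(0x21, 1, 0, 0, 0x800)
raw = download.pack()
print(raw.hex())  # prints: 2101000000000008
```

In bmRequestType=0x21, bit 7 is clear (host to device), bits 6-5 are 01 (class request) and the low bits are 00001 (the recipient is an interface).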

The USB connection remains active until the firmware image has been loaded, after which DFU mode exits normally. There is also an abnormal exit path, triggered by sending a DFU abort request with bmRequestType=0x21, bRequest=4. On this path the global pointer to the data buffer and the block size are left in place even though the buffer itself is released, which creates a classic use-after-free situation.
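A toy state machine makes the flaw easier to see. This is a sketch of the behaviour described in the text, not of real SecureROM code: the class `ToyDfu`, the address 0x1000 and the 16-byte buffer are all made up for the example.

```python
class ToyDfu:
    """Toy model of the DFU flow described above; not real SecureROM code."""

    IO_BUFFER_ADDR = 0x1000  # hypothetical address, for illustration only

    def __init__(self):
        self.heap = {}         # address -> bytearray, stands in for the heap
        self.io_buffer = None  # global pointer to the I/O buffer
        self.block_size = 0    # global block size

    def enter(self):
        # DFU initialization allocates the I/O buffer.
        self.io_buffer = self.IO_BUFFER_ADDR
        self.heap[self.io_buffer] = bytearray(16)

    def setup_download(self, length):
        # Setup stage of request (0x21, 1): remember how much to copy.
        self.block_size = length

    def abort(self):
        # Abort (0x21, 4): the buffer is freed, but io_buffer and
        # block_size are left untouched. This is the flaw.
        del self.heap[self.io_buffer]

    def data_stage(self, data):
        # Copies through the saved pointer without checking it is still valid.
        target = self.heap[self.io_buffer]
        target[: self.block_size] = data[: self.block_size]


dfu = ToyDfu()
dfu.enter()
dfu.setup_download(4)
dfu.abort()

# Something unrelated is later allocated at the freed address.
victim = bytearray(b"trusted-object!!")
dfu.heap[ToyDfu.IO_BUFFER_ADDR] = victim

dfu.data_stage(b"EVIL")  # late data lands in the victim object
print(victim)  # prints: bytearray(b'EVILted-object!!')
```

A fix in this model would be one line: reset `io_buffer` and `block_size` inside `abort`.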

In essence, checkm8 exploits the use-after-free vulnerability in the DFU process to achieve arbitrary code execution. The process consists of several stages, but one of the most important is known as heap feng shui: arranging the heap layout in a controlled way so that the vulnerability becomes easier to exploit.

Running the ./ipwndfu -p command on macOS.
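The idea behind heap feng shui can be shown on a toy first-fit allocator. In the real exploit the heap is shaped through USB requests; here the allocator, the sizes and the `land_payload` helper are all assumptions chosen to keep the example small.

```python
class FirstFitHeap:
    """Toy allocator: returns the lowest address where the block fits."""

    def __init__(self, size):
        self.size = size
        self.blocks = {}  # start address -> length of live allocation

    def alloc(self, length):
        cursor = 0
        for start in sorted(self.blocks):
            if start - cursor >= length:
                break  # the gap before this block is large enough
            cursor = start + self.blocks[start]
        if cursor + length > self.size:
            raise MemoryError("heap exhausted")
        self.blocks[cursor] = length
        return cursor

    def free(self, start):
        del self.blocks[start]


def land_payload(groom):
    """Return (address of the freed buffer, address the payload lands at)."""
    heap = FirstFitHeap(0x100)
    neighbour = heap.alloc(0x20)   # ends up at 0x00
    io_buffer = heap.alloc(0x40)   # ends up at 0x20
    heap.alloc(0x20)               # ends up at 0x60, stays allocated
    heap.free(neighbour)
    heap.free(io_buffer)           # a stale pointer would still say 0x20
    if groom:
        heap.alloc(0x20)           # plug the lower hole first
    return io_buffer, heap.alloc(0x40)


stale, ungroomed = land_payload(groom=False)
_, groomed = land_payload(groom=True)
print(hex(stale), hex(ungroomed), hex(groomed))  # prints: 0x20 0x0 0x20
```

Without grooming, the payload lands at 0x0 and the stale pointer misses it. With one extra allocation plugging the lower hole, the payload lands exactly where the stale pointer still points.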

In practical terms, it all comes down to putting the iPhone into DFU mode and running a simple ./ipwndfu -p command. The Python script removes the lock and gives unauthorized access to the smartphone's entire file system. This makes it possible to install iOS software from third-party sources, so both intruders and law enforcement officers can gain access to the entire contents of a stolen or confiscated smartphone.

The good news is that hacking the phone and installing third-party software requires physical access to it. In addition, after a reboot everything returns to its original state and the iPhone is safe again: this is what is known as a tethered jailbreak. If your smartphone was taken from you at the border and then returned, do not tempt fate: reboot it.

iCloud and Almost Secure Backups

As mentioned above, in the latest standoff between the FBI and Apple over the Pensacola shooting, the company gave law enforcement iCloud backups from the suspects' phones. The fact that the FBI did not turn up its nose suggests that this data, unlike the locked iPhone, was perfectly usable for the investigation.

It would be naive to think that this is an isolated case. In the first half of 2019 alone, investigators obtained full iCloud backups on 1,568 occasions, covering almost 6,000 Apple users. In 90% of requests from government agencies, the company provided at least some data from iCloud, and there were about 18 thousand such requests in total during the same period.

This became possible after Apple quietly shelved a project to provide end-to-end encryption of user iCloud backups two years ago. There is evidence that this was done under pressure from the FBI. However, there are also reasons to believe the decision was motivated by the desire to avoid a situation where users cannot access their own iCloud data because of a forgotten password.

The compromise between law enforcement and users has resulted in a mess in which it is not clear which data in iCloud is securely hidden and which is less so. At the very least, it can be said that end-to-end encryption is used for the following categories.

- Home data.

- Medical data.

- iCloud Keychain (including saved accounts and passwords).

- Payment details.

- QuickType Keyboard learned vocabulary (requires iOS 11).

- Screen Time.

- Siri data.

- Wi-Fi passwords.

Everything else, including Messages, may be read by Apple employees and relevant authorities.

New hardware vulnerability

A week ago, the Chinese developers Pangu Team reported finding an unpatchable bug, this time in the SEP (Secure Enclave Processor) chip. All iPhones with A7-A11 processors are at risk.

The SEP stores key information, literally. This includes cryptographic functions, authentication keys, biometric data, and the Apple Pay profile. It shares part of its RAM with the Application Processor, but the other part (known as TZ0) is encrypted.

SEP boot sequence.

The SEP itself is an erasable 4 MB AKF processor core (probably Apple Kingfisher), described in patent #20130308838. The technology is similar to ARM TrustZone / SecurCore, but unlike them it runs proprietary code written for Apple KF cores in general and for the SEP in particular. It is also responsible for generating UID keys on A9 and newer chips, which protect user data at rest.

The SEP has its own boot ROM, which is write-protected just like SecureROM / BootROM. In other words, a vulnerability in SEPROM has the same unpleasant and irreparable consequences. By combining a hole in SEPROM with the checkm8 exploit mentioned above, it is possible to change the I/O mapping register and bypass memory isolation protection. In practical terms, this allows attackers to lock the phone with no way of unlocking it.

The Pangu hackers demonstrated that they can exploit a bug in the memory controller that governs the contents of the TZ0 register. They did not disclose all the details or the source code, hoping to sell their discovery to Apple.

axi0mX, the security researcher we met earlier, wrote on Twitter that the SEPROM vulnerability can only be used with physical access to the smartphone, as is the case with checkm8. More precisely, the ability to manipulate the contents of the TZ0 register depends on a hole in SecureROM / BootROM, because once the iPhone has booted normally the value of the TZ0 register can no longer be changed. Newer iPhone models with A12/A13 SoCs are not susceptible to the new vulnerability.
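Why the SEPROM bug depends on a BootROM hole can be sketched with a toy lockable register. This is a simplified model of the reasoning above, not of the actual hardware: `LockableRegister`, `boot` and the register values are hypothetical.

```python
class LockableRegister:
    """Toy register that accepts writes only until it is locked."""

    def __init__(self, value):
        self.value = value
        self.locked = False

    def write(self, value):
        if self.locked:
            return False  # write is ignored once the lock is set
        self.value = value
        return True

    def lock(self):
        self.locked = True


def boot(bootrom_compromised):
    # 0x80000000 is a made-up value standing in for the protected TZ0 range.
    tz0 = LockableRegister(0x80000000)
    if bootrom_compromised:
        # Attacker code (for example via checkm8) runs before the lock.
        tz0.write(0x0)
    tz0.lock()  # a normal boot locks the register from here on
    return tz0


normal = boot(bootrom_compromised=False)
late_write_ok = normal.write(0x0)  # attempted after a normal boot
pwned = boot(bootrom_compromised=True)
print(late_write_ok, hex(normal.value), hex(pwned.value))
# prints: False 0x80000000 0x0
```

In this model the only window for changing the value is before the lock, which is exactly the stage a BootROM exploit reaches.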

Source