Carding

Will attackers notice an "invisible" revolution in the world of generated images?

AI is getting better at creating realistic images and is increasingly being used for disinformation. This is especially critical for Americans ahead of the 2024 presidential election, where a single plausible picture can turn voters against a candidate.

Technology companies are actively improving their tools for detecting fake photos (deepfakes), but few of those tools can be called perfect. Google seems to want to fix the situation: today it introduced a tool called "SynthID".

Instead of just analyzing finished images and estimating the probability that they were created by artificial intelligence, Google offers an obvious but nonetheless elegant solution.

SynthID will embed a digital watermark in images that is invisible to humans but easily recognized by AI. Google believes this is a key step toward controlling fakes and misinformation spread through generated images.

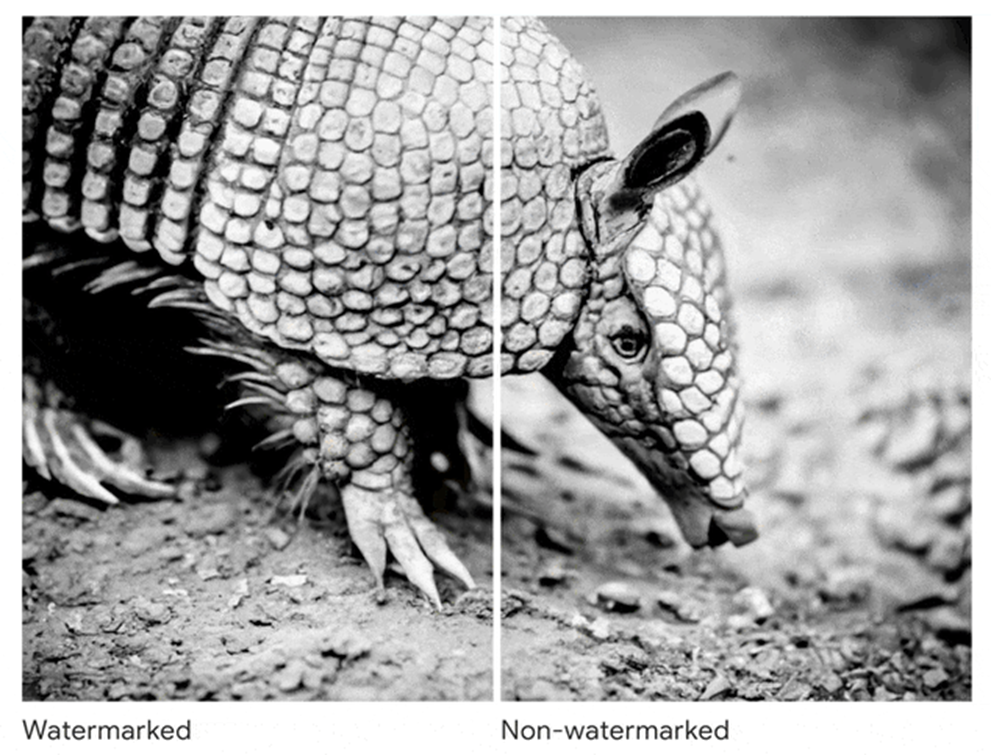

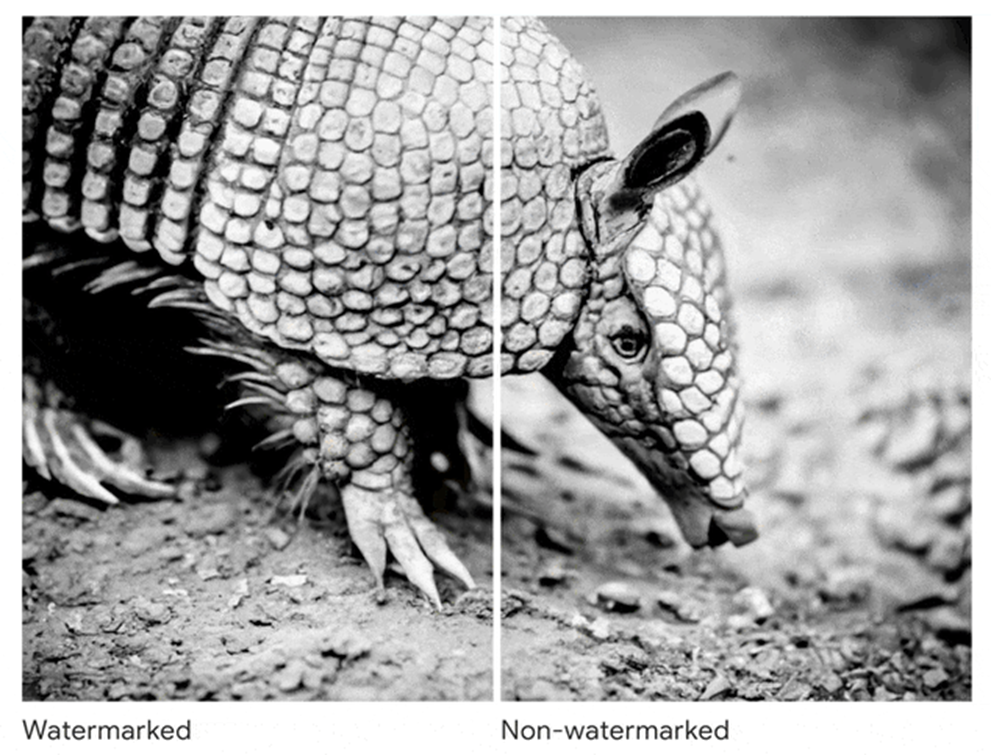

Google slide: there is a watermark on the left, but not on the right

AI image generators have been creating plausible "deepfakes" for several years now. Attempts to watermark their output were made earlier, but those earlier watermarks were visible and easily removed in tools such as Photoshop.

Now the emphasis is on the fact that a person will not be able to see the watermark (even when manipulating image parameters), and therefore will not be able to remove it in order to spread deliberately false information. Specialized services, however, will be able to identify a generated image in a couple of clicks, dispelling the veil of deception.

Interestingly, Google hasn't revealed exactly how its watermark technology works. This is apparently deliberate, so as not to give attackers a hint about how to remove the new watermarks from images. I wonder whether hackers will crack Google's scheme on their own, even if it takes some time.

So far, the new tool is available only in Imagen, Google's own generative neural network. It is likely that in the future the tech giant will share the technology with major market players such as Midjourney, Stable Diffusion and DALL-E, whose images now make up the majority of all generated visual content.

Otherwise, those players will probably develop their own analogs of this technology eventually. There have been moves in this direction for a long time: Microsoft, for example, has created a coalition to develop a standard for labeling AI content.

Thus, introducing invisible watermarks into images generated by artificial intelligence should make it possible to identify fake content effectively and to combat misinformation. Although attackers may eventually learn to bypass this protection, technology companies are likely to keep improving their tagging methods and to significantly curb the flow of fake content.
