
Companies that track users' online activities need to reliably identify each person without their knowledge, and browser fingerprinting is ideal for this. No one will notice if a web page quietly draws a graphic on a canvas or generates a zero-volume audio signal and then measures the parameters of the result.

The method works by default in all browsers except Tor. It does not require any user permissions.

Total tracking

Recently, NY Times journalist Kashmir Hill discovered that a little-known company called Sift had amassed a 400-page dossier on her. It included several years of purchase history, all of her messages to Airbnb hosts, a log of Coinbase app launches on her phone, IP addresses, pizza orders placed from an iPhone, and much more. Several scoring firms collect similar data, taking into account up to 16,000 factors when compiling a “trust rating” for each user. Sift trackers are installed on 34,000 websites and mobile applications.

Because tracking cookies and scripts do not always work, or are blocked on the client side, user tracking is supplemented with fingerprinting: a set of methods for obtaining a unique “print” of a browser and system. The list of installed fonts and plugins, the screen resolution, and other parameters together provide enough bits of information to derive a unique ID. Fingerprinting via canvas works particularly well.

Fingerprinting via Canvas API

The web page instructs the browser to draw a graphical object made of several elements: here, a rectangle, a line of text and two overlapping arcs.

Code:

<canvas class="canvas"></canvas>

Code:

const canvas = document.querySelector('.canvas');
const ctx = canvas.getContext('2d');

// Use additive ('lighter') blending so that overlapping shapes mix their colors
ctx.globalCompositeOperation = 'lighter';

// Render a blue rectangle
ctx.fillStyle = 'rgb(0, 0, 255)';
ctx.fillRect(25, 65, 100, 20);

// Render black text: "Hello, OpenGenus"
const txt = 'Hello, OpenGenus';
ctx.font = "14px 'Arial'";
ctx.fillStyle = 'rgb(0, 0, 0)';
ctx.fillText(txt, 25, 110);

// Render arcs: green half-circle, then red circle
ctx.fillStyle = 'rgb(0, 255, 0)';
ctx.beginPath();
ctx.arc(50, 50, 50, 0, Math.PI * 3, true);
ctx.closePath();
ctx.fill();

ctx.fillStyle = 'rgb(255, 0, 0)';
ctx.beginPath();
ctx.arc(100, 50, 50, 0, Math.PI * 2, true);
ctx.closePath();
ctx.fill();

The result looks something like this:

[Image: The Canvas API]

The toDataURL() method returns a data URI that encodes this image:

Code:

console.log(canvas.toDataURL());

/*
Outputs something like:
"data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAUAAAAFCAYAAACNbymblAAAWDElEQVQImWNgoBMAAABpAAFEI8ARexAAAElFTkSuQmCC"
*/

This URI differs from system to system, because the exact pixels depend on the graphics hardware, drivers, installed fonts and anti-aliasing. The URI is then hashed and combined with other data that together make up the system's unique fingerprint, including:

[LIST]

[*]installed fonts (about 4.37 bits of identifying information);

[*]installed browser plugins (3.08 bits);

[*]HTTP_ACCEPT headers (16.85 bits);

[*]user-agent;

[*]language;

[*]time zone;

[*]screen size;

[*]camera and microphone;

[*]OS version;

[*]and others.

[/LIST]
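To make the combining step concrete, here is a minimal sketch under our own assumptions: the component names and values are hypothetical placeholders rather than real readings, and FNV-1a (a simple, non-cryptographic 32-bit hash) stands in for whatever hash a real tracker uses. Keys are sorted before serialization so that the same attributes always produce the same ID, regardless of the order in which they were collected.

```javascript
// FNV-1a (32-bit): a simple non-cryptographic string hash, used here as a
// stand-in; real scripts may use MurmurHash3, SHA-256 or something else.
function fnv1a32(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (const byte of new TextEncoder().encode(str)) {
    hash ^= byte;
    hash = Math.imul(hash, 0x01000193); // multiply by the FNV prime, mod 2^32
  }
  return hash >>> 0; // reinterpret as an unsigned 32-bit integer
}

// Hypothetical helper: serialize the attributes in a fixed (sorted) key
// order and hash the result into an 8-digit hex ID.
function combineFingerprint(components) {
  const serialized = Object.keys(components)
    .sort()
    .map((key) => `${key}=${components[key]}`)
    .join(';');
  return fnv1a32(serialized).toString(16).padStart(8, '0');
}

// Placeholder values only. In a page they would come from calls such as
// fnv1a32(canvas.toDataURL()), navigator.userAgent, navigator.language,
// Intl.DateTimeFormat().resolvedOptions().timeZone and screen.width/height.
const id = combineFingerprint({
  canvasHash: fnv1a32('data:image/png;base64,iVBORw0KGgo...'),
  userAgent: 'Mozilla/5.0 (X11; Linux x86_64)',
  language: 'en-US',
  timeZone: 'Europe/Berlin',
  screen: '1920x1080',
});
console.log(id); // an 8-character hex string; the exact value depends on the inputs
```

Changing any single attribute changes the serialized string and therefore the ID, which is why a fingerprint can drift when, for example, the browser is updated.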

The canvas fingerprint hash adds another 4.76 bits of identifying information, and the WebGL fingerprint hash adds 4.36 bits.
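For intuition about these figures: a value shared by one browser in N carries log2 N bits of identifying information (its surprisal). The sketch below does the arithmetic under a loudly stated simplification: it treats the components as statistically independent so their bits simply add, which overstates the total because real attributes are correlated. The helper names are our own.

```javascript
// Surprisal: a value seen in a fraction p of browsers carries -log2(p) bits.
function surprisalBits(p) {
  return -Math.log2(p);
}

// ASSUMPTION: components are independent, so their bits add up.
function totalBits(bitsList) {
  return bitsList.reduce((sum, bits) => sum + bits, 0);
}

// A fingerprint carrying B bits singles out roughly one browser in 2^B.
function oneIn(bits) {
  return Math.round(2 ** bits);
}

// Fonts, plugins, canvas and WebGL figures quoted above
const combined = totalBits([4.37, 3.08, 4.76, 4.36]);
console.log(combined.toFixed(2)); // "16.57"
console.log(oneIn(combined)); // about one browser in 97,000

// A value shared by 1 browser in 1024 carries 10 bits
console.log(surprisalBits(1 / 1024)); // 10

// About 33 bits suffice to tell apart one person among 8 billion
console.log(surprisalBits(1 / 8e9).toFixed(1)); // "32.9"
```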

[B][URL='https://panopticlick.eff.org/']Fingerprinting Test[/URL][/B]

Recently, another item has joined this set: [URL='https://iq.opengenus.org/audio-fingerprinting/']audio fingerprint via the AudioContext API[/URL].

Back in 2016, this identification method was already [URL='https://webtransparency.cs.princeton.edu/webcensus/audio_fp_scripts.html']used by hundreds of sites[/URL], including Expedia and Hotels.com.

[HEADING=2]Fingerprinting via AudioContext API[/HEADING]

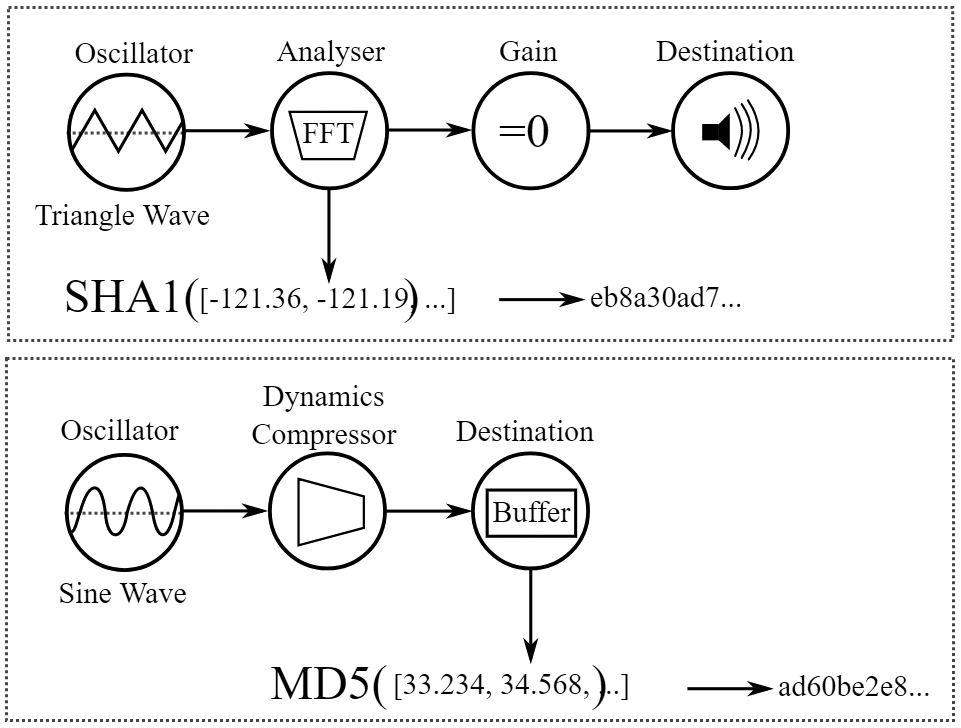

The approach is the same: the browser performs a task, and the script records the result and computes a unique hash (the fingerprint). The difference is that this time the data comes from the audio stack: instead of the Canvas API, the script calls the [URL='https://developer.mozilla.org/en-US/docs/Web/API/AudioContext']AudioContext API[/URL], an interface of the Web Audio API supported by all modern browsers.

The browser generates a low-frequency audio signal, which is processed according to the sound settings and hardware of the device. No sound is recorded or played, and neither the speakers nor the microphone are used.

The advantage of this fingerprinting method is that it is browser-independent, so it can track the user even after they switch from Chrome to Firefox, then to Opera, and so on.

[B][URL='https://audiofingerprint.openwpm.com/']Fingerprint test via AudioContext API[/URL][/B]

[IMG]https://habrastorage.org/r/w1560/webt/4m/to/nw/4mtonwby5anqyewek_e_n1ro2jk.png[/IMG]

How to get the fingerprint, following a [URL='https://iq.opengenus.org/audio-fingerprinting/']step-by-step guide[/URL]:

1. First, you need to create an array to store the frequency values.

Code:

const freq_data = [];

2. Next, an AudioContext object is created, along with the nodes that generate the signal and collect data through the AudioContext object's built-in methods.

Code:

// Create nodes
const ctx = new AudioContext(); // AudioContext object
const oscillator = ctx.createOscillator(); // OscillatorNode
const analyser = ctx.createAnalyser(); // AnalyserNode
const gain = ctx.createGain(); // GainNode
// ScriptProcessorNode: 4096-sample buffer, 1 input and 1 output channel
// (deprecated in favor of AudioWorklet, but still widely supported)
const scriptProcessor = ctx.createScriptProcessor(4096, 1, 1);

3. Turn off the volume and connect the nodes to each other.

Code:

// Mute the output
gain.gain.value = 0;

// Connect oscillator output (OscillatorNode) to analyser input
oscillator.connect(analyser);
// Connect analyser output (AnalyserNode) to scriptProcessor input
analyser.connect(scriptProcessor);
// Connect scriptProcessor output (ScriptProcessorNode) to gain input
scriptProcessor.connect(gain);
// Connect gain output (GainNode) to the AudioContext destination
gain.connect(ctx.destination);

4. Using the ScriptProcessorNode, we create a function that collects frequency data during audio processing.

- The function creates a typed array (Float32Array) whose length equals the number of frequency bins in the AnalyserNode (frequencyBinCount), and fills it with the current frequency values.

- These values are then copied into the array created earlier (freq_data) so they can easily be written to the output.

- Finally, the nodes are disconnected and the result is logged.

Code:

scriptProcessor.onaudioprocess = function () {
  // One value per frequency bin
  const bins = new Float32Array(analyser.frequencyBinCount);
  // Fill the array with the current frequency data (in decibels)
  analyser.getFloatFrequencyData(bins);
  // Copy frequency data to the 'freq_data' array
  for (let i = 0; i < bins.length; i++) {
    freq_data.push(bins[i]);
  }
  // Collect only one buffer's worth of data
  scriptProcessor.onaudioprocess = null;
  // Disconnect the nodes from each other
  analyser.disconnect();
  scriptProcessor.disconnect();
  gain.disconnect();
  // Log output of frequency data
  console.log(freq_data);
};

5. We start playing the tone so that the sound is generated and processed by the function.

Code:

// Start playing the tone
oscillator.start(0);

The result is something like this:

Code:

/*
Output:
[
  -119.79788967947266, -119.29875891113281, -118.90072674835938,
  -118.08164726269531, -117.02244567871094, -115.73435120521094,
  -114.24555969238281, -112.56678771972656, -110.70404089034375,
  -108.64968109130886, ...
]
*/

This combination of values is hashed to create a fingerprint, which is then combined with other identifying bits.
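The guide does not specify how the values are hashed. As a hedged illustration, the sketch below uses a simpler reduction that some audio fingerprinting scripts apply to raw audio samples instead of a true hash: summing the absolute values into a single number. Here we apply it to the frequency data collected above. Tiny, platform-specific differences in the floating-point output shift the sum, so the number can serve as an identifier on its own or be fed into a hash. The function name audioFingerprint is our own.

```javascript
// Hypothetical helper: collapse the collected frequency data into one number.
// Summing absolute values is an illustration, not necessarily what any
// particular tracker does with frequency data.
function audioFingerprint(freqData) {
  let sum = 0;
  for (const value of freqData) {
    // getFloatFrequencyData() can report -Infinity dB (e.g. for silent bins);
    // skip non-finite values so they don't swamp the sum
    if (Number.isFinite(value)) {
      sum += Math.abs(value);
    }
  }
  return sum;
}

// The first values from the sample output above
const sample = [-119.79788967947266, -119.29875891113281, -118.90072674835938];
console.log(audioFingerprint(sample));
```

In the page, this would be called as audioFingerprint(freq_data) once the onaudioprocess handler has filled the array.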

To protect against this kind of tracking, you can use extensions such as AudioContext Fingerprint Defender, which mix random noise into the fingerprint so that it no longer stays stable.
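The idea behind such defenses can be sketched as follows. This is our own illustration of noise injection, not the extension's actual code; the default amplitude and the injectable random source are assumptions made for the sketch. Because every read gets fresh noise, two reads of the same audio data no longer hash to the same value, while the audible result is practically unchanged.

```javascript
// Illustrative noise injection: perturb every sample by a tiny random amount.
// ASSUMPTIONS: the default amplitude and the injectable `random` source are
// choices made for this sketch, not taken from any real extension.
function addNoise(samples, amplitude = 1e-7, random = Math.random) {
  // random() is in [0, 1), so each offset lies in [-amplitude, amplitude)
  return Array.from(samples, (value) => value + (random() * 2 - 1) * amplitude);
}

const original = [-119.79788967947266, -119.29875891113281, -118.90072674835938];
const read1 = addNoise(original);
const read2 = addNoise(original);
// With overwhelming probability the two reads differ, so any hash or sum
// computed over them differs too
console.log(read1.join(',') === read2.join(','));
```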

The NY Times lists the addresses where you can contact tracking firms and ask to see the information they have collected about you:

- Zeta Global: online form

- Retail Equation: returnactivityreport@theretailequation.com

- Riskified: privacy@riskified.com

- Kustomer: privacy@kustomer.com

- Sift: privacy@sift.com (the online form was deactivated after the article was published)
