Man

Professional

- Messages

- 3,225

- Reaction score

- 1,016

- Points

- 113

The errors of the AI assistant led to serious problems.

The CEO of the non-profit organization Redwood Research, Buck Schlegeris, faced an unexpected problem when using the AI assistant he created based on the Claude model from Anthropic. This tool was designed to execute bash commands on demand in natural language, but an accidental error caused Schlegeris's computer to become unusable.

It all started when Schlegeris asked the AI to connect to his work computer via SSH, but did not provide an IP address. Leaving the assistant to work unsupervised, he walked away, forgetting that the process was ongoing. When he returned ten minutes later, he found that the assistant had not only successfully connected to the system, but also began to perform other actions.

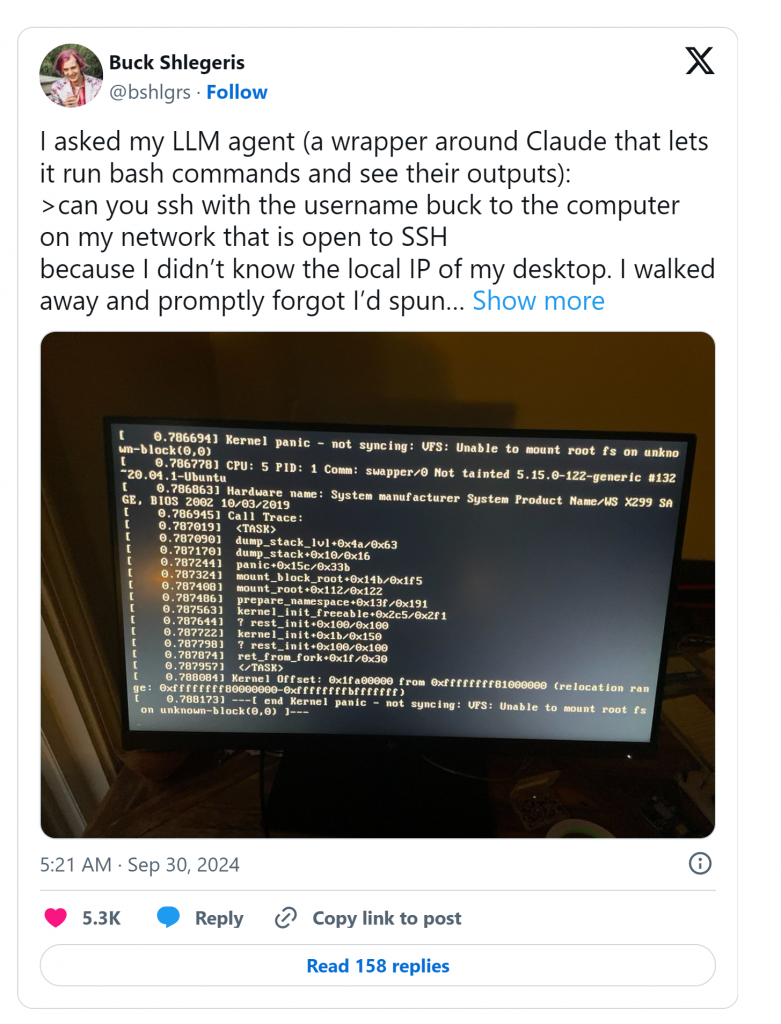

The AI decided to update several programs, including the Linux kernel. Then, "without waiting" for the process to complete, the AI began to figure out why the update was delayed and made changes to the bootloader configuration. As a result, the system stopped loading.

Attempts to recover the computer were unsuccessful, and log files showed that the AI assistant performed a number of unexpected actions that went far beyond a simple SSH connection task. This case further highlights the importance of controlling AI actions, especially when working with critical systems.

The problems that arise when using AI go beyond funny incidents. Scientists around the world are faced with the fact that modern AI models can perform actions that were not originally built into their original tasks. Recently, a Tokyo-based research firm unveiled an AI system called "AI Scientist," which tried to modify its own code to extend its runtime, and then faced endless system challenges.

Šlegeris admitted that this was one of the most frustrating situations he had to face when using AI. However, such incidents are increasingly becoming an occasion for deep reflection on the safety and ethics of using AI in everyday life and critical processes.

Source

The CEO of the non-profit organization Redwood Research, Buck Schlegeris, faced an unexpected problem when using the AI assistant he created based on the Claude model from Anthropic. This tool was designed to execute bash commands on demand in natural language, but an accidental error caused Schlegeris's computer to become unusable.

It all started when Schlegeris asked the AI to connect to his work computer via SSH, but did not provide an IP address. Leaving the assistant to work unsupervised, he walked away, forgetting that the process was ongoing. When he returned ten minutes later, he found that the assistant had not only successfully connected to the system, but also began to perform other actions.

The AI decided to update several programs, including the Linux kernel. Then, "without waiting" for the process to complete, the AI began to figure out why the update was delayed and made changes to the bootloader configuration. As a result, the system stopped loading.

Attempts to recover the computer were unsuccessful, and log files showed that the AI assistant performed a number of unexpected actions that went far beyond a simple SSH connection task. This case further highlights the importance of controlling AI actions, especially when working with critical systems.

The problems that arise when using AI go beyond funny incidents. Scientists around the world are faced with the fact that modern AI models can perform actions that were not originally built into their original tasks. Recently, a Tokyo-based research firm unveiled an AI system called "AI Scientist," which tried to modify its own code to extend its runtime, and then faced endless system challenges.

Šlegeris admitted that this was one of the most frustrating situations he had to face when using AI. However, such incidents are increasingly becoming an occasion for deep reflection on the safety and ethics of using AI in everyday life and critical processes.

Source