Tomcat

Researchers have uncovered a new weapon for hackers to break into ML systems.

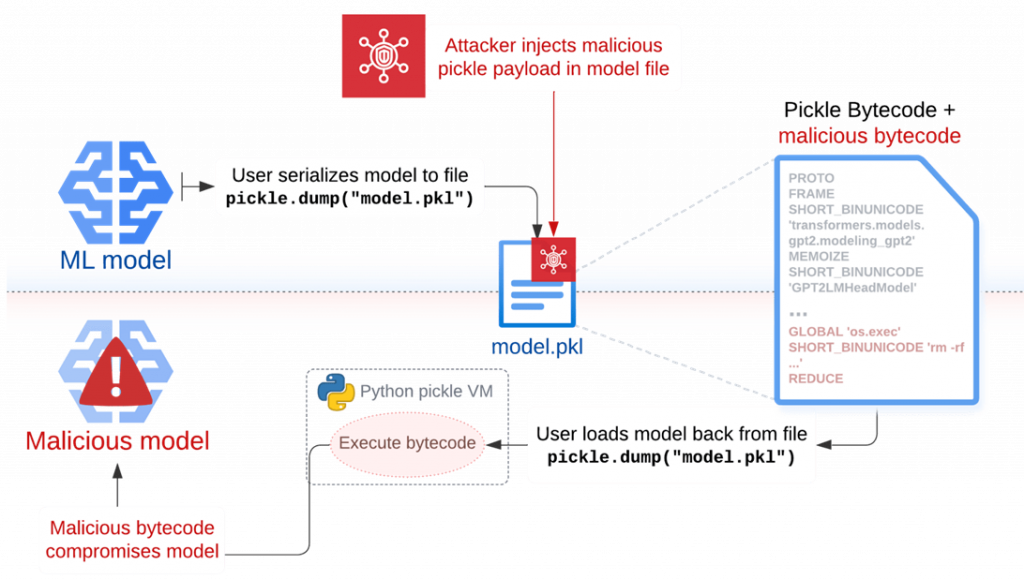

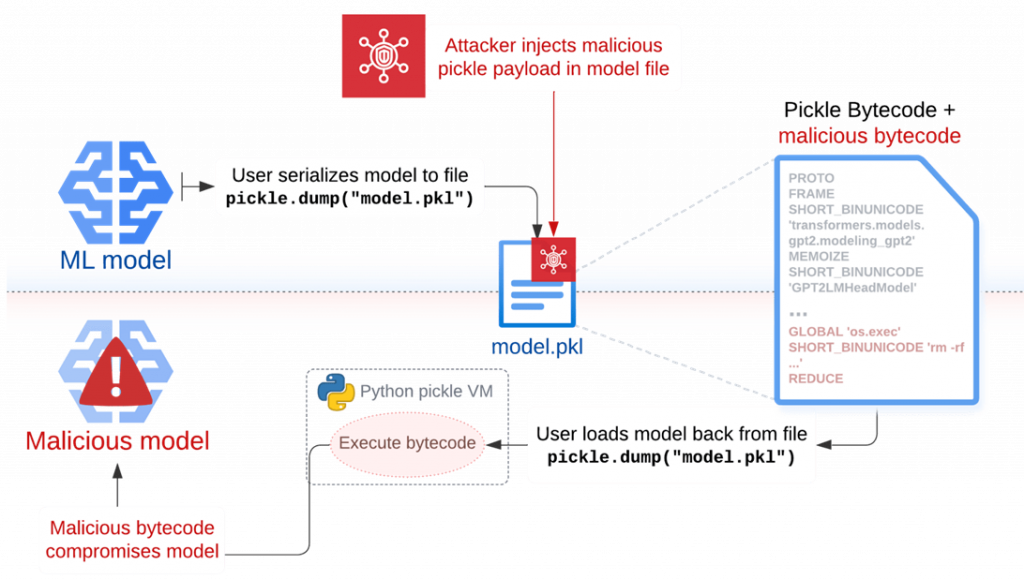

A recent study by Trail of Bits describes a new attack technique against machine learning (ML) models called "Sleepy Pickle". The attack abuses the popular Pickle format, which is widely used to package and distribute ML models, and poses a serious supply chain risk that extends to an organization's downstream customers.

Security researcher Boyan Milanov described Sleepy Pickle as a stealthy, novel attack technique that targets the ML model itself rather than the underlying system.

The Pickle format is widely used by ML libraries such as PyTorch, yet simply loading a Pickle file can execute arbitrary code, which makes untrusted Pickle files a serious threat.
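To make the risk concrete, here is a minimal, harmless Python sketch (not taken from the Trail of Bits research). An object's `__reduce__` method tells pickle which callable to invoke when the data is loaded, so `pickle.loads` itself performs that call. The class name `LooksLikeAModel` is hypothetical, and `eval` on a harmless arithmetic string stands in for an attacker's payload.

```python
import pickle


class LooksLikeAModel:
    """Hypothetical stand-in for a model file: pickling it records a function call, not data."""

    def __reduce__(self):
        # Tells pickle: "to rebuild this object, call eval('6 * 7')".
        # A real attack would name a dangerous callable here instead.
        return (eval, ("6 * 7",))


blob = pickle.dumps(LooksLikeAModel())
result = pickle.loads(blob)  # eval("6 * 7") runs here, inside pickle.loads
print(result)  # 42
```

The caller never asked for `eval` to run; merely loading the bytes was enough.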

The Hugging Face documentation recommends downloading models only from verified users and organizations, relying only on signed commits, and, for added security, loading models from TensorFlow or JAX formats using the "from_tf=True" autoconversion mechanism.

The Sleepy Pickle attack works by injecting a malicious payload into a Pickle file with a tool such as Fickling, then delivering the file to the target system through adversary-in-the-middle (AitM) attacks, phishing, supply chain compromise, or exploitation of a system weakness.
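Fickling is a dedicated tool for analyzing and editing pickle files, and its interface is not reproduced here. As a rough, standard-library stand-in for the kind of static inspection such tools perform, the sketch below walks a pickle's opcodes with `pickletools.genops` and lists every global (module and name) the file would import, without ever loading it. The function name `imported_globals` is hypothetical, and resolving `STACK_GLOBAL` from the two most recently pushed strings is a simplifying heuristic: it matches what Python's own pickler emits but could be fooled by a hand-crafted file.

```python
import pickle
import pickletools

# Opcodes that push a string argument onto the unpickler's stack.
STRING_OPS = {"STRING", "BINSTRING", "SHORT_BINSTRING",
              "UNICODE", "BINUNICODE", "SHORT_BINUNICODE", "BINUNICODE8"}
PUT_OPS = {"PUT", "BINPUT", "LONG_BINPUT"}
GET_OPS = {"GET", "BINGET", "LONG_BINGET"}
# Opcodes that leave the stack unchanged (protocol and framing markers).
FRAMING_OPS = {"PROTO", "FRAME", "STOP"}


def imported_globals(data: bytes) -> list[str]:
    """Return 'module.name' for every global the pickle would import, without loading it."""
    found: list[str] = []
    pushed: list[object] = []  # values in push order: the string, or None for anything else
    memo: dict[int, object] = {}
    for opcode, arg, _pos in pickletools.genops(data):
        op = opcode.name
        if op in ("GLOBAL", "INST"):
            # Protocols 0-3: module and name are inline, e.g. "builtins eval".
            module, name = arg.split(" ", 1)
            found.append(f"{module}.{name}")
            pushed.append(None)
        elif op == "STACK_GLOBAL":
            # Protocol 4+: module and name are the two previously pushed strings (heuristic).
            last_two = pushed[-2:]
            if len(last_two) == 2 and all(isinstance(v, str) for v in last_two):
                found.append(f"{last_two[0]}.{last_two[1]}")
            else:
                found.append("<unresolved STACK_GLOBAL>")
            pushed.append(None)
        elif op in STRING_OPS:
            pushed.append(arg)
        elif op == "MEMOIZE":
            memo[len(memo)] = pushed[-1] if pushed else None
        elif op in PUT_OPS:
            memo[arg] = pushed[-1] if pushed else None
        elif op in GET_OPS:
            pushed.append(memo.get(arg))
        elif op not in FRAMING_OPS:
            pushed.append(None)  # any other opcode: treat its result as a non-string
    return found


class Payload:
    """Hypothetical toy payload: asks pickle to call eval('6 * 7') on load (never loaded here)."""

    def __reduce__(self):
        return (eval, ("6 * 7",))


malicious = {p: imported_globals(pickle.dumps(Payload(), protocol=p)) for p in (3, 4, 5)}
benign = imported_globals(pickle.dumps({"weights": [0.5, -1.2], "bias": 0.0}))
print(malicious)  # {3: ['builtins.eval'], 4: ['builtins.eval'], 5: ['builtins.eval']}
print(benign)     # []
```

An unexpected entry such as `builtins.eval` in a file that should only hold weights is a red flag; an empty or expected list, however, does not prove a file is safe.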

During deserialization on the victim's system, the malicious code executes and modifies the model: it can insert backdoors, control the model's outputs, or tamper with processed data before it is returned to the user. Attackers can thus alter the model's behavior and manipulate its input and output data, with potentially harmful consequences.
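The sequence above can be illustrated with a deliberately harmless Python toy. This is an assumption-laden sketch, not Trail of Bits' code (their payloads are built with Fickling and target real PyTorch models): the pickle carries the genuine model together with a payload that runs inside `pickle.loads`, edits the model, and returns it, so the caller receives an object of the expected type. The dictionary "model", `predict`, and `SleepyWrapper` are all hypothetical, and the bias shift stands in for a backdoor or output manipulation.

```python
import pickle

# Hypothetical toy "model": a linear scorer stored as plain Python data.
genuine_model = {"weights": [0.5, -1.2], "bias": 0.0}


def predict(model, features):
    """Score = w . x + b for the toy model above."""
    return sum(w * x for w, x in zip(model["weights"], features)) + model["bias"]


class SleepyWrapper:
    """Toy illustration: the pickle carries the real model plus a payload that edits it on load."""

    def __init__(self, model):
        self.model = model

    def __reduce__(self):
        # Evaluated inside pickle.loads: shift the bias (a crude stand-in for a backdoor),
        # then return the same dict so the caller sees an ordinary model.
        payload = "model.update(bias=model['bias'] + 100.0) or model"
        return (eval, (payload, {"model": self.model}))


tampered_blob = pickle.dumps(SleepyWrapper(genuine_model))
loaded = pickle.loads(tampered_blob)

print(type(loaded).__name__)  # dict, the same type as the genuine model
print(predict(genuine_model, [1.0, 1.0]))
print(predict(loaded, [1.0, 1.0]))
```

Nothing about `loaded` looks unusual to the caller; only its outputs differ, which is what makes this class of tampering hard to spot.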

In a hypothetical attack, the compromised model could generate harmful output or misinformation that puts users' safety at risk, or could enable data theft and other malicious activity. The researchers gave the following example scenario:

- an attacker injects the claim "bleach cures colds" into the model;

- the user asks the question: "what can I do to get rid of a cold?";

- the model recommends a hot drink with lemon, honey and bleach.

Trail of Bits notes that Sleepy Pickle can be used to maintain covert access to ML systems in a way that evades detection tools, because the model is compromised at the moment the Pickle file is loaded in the Python process.

This technique is more effective than directly uploading a malicious model to Hugging Face, because it lets attackers change the model's behavior or outputs dynamically, without having to lure victims into downloading and running their files. Milanov emphasizes that the attack can reach a wide range of targets, since controlling any Pickle file in the supply chain is enough to attack an organization's models.

Sleepy Pickle demonstrates that advanced model-level attacks can exploit supply chain weaknesses, which highlights the need for stronger security measures when working with machine learning models.
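One generic hardening step, taken from the "Restricting Globals" section of the Python `pickle` documentation rather than from the Trail of Bits research, is to subclass `pickle.Unpickler` and override `find_class` so that only explicitly allowlisted globals can be resolved. The allowlist and the names `RestrictedUnpickler`, `restricted_loads`, and `Payload` are illustrative assumptions; real model files reference library classes, so a usable allowlist would be larger and would need careful review.

```python
import builtins
import io
import pickle

# Illustrative allowlist, modeled on the "Restricting Globals" example in the pickle docs.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Called for every global the pickle asks to import; refuse anything off the allowlist.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but only allowlisted globals can be resolved."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    """Hypothetical toy payload that asks pickle to call eval on load."""

    def __reduce__(self):
        return (eval, ("6 * 7",))


weights = restricted_loads(pickle.dumps({"weights": [0.5, -1.2], "bias": 0.0}))
print(weights)

try:
    restricted_loads(pickle.dumps(Payload()))
    outcome = "loaded"
except pickle.UnpicklingError as exc:
    outcome = f"blocked: {exc}"
print(outcome)  # blocked: global 'builtins.eval' is forbidden
```

Restricting globals narrows what a malicious file can call, but it is a mitigation rather than a guarantee; the recommendations on trusted sources and signed commits still apply.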