Some people rely on their eyesight to identify the person they are talking to. This is an extremely unreliable approach if the image on the screen can be faked. In the era of digital video communication, a person’s appearance, voice, and behavior have ceased to perform the function of reliable identification.

In recent years, several effective tools for forging a video stream have been developed, including Avatarify Desktop and Deepfake Offensive Toolkit. Thus, attackers have every opportunity to fake the face and voice of any person during a call. Security specialists and users should understand how elementary such an operation is.

Avatarify Desktop

One of the first open-source tools for video spoofing was Avatarify Desktop, released in 2020. It requires Windows 10; a GeForce 1070 or better graphics card and a webcam are recommended (the current version of the program is 0.10, 897 MB).

After installing the program, you need to configure it by connecting a webcam and placing your face in the center of the frame, and then choosing a suitable avatar from the set below: Einstein, Steve Jobs, Mona Lisa, etc. Conference call spoofing is performed through a virtual camera. In the settings of Zoom or another video conferencing program, Avatarify Camera

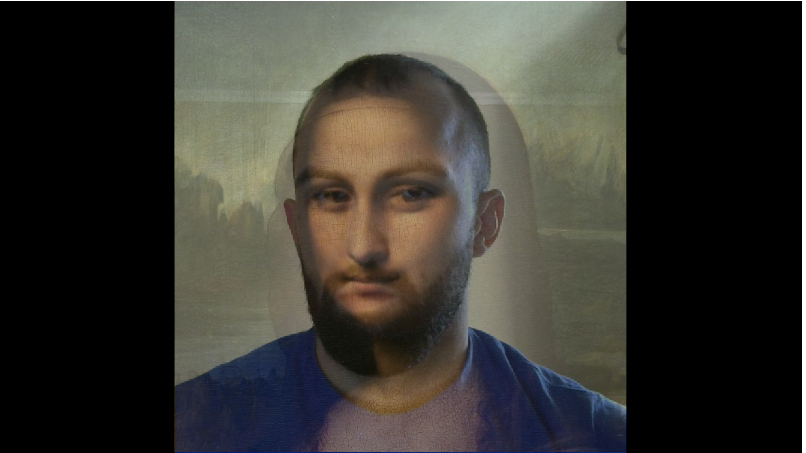

should be specified as the video source. You can upload any avatar to the program, that is, a photo of any person. The developer explains that spoofing looks most realistic if the pose in the photo matches the real one. To combine the source with the avatar, the Overlay slider is used , which regulates the transparency of the superimposed layers (on the KDPV).
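The effect of a transparency slider like Overlay can be illustrated with ordinary alpha blending. The sketch below is not taken from Avatarify's code: the function name `blend_frames`, the frame representation (nested lists of RGB tuples), and the convention that 0.0 shows only the webcam source and 1.0 only the avatar are all assumptions made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, ...]       # one RGB pixel, channels in 0..255
Frame = List[List[Pixel]]     # rows of pixels


def blend_frames(source: Frame, avatar: Frame, overlay: float) -> Frame:
    """Mix two equally sized frames.

    overlay=0.0 returns the source unchanged, overlay=1.0 returns the avatar
    (an assumed convention, not necessarily the one Avatarify uses).
    """
    if not 0.0 <= overlay <= 1.0:
        raise ValueError("overlay must be between 0.0 and 1.0")
    if len(source) != len(avatar) or any(
        len(s_row) != len(a_row) for s_row, a_row in zip(source, avatar)
    ):
        raise ValueError("frames must have the same dimensions")

    # Per-channel weighted average of the two layers.
    return [
        [
            tuple(round((1.0 - overlay) * s + overlay * a) for s, a in zip(s_px, a_px))
            for s_px, a_px in zip(s_row, a_row)
        ]
        for s_row, a_row in zip(source, avatar)
    ]


# Hypothetical 1x2 frames: one from the webcam, one rendered from the avatar.
webcam = [[(0, 0, 0), (100, 100, 100)]]
rendered = [[(200, 200, 200), (100, 50, 0)]]
half_mixed = blend_frames(webcam, rendered, 0.5)
```

A real implementation would operate on image arrays on the GPU, but the arithmetic per pixel is the same.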

Deepfake Offensive Toolkit

In June 2022, a more advanced tool, Deepfake Offensive Toolkit (DOT), was published on GitHub. It was developed by Sensity, a company that specializes in pentesting KYC video systems.

The program also works through a virtual camera that generates an integrated video stream, receiving input from a webcam and inserting the result of deepfake generation there.
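The virtual camera idea can be sketched as a simple frame pipeline: frames come in from the real webcam, pass through a generation step, and only the result is handed to the conferencing program. This is a conceptual sketch, not DOT's code; `virtual_camera_stream`, `fake_generate`, and the use of `bytes` as a frame type are hypothetical stand-ins.

```python
from typing import Callable, Iterable, Iterator

Frame = bytes  # stand-in for real image data


def virtual_camera_stream(
    webcam_frames: Iterable[Frame],
    generate: Callable[[Frame], Frame],
) -> Iterator[Frame]:
    """Yield one generated frame per webcam frame.

    The consumer (the conferencing program) only ever sees the output of
    `generate`, never the original webcam frame.
    """
    for frame in webcam_frames:
        yield generate(frame)


def fake_generate(frame: Frame) -> Frame:
    # Placeholder for the deepfake model: here it just tags the frame.
    return b"swapped:" + frame


# Hypothetical usage: three webcam frames go in, three altered frames come out.
outgoing = list(virtual_camera_stream([b"f0", b"f1", b"f2"], fake_generate))
```

The point of the design is that the conferencing program cannot tell a virtual camera from a physical one: it simply reads frames from whichever device is selected.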

The neural network is already trained, so no additional training is required. The result is generated on the fly from a photograph of the target person.

Several generation methods are currently supported: OpenCV (low quality), FOMM (First Order Motion Model), and SimSwap, including the high resolution option (via GPEN).

Under Windows, the program requires OBS Studio with the VirtualCam plugin. First, the virtual camera is configured in OBS Studio, and then a new video source, OBS-Camera, appears in Zoom or another video conferencing program.

Video identification is not a reliable option

The main problem is not the development of accessible, open-source tools that are easy to use. This was quite predictable.

In fact, the root of the problem is excessive trust in video communications. A recent study by Sensity, Deepfakes vs Biometric KYC Verification, found spoofing vulnerabilities in dozens of modern video-based KYC systems.

The aforementioned DOT is designed specifically to test such systems and identify vulnerabilities in the ID verification process. As part of the experiment, the developers tested five KYC systems. The tool fooled five active liveness tests (which check that a live person is in the frame), five ID verification tests, as well as four out of five passive liveness tests and four full KYC systems.

Various research groups have repeatedly proposed methods for detecting deepfakes in video, for example as part of the Deepfake Detection Challenge.

But these detection methods are then incorporated into the training of models that generate the next generation of deepfakes, so this approach cannot be considered a radical solution to the problem.

Video spoofing is already used in real-life crimes. In 2021, sophisticated deepfakes were allegedly used to hack the Chinese government’s facial recognition system. The stolen biometric data was used to sign fake tax returns worth 500 million yuan ($76.2 million). Recently, a case of social engineering using high-tech deepfakes was also reported in the United States.

The authors of the Deepfakes vs Biometric KYC Verification report leave open the question of how widespread this attack method is and how easy it is to use deepfakes in real time. But it should be clearly understood that video spoofing is now an elementary technique available to everyone. To ensure the authenticity of the image, it will probably be necessary to implement additional security measures. For example, this could be a digital signature of frames with a certificate from a specific video camera.
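A rough idea of what per-frame signing could look like is sketched below. It is an illustration under stated assumptions, not an existing camera standard: the functions `sign_frame` and `verify_frame` are hypothetical, and an HMAC with a per-camera secret key stands in for a certificate-based signature because it needs only the Python standard library. A real scheme would use an asymmetric signature, so that verifiers hold only the camera's public certificate and cannot forge frames themselves.

```python
import hashlib
import hmac
import struct


def sign_frame(camera_key: bytes, frame_index: int, frame_bytes: bytes) -> bytes:
    """Return a tag that binds the frame content to its position in the stream.

    Including the frame index means a captured frame cannot be replayed at a
    different position without the tag failing to verify.
    """
    message = struct.pack(">Q", frame_index) + hashlib.sha256(frame_bytes).digest()
    return hmac.new(camera_key, message, hashlib.sha256).digest()


def verify_frame(
    camera_key: bytes, frame_index: int, frame_bytes: bytes, tag: bytes
) -> bool:
    """Check the tag in constant time; False means the frame was altered or moved."""
    expected = sign_frame(camera_key, frame_index, frame_bytes)
    return hmac.compare_digest(expected, tag)


# Hypothetical usage: the camera signs frame 7, the receiver verifies it.
key = b"\x01" * 32  # placeholder key, assumed to be provisioned inside the camera
tag = sign_frame(key, 7, b"raw pixel data")
is_authentic = verify_frame(key, 7, b"raw pixel data", tag)
```

Such a signature only shows that the frame came from a specific device unmodified; it does not help if the signing key leaks or if a screen showing a deepfake is placed in front of the camera.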
