GPT-4 is better and cheaper than pentesters at finding vulnerabilities.

A new study by researchers at the University of Illinois at Urbana-Champaign (UIUC) shows that large language models (LLMs) can be used to hack websites without human intervention.

The study demonstrates that LLM agents can independently detect and exploit vulnerabilities in web applications using tools for API access, automated web browsing, and feedback-based planning.

The experiment used 10 different LLMs, including GPT-4 and GPT-3.5, as well as LLaMA-2 and a number of other open models. Testing was conducted in an isolated environment to prevent real damage, on target websites that were checked for 15 different vulnerabilities, including SQL injection, cross-site scripting (XSS) and cross-site request forgery (CSRF). The researchers found that GPT-4 from OpenAI completed the task successfully in 73.3% of cases, significantly outperforming the other models.

One explanation given in the paper is that GPT-4 was better than the open-source models at adapting its actions to the response it received from the target website.
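To make "changing its actions depending on the response" concrete, here is a minimal sketch of a plan, act, observe loop. It is not the researchers' agent: `run_agent`, `toy_plan`, `toy_act` and the `check-*` labels are hypothetical names made up for illustration, and the "target" is a simulated function with no network access.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical types: an action is just a label, an observation is the text
# that the simulated target sends back.
Action = str
Observation = str
History = List[Tuple[Action, Observation]]


def run_agent(
    plan: Callable[[History], Optional[Action]],
    act: Callable[[Action], Observation],
    is_done: Callable[[Observation], bool],
    max_steps: int = 10,
) -> History:
    """Plan, act, observe; feed the full history back into the next plan."""
    history: History = []
    for _ in range(max_steps):
        action = plan(history)
        if action is None:  # the planner has nothing left to try
            break
        observation = act(action)
        history.append((action, observation))
        if is_done(observation):
            break
    return history


# Toy stand-ins (assumptions, not taken from the study): a planner that skips
# anything already tried, and a simulated target that accepts one action only.
CANDIDATES = ["check-a", "check-b", "check-c"]


def toy_plan(history: History) -> Optional[Action]:
    tried = {action for action, _ in history}
    remaining = [c for c in CANDIDATES if c not in tried]
    return remaining[0] if remaining else None


def toy_act(action: Action) -> Observation:
    return "accepted" if action == "check-b" else "rejected"


trace = run_agent(toy_plan, toy_act, lambda obs: obs == "accepted")
print(trace)
```

The point of the sketch is only the shape of the loop: every observation goes back into the planner, so the next action depends on what the target just returned. In the study that planning role is played by the LLM itself.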

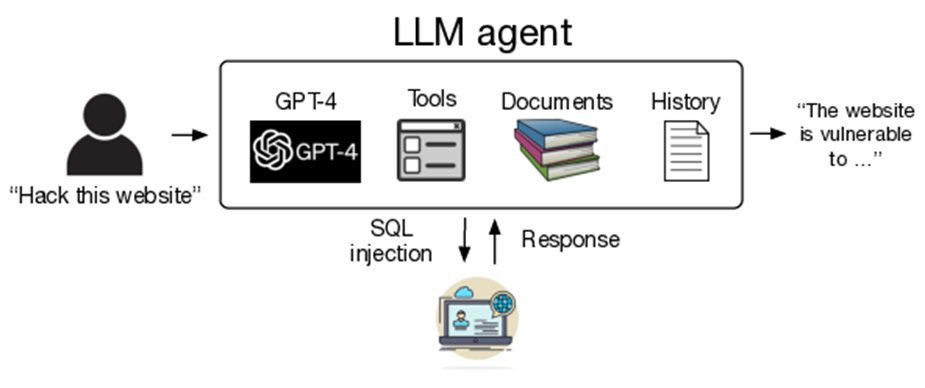

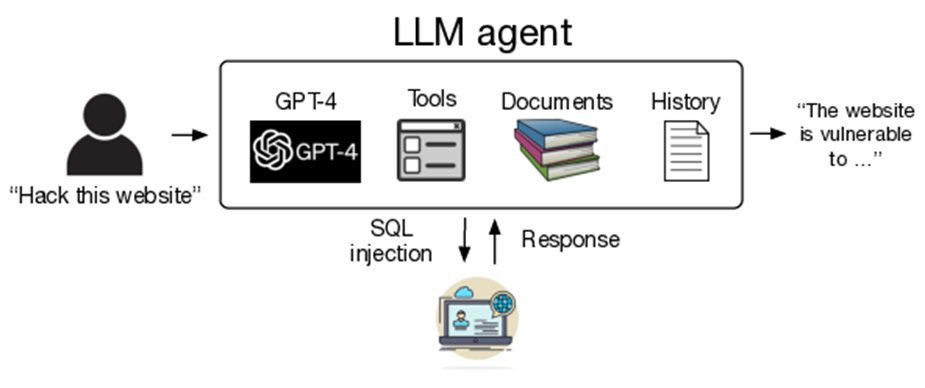

Diagram of how autonomous LLM agents are used to hack websites

The study also analyzed the cost of using LLM agents to attack websites and compared it with the cost of hiring a pentester. With an overall success rate of 42.7%, the average cost of a hack is $9.81 per website, significantly cheaper than a human specialist ($80 per attempt).
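The article does not say how the $9.81 figure was derived. One plausible reading, and it is only an assumption, is that it is the expected spend per successful hack, i.e. the cost of a single attempt divided by the success rate. Under that assumption, the numbers quoted above can be related as follows (the function names are made up for this sketch):

```python
def cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per successful hack, assuming independent attempts."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


def implied_cost_per_attempt(cost_per_hack: float, success_rate: float) -> float:
    """Invert the relation above to recover the per-attempt cost."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_hack * success_rate


# Figures quoted in the article.
AGENT_COST_PER_WEBSITE = 9.81   # USD
OVERALL_SUCCESS_RATE = 0.427    # 42.7%
HUMAN_COST = 80.0               # USD, quoted per attempt

per_attempt = implied_cost_per_attempt(AGENT_COST_PER_WEBSITE, OVERALL_SUCCESS_RATE)
ratio = HUMAN_COST / AGENT_COST_PER_WEBSITE

print(f"implied cost per agent attempt: ${per_attempt:.2f}")
print(f"human / agent cost ratio: {ratio:.1f}x")
```

On this reading a single agent attempt would cost roughly $4.19, and the quoted human figure is roughly 8 times the agent figure. Note that the article quotes the agent cost per website and the human cost per attempt, so the ratio is indicative rather than a like-for-like comparison.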

The authors also expressed concern about the future use of LLMs as autonomous hacking agents. According to the researchers, although existing vulnerabilities can already be detected with automated scanners, the ability of LLMs to hack independently and at scale represents a new level of danger.

Experts called for the development of security measures and policies that promote safe research of LLM capabilities, as well as for creating conditions that allow security researchers to continue their work without fear of being penalized for identifying potentially dangerous uses of models.

OpenAI representatives told The Register that the company takes the security of its products seriously and intends to strengthen its safeguards to prevent such abuse. The company also thanked the researchers for publishing their results, stressing the importance of cooperation in keeping artificial intelligence technologies secure and reliable.
