Carding 4 Carders

An open-source tool threatens neural networks and the reputations of AI companies.

A group of scientists from the University of Chicago presented a study on Nightshade, a data "poisoning" technique designed to disrupt the training of AI models. The tool was created to protect the works of visual artists and publishers from being used to train AI.

The open-source tool, described as a "poison pill", alters images in ways the human eye cannot detect, yet those changes disrupt the training of an AI model. Many image generation models, with the exception of those from Adobe and Getty Images, are trained on datasets scraped from the Internet without the authors' permission.
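To make "unnoticed by the human eye" concrete, here is a minimal sketch of a bounded per-pixel change. It is not Nightshade's method: the real tool computes its changes deliberately, whereas this toy uses random noise purely to show what a small change budget looks like. The function names and the `epsilon` value are assumptions made for illustration.

```python
import random

def perturb(pixels, epsilon=4, seed=0):
    """Return a copy of `pixels` (ints in 0..255) with each value
    shifted by at most `epsilon`. Random noise is a stand-in here;
    an actual poisoning tool would choose the shifts on purpose."""
    rng = random.Random(seed)
    out = []
    for p in pixels:
        delta = rng.randint(-epsilon, epsilon)
        # Clamp so the result is still a valid 8-bit pixel value.
        out.append(min(255, max(0, p + delta)))
    return out

def max_abs_diff(a, b):
    """Largest per-pixel difference between two images of equal size."""
    return max(abs(x - y) for x, y in zip(a, b))

# Hypothetical 8-pixel grayscale "image".
original = [0, 12, 128, 200, 255, 64, 33, 90]
modified = perturb(original)

print(original)
print(modified)
print("largest change:", max_abs_diff(original, modified))
```

Out of 255 possible levels, a shift of a few units per pixel is hard to spot by eye, which is the general idea behind an "invisible" modification.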

Many researchers rely on data taken from the Internet without permission, which raises ethical concerns. This approach has made it possible to create powerful models such as Stable Diffusion. Some research institutions believe that data scraping for AI training should remain protected as fair use.

However, the Nightshade team argues that commercial use and research use are two different things. The researchers hope that their technology will force AI companies to license image datasets. According to them, the goal of the tool is to balance the relationship between AI model developers and content creators.

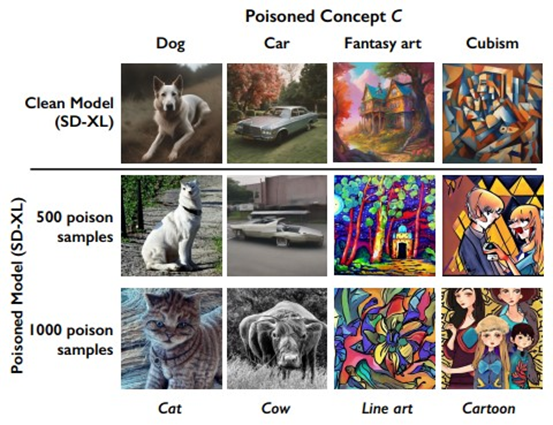

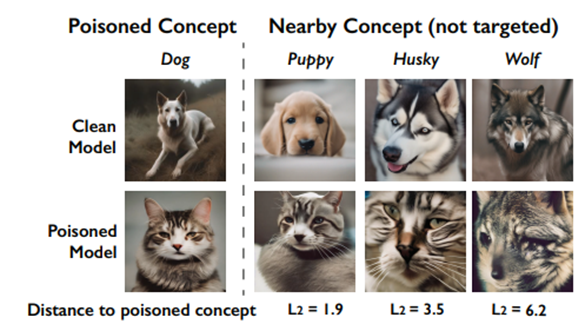

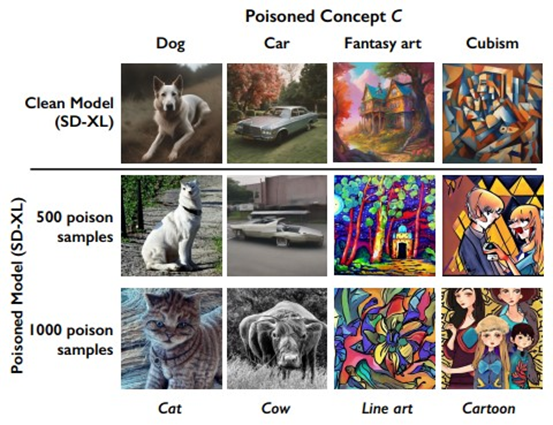

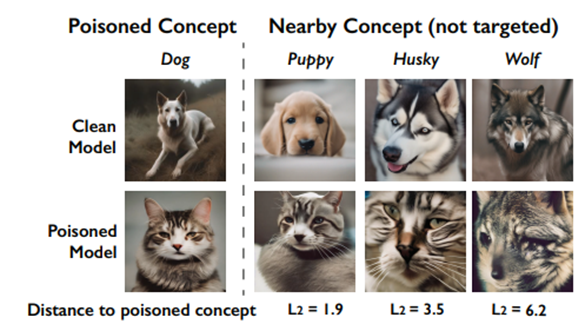

Nightshade builds on Glaze, an established University of Chicago tool that alters digital art in ways that confuse AI. In practice, Nightshade can change an image so that the AI sees a cat where a person sees a dog. In tests, the researchers found that after a model absorbed hundreds of "contaminated" images, it began to generate distorted images of dogs.
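The effect of accumulating "contaminated" images can be illustrated with a toy model. This is only a sketch under heavy assumptions: the "model" learns the concept "dog" as the average of two-number feature vectors captioned "dog", and the vectors `DOG` and `CAT`, the function names, and the sample counts are all hypothetical. Real image generators and the actual Nightshade procedure are far more complex.

```python
def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return tuple(sum(v[i] for v in vectors) / n for i in range(len(vectors[0])))

def distance(a, b):
    """Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

DOG = (1.0, 0.0)  # hypothetical "dog-like" features
CAT = (0.0, 1.0)  # hypothetical "cat-like" features

def train_dog_concept(n_clean, n_poisoned):
    """Learn 'dog' as the mean of every sample captioned 'dog'.
    Poisoned samples carry the 'dog' caption but look like a cat
    to the model."""
    samples = [DOG] * n_clean + [CAT] * n_poisoned
    return centroid(samples)

for n_poisoned in (0, 100, 500):
    learned = train_dog_concept(n_clean=300, n_poisoned=n_poisoned)
    nearer = "dog" if distance(learned, DOG) < distance(learned, CAT) else "cat"
    print(f"{n_poisoned} poisoned -> learned 'dog' = {learned}, nearer to {nearer}")
```

In this toy, a small share of poisoned samples only nudges the learned concept, while a large enough share drags "dog" closer to "cat" than to its original meaning.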

Examples of images generated on request by a "clean" model and by a model poisoned with Nightshade

Defending against this kind of data poisoning may prove difficult for AI developers. Although the researchers admit that their tool can be used maliciously, the main goal of the work is to return power to artists.

Example of images generated after Nightshade was applied

The development of technologies like Nightshade could trigger an arms race between researchers and AI companies. But for many artists, what matters is that their work is not used without their permission. Notably, news of Nightshade's creation sparked discussions on social networks between artists and AI supporters.

Nightshade will not affect existing models, since they have already been trained on the original "unpolluted" images. But models' handling of new art styles and photos of current events may suffer if the technique becomes widely used.