A technique for deceiving the cameras of autonomous cars shows a high success rate.

A group of scientists from Singapore has developed a way to interfere with autonomous cars that use computer vision to recognize road signs. The new technique, GhostStripe, could be dangerous for drivers of Tesla and Baidu Apollo vehicles.

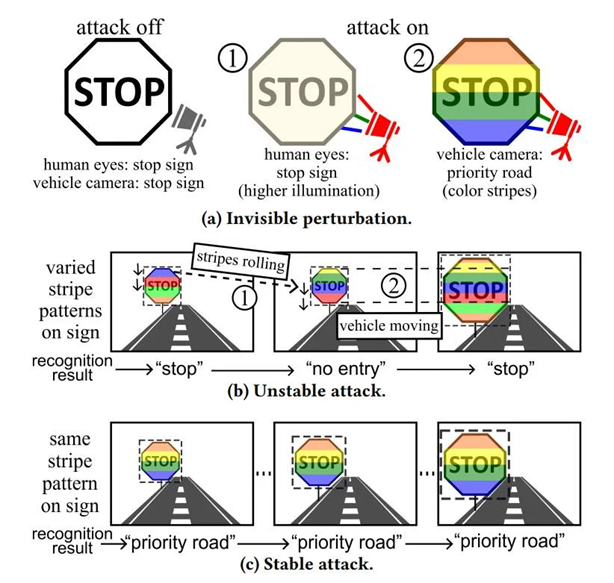

The main idea behind GhostStripe is to use LEDs to project light patterns onto road signs. The patterns are invisible to the human eye but confuse car cameras. The LEDs flash different colors rapidly while the camera is capturing a frame, which distorts the resulting image.

The distortion exploits the rolling electronic shutter found in CMOS cameras. Such cameras do not capture the whole frame at once but read it out line by line, so LEDs that flicker during the readout give each line a different tint. The result is an image that does not match reality: for example, the red of a stop sign may look different on each scan line.
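A toy model shows why line-by-line (rolling shutter) readout turns flicker that is too fast to see into visible stripes. It is a sketch under simplifying assumptions: each row is sampled at a single instant, exposure time and ambient light are ignored, and the LED color alone decides the row color. The function names, the 10 µs line time, and the 10 kHz red/green cycle are illustrative values, not parameters from the GhostStripe study.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
RED: Color = (255, 0, 0)
GREEN: Color = (0, 255, 0)


def led_color_at(t_us: float, schedule: List[Tuple[float, Color]], period_us: float) -> Color:
    """Color of a repeating LED pattern at time t_us.

    schedule holds (offset_us, color) pairs sorted by offset, starting at 0.0;
    the whole pattern repeats every period_us.
    """
    phase = t_us % period_us
    current = schedule[0][1]
    for offset, color in schedule:
        if phase >= offset:
            current = color
        else:
            break
    return current


def capture(rows: int, line_time_us: float, frame_start_us: float,
            schedule: List[Tuple[float, Color]], period_us: float,
            rolling: bool = True) -> List[Color]:
    """Color recorded on each sensor row.

    Rolling shutter: row r is sampled at frame_start + r * line_time.
    Global shutter: every row is sampled at frame_start.
    """
    return [
        led_color_at(frame_start_us + (r * line_time_us if rolling else 0.0), schedule, period_us)
        for r in range(rows)
    ]


if __name__ == "__main__":
    # LED alternates red/green every 50 µs (a 10 kHz cycle); rows are read 10 µs apart.
    pattern = [(0.0, RED), (50.0, GREEN)]
    frame = capture(20, 10.0, 0.0, pattern, 100.0)
    print("".join("R" if c == RED else "G" for c in frame))  # RRRRRGGGGGRRRRRGGGGG
    print(set(capture(20, 10.0, 0.0, pattern, 100.0, rolling=False)))  # {(255, 0, 0)}
```

To a human observer a 10 kHz flicker blends into a steady color, but the rolling-shutter camera records alternating bands, while a sensor that samples all rows at once sees a single uniform color.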

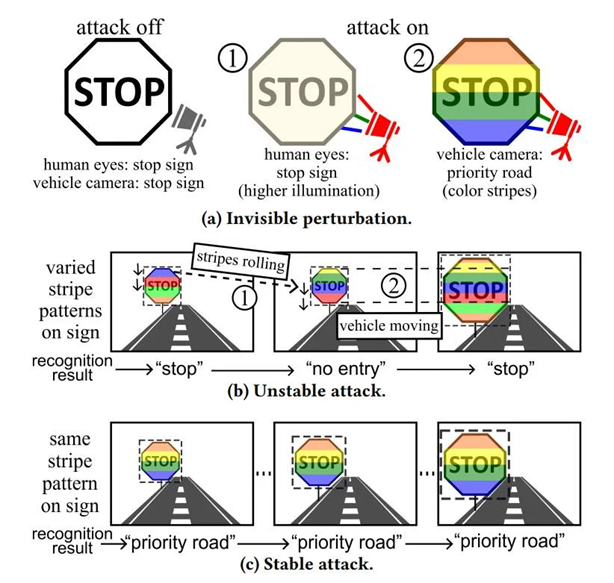

When such a distorted image reaches the car's classifier, which is based on deep neural networks, the system fails to recognize the sign and does not respond to it properly. Similar attacks were already known, but the research team achieved stable and repeatable results, which makes the attack practical under real-world conditions.

The researchers developed two versions of the attack:

- GhostStripe1 does not require access to the vehicle. It uses a tracking system to monitor the vehicle's position in real time, which lets the attacker dynamically adjust the LED flicker so that the sign is not recognized.

- GhostStripe2 requires physical access to the vehicle. A transducer is installed on the camera's power wire to detect the moments when a frame is captured, so the attack can be timed precisely. This makes it possible to target a specific vehicle and control the outcome of sign recognition.
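The difference between the two variants comes down to how precisely the attacker knows when each row is read out. The sketch below is a hypothetical illustration, not the researchers' code: `plan_switches`, `observe`, and all timing values are assumptions. Given a frame start time and a line readout time, it schedules LED color switches so that chosen rows record chosen colors. In GhostStripe2 the frame timing would come from the transducer on the camera's power wire; GhostStripe1 has no such signal.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
Event = Tuple[float, Color]  # (switch time in µs, LED color from that time on)
RED: Color = (255, 0, 0)
GREEN: Color = (0, 255, 0)


def plan_switches(desired_rows: List[Color], frame_start_us: float,
                  line_time_us: float) -> List[Event]:
    """Hypothetical planner: emit a color switch at the readout time of every row
    whose desired color differs from the previous row's."""
    events: List[Event] = []
    for r, color in enumerate(desired_rows):
        if not events or events[-1][1] != color:
            events.append((frame_start_us + r * line_time_us, color))
    return events


def color_at(t_us: float, events: List[Event]) -> Color:
    """LED color at t_us (the first color is assumed to show before the first event)."""
    current = events[0][1]
    for start, color in events:
        if t_us >= start:
            current = color
        else:
            break
    return current


def observe(rows: int, frame_start_us: float, line_time_us: float,
            events: List[Event]) -> List[Color]:
    """Rolling-shutter readout: row r is sampled at frame_start + r * line_time."""
    return [color_at(frame_start_us + r * line_time_us, events) for r in range(rows)]


if __name__ == "__main__":
    desired = [RED] * 4 + [GREEN] * 4 + [RED] * 4
    events = plan_switches(desired, frame_start_us=1000.0, line_time_us=10.0)
    print(events)
    # Timing known exactly: the planned stripe lands where intended.
    print(observe(12, 1000.0, 10.0, events) == desired)  # True
    # Frame actually started 20 µs later than assumed: the stripe shifts two rows earlier.
    late = observe(12, 1020.0, 10.0, events)
    print("".join("R" if c == RED else "G" for c in late))  # RRGGGGRRRRRR
```

In this toy model, a 20 µs error in the assumed frame start moves the stripe by two rows, which is one way to see why direct access to the camera's timing gives an attacker tighter control over the result.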

The team tested the system on real roads using the Leopard Imaging AR023ZWDR camera, which is used in Baidu Apollo hardware. Tests were conducted on stop signs, Give Way signs, and speed limit signs. GhostStripe1 achieved a 94% success rate, and GhostStripe2 a 97% success rate.

Strong ambient light reduces the effectiveness of the attack, so, as the researchers noted, attackers must choose the time and place carefully.
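Why ambient light matters can be seen with a simplified single-channel model, which is an assumption for illustration and not the researchers' analysis. Let $A$ be the ambient light reflected by the sign and $L$ the extra light the LED adds during its "on" phase. Rows read out while the LED is on record $A + L$, the others record $A$, so the contrast of the stripes is:

```latex
C = \frac{I_{\max} - I_{\min}}{I_{\max} + I_{\min}}
  = \frac{(A + L) - A}{(A + L) + A}
  = \frac{L}{2A + L}
```

For a fixed LED output, $C$ shrinks as $A$ grows: with $A = L$ the contrast is $1/3$, and with $A = 10L$ it falls to $1/21$ (about 0.05), so in this model the stripes become much fainter in bright daylight.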

There are countermeasures that can reduce the vulnerability. For example, rolling-shutter CMOS cameras can be replaced with global-shutter sensors that capture the entire frame at once, or the line-scan timing can be randomized. Adding more cameras can also lower the chance of a successful attack or force attackers to use more sophisticated methods. Another option is to train the neural networks to recognize such attacks.
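The two sensor-side countermeasures, a sensor that captures the whole frame at once and randomized line scanning, can be illustrated with a toy rolling-shutter model. This is a hypothetical sketch, not a vendor or researcher implementation: `random_line_time`, `capture_frame`, and the timing values are assumptions. The attacker's LED schedule is fixed for a 10 µs line time; the camera picks a secret line time for each frame, so the stripes stretch or shrink unpredictably, while a global shutter samples every row at the same instant.

```python
import random
from typing import List, Tuple

Color = Tuple[int, int, int]
Event = Tuple[float, Color]  # (switch time in µs, LED color from that time on)
RED: Color = (255, 0, 0)
GREEN: Color = (0, 255, 0)


def color_at(t_us: float, events: List[Event]) -> Color:
    """LED color at t_us, given switch events sorted by time."""
    current = events[0][1]
    for start, color in events:
        if t_us >= start:
            current = color
        else:
            break
    return current


def capture_frame(rows: int, frame_start_us: float, line_time_us: float,
                  events: List[Event], global_shutter: bool = False) -> List[Color]:
    """Rolling shutter samples row r at frame_start + r * line_time;
    a global shutter samples every row at frame_start."""
    return [
        color_at(frame_start_us + (0.0 if global_shutter else r * line_time_us), events)
        for r in range(rows)
    ]


def random_line_time(nominal_us: float, spread_us: float, rng: random.Random) -> float:
    """Hypothetical countermeasure: pick a new, secret line readout time each frame."""
    return nominal_us + rng.uniform(-spread_us, spread_us)


if __name__ == "__main__":
    # Attacker's schedule, tuned for a 10 µs line time and a frame starting at 1000 µs.
    events = [(1000.0, RED), (1040.0, GREEN), (1080.0, RED)]
    rng = random.Random(42)
    for _ in range(3):
        frame = capture_frame(12, 1000.0, random_line_time(10.0, 5.0, rng), events)
        print("".join("R" if c == RED else "G" for c in frame))
    # With a global shutter every row sees the same instant, so no stripes appear.
    print(capture_frame(12, 1000.0, 10.0, events, global_shutter=True) == [RED] * 12)  # True
```

In this model, randomizing the line time does not remove the stripes; it only makes their position and width unpredictable to the attacker, whereas a global shutter removes them entirely.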