Neural networks are a powerful tool for data processing and business process optimization. Companies use AI to analyze and classify data, compile statistics, build forecasts, recognize faces in video surveillance and security systems, and interact with customers. Generative neural networks create texts, images, and videos. In medicine, AI helps diagnose diseases correctly. This widespread use makes neural networks an attractive target for attackers.

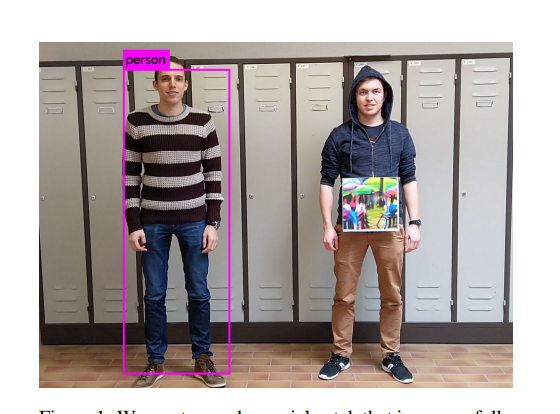

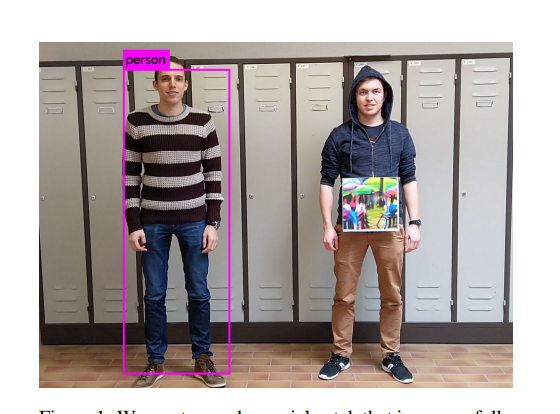

The neural network failed to identify a person because of a bright picture on his clothes. Illustration from the KU Leuven study [2].

Dmitry Zubarev.

Deputy Director of the Analytical Center of the UCSB.

The main trend I would like to note is that people have started talking about attacks on neural networks. It has become clear to specialists that using neural networks can also carry information security risks. These risks are now being assessed, and attacks on neural networks have been classified. In particular, a separate OWASP Top 10 list for large language model applications has appeared.

In this article, we will consider the main types of attacks on neural networks, the goals of attackers, and methods of protection against existing threats.

Main types of attacks on neural networks

Adversarial attacks are special interactions with artificial neural networks that target algorithm vulnerabilities: they distort input data in such a way that the model produces an incorrect result. During development, these attacks are used to assess the vulnerability of neural networks and understand their limitations.

Label corruption. An attacker gains access to the data on which the neural network is trained and adds objects with incorrect labels to it.

Training data poisoning. The attacker adds non-standard objects to the training set, which reduces the quality of the neural network. In this case the labels themselves may be correct.

Dmitry Zubarev.

Deputy Director of the Analytical Center of the UCSB.

The neural network's ability to recognize may be impaired by feeding it a certain amount of incorrect data during the training process. If this happens, for example, to a face recognition system, it may start making mistakes and let an intruder into the protected area.
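The effect of corrupted labels can be illustrated with a deliberately tiny example. The sketch below is not from the article: it assumes a one-dimensional nearest-centroid classifier, where label 0 means "admit" and label 1 means "reject", and all names (`fit_threshold`, `predict`, the sample values) are hypothetical. Relabeling two "reject" samples as "admit" moves the decision threshold enough that a borderline input is let through.

```python
def mean(values):
    return sum(values) / len(values)

def fit_threshold(xs, ys):
    """Fit a 1-D nearest-centroid classifier and return its decision threshold."""
    c0 = mean([x for x, y in zip(xs, ys) if y == 0])
    c1 = mean([x for x, y in zip(xs, ys) if y == 1])
    return (c0 + c1) / 2

def predict(threshold, x):
    """Return 1 ("reject") above the threshold and 0 ("admit") otherwise."""
    return 1 if x > threshold else 0

# Toy training set: low values are legitimate, high values are intruders.
xs = [0, 1, 2, 3, 7, 8, 9, 10]
clean_labels = [0, 0, 0, 0, 1, 1, 1, 1]

# The attacker relabels two intruder samples as legitimate.
poisoned_labels = list(clean_labels)
poisoned_labels[6] = 0
poisoned_labels[7] = 0

clean_threshold = fit_threshold(xs, clean_labels)
poisoned_threshold = fit_threshold(xs, poisoned_labels)

borderline = 5.5
print(predict(clean_threshold, borderline))     # rejected by the clean model
print(predict(poisoned_threshold, borderline))  # admitted by the poisoned model
```

Real systems use far richer models, but the mechanism is the same: the training procedure trusts the labels, so whoever controls the labels controls where the decision boundary ends up.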

White-box attack. The attacker has full information about the target model: its architecture, parameters, and sometimes the training data. With this knowledge, the attacker crafts inputs, such as malware samples, that mask or amplify the features the model relies on.
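A common textbook example of a white-box attack is the fast gradient sign method (FGSM), which the article does not name explicitly. The sketch below assumes a toy logistic regression model whose weights are fully known to the attacker; the weights, the input, and the function names are made up for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm(weights, bias, x, y_true, epsilon):
    """Take one FGSM step: move every feature by epsilon in the direction
    that increases the cross-entropy loss on the true label."""
    p = predict_proba(weights, bias, x)
    # For logistic regression, d(loss)/d(x_i) = (p - y_true) * w_i.
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Hypothetical model parameters known to the attacker (white-box setting).
weights = [2.0, -1.0, 0.5]
bias = 0.0

x = [0.3, 0.2, 0.1]                       # classified as class 1
x_adv = fgsm(weights, bias, x, y_true=1, epsilon=0.2)

print(predict_proba(weights, bias, x))      # above 0.5
print(predict_proba(weights, bias, x_adv))  # below 0.5
```

Each feature moves by at most `epsilon`, yet the predicted class flips, because the attacker uses the known weights to push every feature in the most damaging direction at once.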

Black-box attack. The attacker has no access to the model's internals, so they resort to trial and error: they modify malicious files and submit them to the model until one is misclassified.
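The trial-and-error loop of a black-box attack can be sketched as follows. This is an illustration under stated assumptions, not a real attack tool: the "detector" is a toy linear scorer hidden inside a closure, the attacker sees only the returned label, and the search strategy (changing one feature at a time by growing amounts) is just one simple possibility.

```python
def make_black_box():
    """Build a toy detector whose internals the attacker never sees."""
    hidden_weights = [1.0, 2.0, -1.0]
    hidden_threshold = 1.0

    def classify(x):
        score = sum(w * xi for w, xi in zip(hidden_weights, x))
        return 1 if score > hidden_threshold else 0  # 1 = "malicious"

    return classify

def query_attack(classify, x, step=0.1, max_scale=10):
    """Change one feature at a time by growing amounts until the label flips.
    Returns the evading input and the number of queries spent, or (None, n)."""
    queries = 0
    for scale in range(1, max_scale + 1):
        for i in range(len(x)):
            for direction in (1, -1):
                candidate = list(x)
                candidate[i] += direction * scale * step
                queries += 1
                if classify(candidate) == 0:
                    return candidate, queries
    return None, queries

classify = make_black_box()
sample = [0.5, 0.5, 0.2]          # initially flagged as malicious
evading, used = query_attack(classify, sample)
print(evading, used)
```

Because every attempt costs a query, rate limiting and monitoring for bursts of near-identical requests are natural countermeasures against this style of attack.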

Ksenia Akhrameeva.

PhD, Head of the Laboratory for Development and Promotion of Cybersecurity Competencies at Gazinformservice.

Attackers are interested in getting into the neural network in use and retraining it for their own purposes, for example by feeding it information that is correct but slightly distorted and gradually increasing the degree of distortion until the neural network accepts a completely distorted file as legitimate. In this way, attackers can, for example, introduce obscene language into a bot's speech, which can damage the company's reputation, or distort the data the company analyzes. A company that relies on a development strategy produced by the neural network will then make the wrong decision and suffer financial losses.

Evasion attacks. The attacker crafts input data so that the already trained neural network produces an incorrect answer.

Inference attacks. The attacker extracts sensitive information from a neural network without direct access to the model, either to learn about the data it was trained on or to reconstruct the model itself.

The potential instability of AI gives attackers many opportunities to attack neural networks. For example, you can trick a neural network by adding additional elements to an image.

Attackers' goals and consequences

Attacks on neural networks open up a new space of action for attackers.

They can learn the architecture of the model, obtain the data on which the model was trained, and copy the network functionality.

One of the serious threats to companies that use neural networks is the leakage of confidential information through trained models. By sending specially selected objects to the system, an attacker can obtain information about the objects that were in the training set. This is especially dangerous when the neural network processes banking, biometric, or other sensitive data.
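One way such leakage can happen is a confidence-based membership inference attack: a model that has memorized its training data tends to be more confident on records it has already seen. The sketch below is a simplified illustration, assuming a toy model that memorizes its training points outright; the function names, the data, and the 0.9 threshold are hypothetical.

```python
def make_overfit_model(training_points):
    """Toy model that memorizes its training data: its confidence is highest
    on points it was trained on and falls off with distance from them."""
    def confidence(x):
        nearest = min(abs(x - t) for t in training_points)
        return 1.0 / (1.0 + nearest)
    return confidence

def infer_membership(confidence, candidates, threshold=0.9):
    """Guess which candidates were in the training set by checking
    whether the model is unusually confident about them."""
    return [x for x in candidates if confidence(x) >= threshold]

# The attacker can only call `model`; the training data stays hidden.
model = make_overfit_model([1.0, 4.0, 9.0])

candidates = [1.0, 2.5, 9.0, 6.0]
suspected = infer_membership(model, candidates)
print(suspected)  # [1.0, 9.0]
```

The attacker never sees the training set, only the confidence values, yet recovers which candidate records were used for training. Returning only labels, or rounded confidences, reduces this signal.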

Dmitry Zubarev.

Deputy Director of the Analytical Center of the UCSB.

The threats arising from the use of neural networks can be divided into two large groups. The first group includes threats related to the fact that a neural network usually does not exist on its own, but is part of an information system that includes various components. This makes it possible not only to attack the neural network through these components, but also, conversely, to attack the system components through the neural network. One of the most striking examples is the use of a language model for unauthorized access to internal network resources: the attacker "convinces" the neural network to access servers that are inaccessible from the outside. As a result, the attacker can gain access to confidential information located on internal servers.

In addition to stealing valuable information, attackers can use neural networks for fraud, to damage a company's reputation, and so on. Moreover, because AI is actively used for military purposes, the potential scale of the threat grows sharply. For example, the media reported that during virtual tests, an AI drone “killed” its operator because he interfered with the task. Although the US Air Force later officially denied this report, such threats could become real through the actions of attackers.

Dmitry Zubarev.

Deputy Director of the Analytical Center of the UCSB.

The second group includes threats directly related to the applied tasks of the neural network. Typically, such threats involve the influence of an attacker on the content generated by the model or on the model's ability to recognize.

For example, by sending special requests, attackers can force the neural network to generate incorrect responses, which can later lead users of the system to make incorrect decisions. In addition, companies may face reputational risks related to the ethical aspects of neural network responses.

How neural networks are protected

Given the prevalence of neural networks in various spheres of life and the catastrophic consequences that attacks on them can lead to, protecting neural networks is becoming a critical task for ensuring not only informational, but also physical security of people.

Ksenia Akhrameeva.

PhD, Head of the Laboratory for Development and Promotion of Cybersecurity Competencies at Gazinformservice.

Information security products based on machine learning are already used to protect neural networks, but they are built on completely different methods and algorithms that rely on special signatures and metrics. Beyond protecting the neural network and its training, it is worth comprehensively protecting the IT infrastructure so that illegitimate users cannot get into the network and commit their crimes.

The following methods can be used to protect neural networks from attacks:

- Adversarial training, which involves training a model on a dataset that includes specially modified images, making the model more resilient to attacks.

- Defensive distillation, which trains a second model on the softened output probabilities of the first, making it less sensitive to small input perturbations.

- Generating adversarial images that can be used to train a more robust network.

- Adding noise to the original data to improve the robustness of the model.

- Gradient masking, which hides or perturbs the model's gradients so that white-box attacks become less effective.

These methods help to increase the reliability of neural networks and reduce the likelihood of successful attacks.
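Two of the listed methods, adding noise and generating adversarial examples for training, come down to augmenting the training set. The sketch below shows only that data-preparation step, not a full training loop. It assumes a linear model with known weights (so the worst-case direction is simply the sign of each weight); the function names and parameter values are hypothetical.

```python
import random

def add_noise(samples, labels, sigma=0.05, copies=2, seed=0):
    """Append `copies` Gaussian-noised versions of every sample, keeping its label."""
    rng = random.Random(seed)
    out_x, out_y = list(samples), list(labels)
    for x, y in zip(samples, labels):
        for _ in range(copies):
            out_x.append([xi + rng.gauss(0.0, sigma) for xi in x])
            out_y.append(y)
    return out_x, out_y

def add_adversarial(samples, labels, weights, epsilon=0.1):
    """Append one sign-of-gradient adversarial copy of every sample,
    labeled with the correct class, for a linear (logistic) model."""
    out_x, out_y = list(samples), list(labels)
    for x, y in zip(samples, labels):
        # The loss on label 1 grows when the score w.x drops, and the loss
        # on label 0 grows when it rises, so the step is -sign(w) or +sign(w).
        direction = -1 if y == 1 else 1
        adv = [xi + direction * epsilon * ((w > 0) - (w < 0))
               for xi, w in zip(x, weights)]
        out_x.append(adv)
        out_y.append(y)
    return out_x, out_y

samples = [[0.3, 0.2], [-0.4, 0.1]]
labels = [1, 0]
weights = [2.0, -1.0]  # assumed current model weights

noisy_x, noisy_y = add_noise(samples, labels)
adv_x, adv_y = add_adversarial(samples, labels, weights)
print(len(noisy_x), len(adv_x))  # 6 4
```

In practice the adversarial copies are regenerated as the model's weights change during training, since examples crafted against an earlier version of the model gradually stop being worst-case.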

Dmitry Zubarev.

Deputy Director of the Analytical Center of the UCSB.

Among the features of neural network protection technologies, I would highlight the development of approaches aimed at minimizing risks at the stage of creating and training the model. Such approaches involve specific training methods, as well as special requirements for data sources. In addition, developers and owners of systems using neural networks are beginning to integrate security analysis into the processes of their development and operation. During the security analysis of such systems, specialists check the possibility of attacks on them and develop recommendations for eliminating the shortcomings that a potential intruder can use.

Developers need to anticipate risks and implement security protocols during the model training phase, provide database access control, network integrity control, and user authentication to prevent internal and external threats.

Conclusion

Attacks on neural networks pose a serious threat to artificial intelligence systems. The main goals of attackers include changing the model's behavior, violating data privacy, copying the network's functionality, and causing physical harm in the real world.

Understanding the targets and methods of attacks is essential for developing effective defenses. Various methods are used to mitigate threats: adversarial training, defensive distillation, generating adversarial images, and adding noise. In the future, we expect further development of defense methods and a deeper understanding of the vulnerabilities of neural network models, which will make them more reliable and secure.

Source