Recorded Future specialists have explained how AI could be used in a new level of attacks.

A new report from Recorded Future, an information technology security company, describes how large language models (LLMs) can be used to create self-improving malware that can circumvent YARA rules.

Experiments have shown that generative AI can effectively modify malware source code to evade YARA-based detection. Cybercriminals are already exploring generative AI to generate malicious code fragments, write phishing emails, and conduct reconnaissance on potential targets.

As an example, the company asked an LLM to modify the source code of the well-known STEELHOOK malware so that it would bypass detection while keeping its functionality and containing no syntax errors. The modified malware obtained in this way was able to evade detection by simple YARA rules.
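To make it clearer why simple rules are fragile, here is a minimal Python sketch from the defender's side. It is not real YARA and is not taken from the report: the `StringRule` class, the rule name, and both sample snippets are hypothetical and harmless. It only assumes that a simple rule fires when a fixed set of literal strings is present in the text.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class StringRule:
    """Toy stand-in for a string-based detection rule (not real YARA syntax)."""
    name: str
    required_strings: Tuple[str, ...]

    def matches(self, source: str) -> bool:
        # The rule fires only if every literal string appears in the text.
        return all(s in source for s in self.required_strings)


# Hypothetical, harmless sample texts used purely for illustration.
ORIGINAL = "def collect_report():\n    return build_message('report_v1')\n"
REWRITTEN = "def gather_summary():\n    return make_body('summary_v1')\n"

rule = StringRule("toy_rule", ("collect_report", "build_message"))

print(rule.matches(ORIGINAL))   # True: both literal strings are present
print(rule.matches(REWRITTEN))  # False: same structure, different identifiers
```

Because the toy rule depends on exact identifiers, a rewrite that keeps the structure but changes the names no longer matches, which is roughly the weakness of simple rules that the experiment points to.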

However, this approach is limited by the amount of text the model can process at one time, which makes it difficult to work with large code bases. According to Recorded Future, cybercriminals can get around this restriction by uploading files to LLM tools.

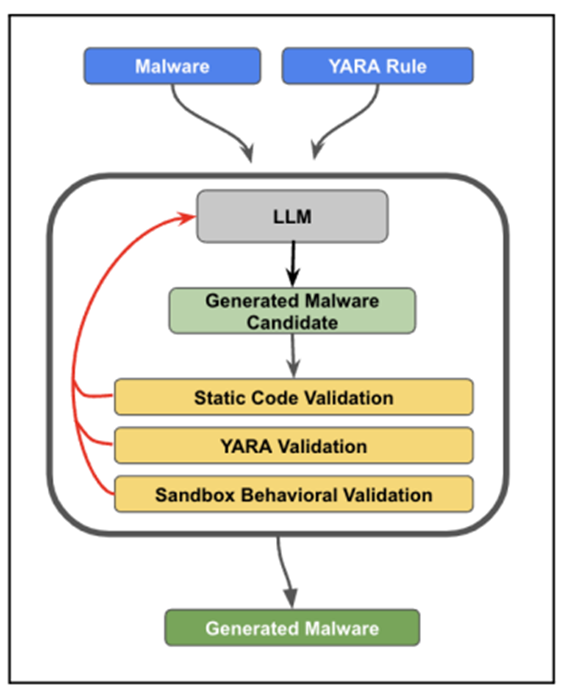

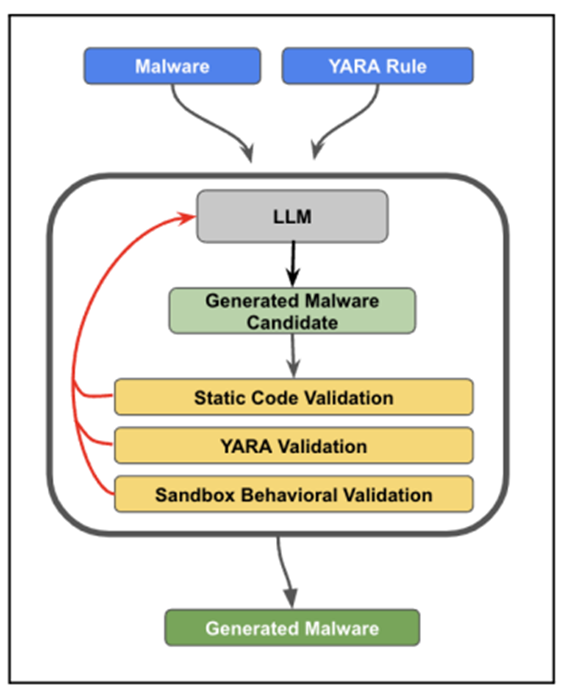

Malware creation chain using an LLM to bypass YARA

The study also predicts that in 2024 the most likely malicious uses of AI will involve deepfakes and influence operations:

- Deepfakes created with open-source tools can be used to impersonate executives, and AI-generated audio and video can strengthen social engineering campaigns.

- The cost of creating content for influence operations will fall significantly, making it easier to clone websites or create fake media. AI can also help malware developers bypass detection and help attackers conduct reconnaissance, such as identifying vulnerable industrial systems or locating sensitive facilities.

In addition to modifying malware, AI can be used to create deepfakes of high-ranking officials and to run influence operations that imitate legitimate websites. Generative AI is also expected to speed up attackers' reconnaissance of critical infrastructure facilities and their collection of information that can be used in subsequent attacks.

Organizations are encouraged to prepare for such threats by treating the voices and likenesses of their executives, their websites and branding, and their public imagery as part of their attack surface. They should also expect more sophisticated use of AI to create malware that evades detection.