Tomcat

Neural networks not only recognize texts, images and speech, but also help diagnose diseases and search for minerals. How does this happen?

Three important facts about artificial intelligence

Machine learning has become a part of our lives. It is not some futuristic technology or flying machine that we have yet to see. We take part in machine learning every day: we are either the object of the training or we supply data for it.

There are no “magic black boxes”. There is no artificial intelligence into which you throw something and it works everything out for you. What matters most is the quality of the data used for training. The architectures and algorithms are all known, and the secret of any cool new application is always in the data.

Machine learning is being developed primarily by the open community. We are for open source - just like Google and other developers of everything that is open and good.

From heuristics to learning

A quick primer: AI is a large field, and machine learning is one part of it. Machine learning has many algorithms, the most interesting of which are neural networks. Deep learning is a specific kind of neural network, and it is what we do.

Why do the old algorithms no longer work, and why is machine learning needed? Yes, doctors recognize cancer better than neural networks - but most often they do it at the fourth stage, when irreversible changes are already taking place in the person. To recognize the disease at an early stage, algorithms are needed. Oil used to pour out of the ground by itself, but that will not happen anymore: natural resources are becoming more and more difficult to extract.

All our previous knowledge is based on heuristic algorithms. For example, if a person has had a certain illness and has a certain family predisposition, then we understand that the neoplasm we have discovered is most likely such and such. We send the person to the scanner and start checking. But if we do not have this knowledge about the person, we do nothing. This is a heuristic.

Most existing software for professional experts in various industries is built on heuristics. Its developers are trying to switch to machine learning, but this is difficult because it requires data.

For example, Pornhub has excellent neural network algorithms, but it also uses heuristics. The site has sections: "Popular" - by number of views, "Best" - by number of likes, and "Hottest". How is the heuristic for that last one defined? It is not calculated from views or popular hashtags. These are the videos that users watch last before leaving the site - the ones that cause the strongest emotions.

When and why did neural networks appear? They were first written about in 1959, but the number of publications began to grow sharply only in 2009. For 50 years nothing happened: there was no way to carry out the calculations, and there were no modern graphics accelerators. Training a neural network to do something takes a lot of computing power and strong hardware. But now 50 publications on the achievements of neural networks appear every day, and there is no turning back.

Most importantly, neural networks are not magic. When people find out that I work in data science, they start pitching me startup ideas: take all the data from somewhere, for example Facebook, throw it into a neural network and predict, roughly speaking, “everything”. But it doesn't work that way. There is always a specific type of data and a clear statement of the problem:

As you can see, there is no "recognition" in the list, because that is only what it is called in human language; mathematically it can be formulated in different ways. That is why complex tasks are always broken down into simpler sub-tasks.

Here is a digitized image of the handwritten number 9, 28 by 28 pixels:

The first layer of the neural network is the input, which "sees" 784 pixels in different shades of gray. The last layer is the output: several categories, and we ask the network to assign the input to one of them. Between them are the hidden layers:

These hidden layers are a function that we do not define with any heuristic. The network learns by itself a sequence of mathematical operations that, with a certain probability, assigns the input pixels to a particular class.
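To make the input, hidden and output layers concrete, here is a minimal sketch of a forward pass in plain Python. The hidden layer size (16), the ten digit classes and the random weights are assumptions chosen for illustration; the network is untrained, so the predicted digit is meaningless. Only the shape of the computation matters: 784 pixel values go in, one probability per class comes out.

```python
import math
import random

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dense(inputs, weights, biases):
    """One fully connected layer: weights[j] holds the weights of neuron j."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Simple non-linearity applied after the hidden layer."""
    return [max(0.0, v) for v in values]

def random_layer(n_in, n_out, rng):
    """Untrained layer with small random weights (illustration only)."""
    weights = [[rng.uniform(-0.05, 0.05) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out

rng = random.Random(0)
N_PIXELS = 28 * 28   # 784 input values
N_HIDDEN = 16        # assumed hidden layer size
N_CLASSES = 10       # digits 0..9

w1, b1 = random_layer(N_PIXELS, N_HIDDEN, rng)
w2, b2 = random_layer(N_HIDDEN, N_CLASSES, rng)

def predict(pixels):
    """Input layer -> hidden layer -> output probabilities."""
    hidden = relu(dense(pixels, w1, b1))
    return softmax(dense(hidden, w2, b2))

image = [rng.random() for _ in range(N_PIXELS)]  # stand-in for a real scan
probabilities = predict(image)
predicted_digit = probabilities.index(max(probabilities))
```

Training would consist of adjusting `w1`, `b1`, `w2` and `b2` on labeled examples until the highest probability lands on the correct class.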

How neural networks work with images

Classification. You can teach a neural network to classify images, for example, to recognize dog breeds:

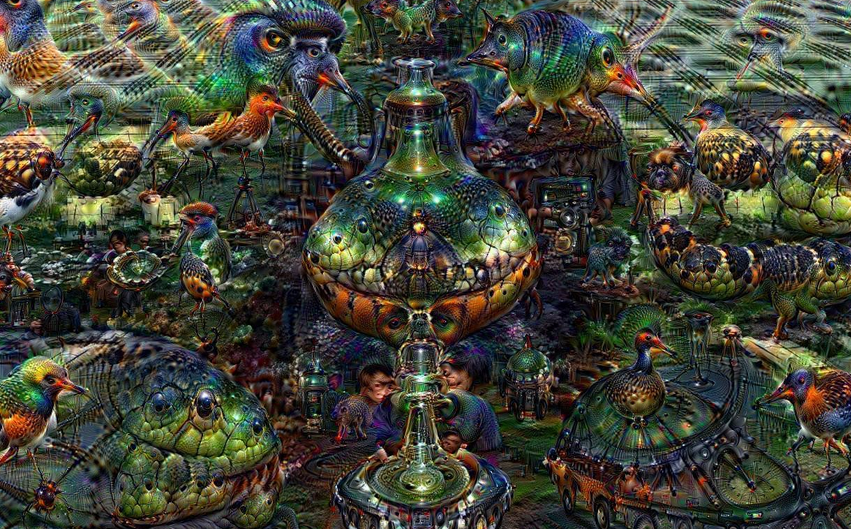

But training it takes millions of pictures - and they must be the same type of data that you will actually use later. If you trained a neural network to look for dogs and then show it cupcakes, it will still look for dogs, and you get something like this:

Detection. This is a different task: you need to find an object of a certain class in the image. For example, we upload a photo of the coast to the neural network and ask it to find people and kites:

A similar algorithm is now in beta testing at the Liza Alert search-and-rescue team. During a search, team members take many pictures with drones and then look through them - and sometimes this is how they find missing people. To cut the time spent reviewing all the images, the algorithm filters out those that contain no information relevant to the search. But no neural network gives one hundred percent accuracy, so the images selected by the algorithm are validated by people.
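The filtering step can be sketched as follows. This is not the search team's actual system: the `Frame` type, the `person_score` field and the threshold of 0.2 are all hypothetical. The idea is only that a detector gives each image a confidence score, clearly empty images are dropped, and everything else goes to a human reviewer, most promising first.

```python
from dataclasses import dataclass

@dataclass
class Frame:
    name: str
    person_score: float  # hypothetical detector confidence in [0, 1]

def select_for_review(frames, threshold=0.2):
    """Keep frames that might contain a person, most confident first.

    The threshold is deliberately low: a false alarm costs a reviewer
    a few seconds, while a missed person is far more costly.
    """
    kept = [f for f in frames if f.person_score >= threshold]
    return sorted(kept, key=lambda f: f.person_score, reverse=True)

# Made-up scores for four drone images.
frames = [
    Frame("a.jpg", 0.03),
    Frame("b.jpg", 0.71),
    Frame("c.jpg", 0.25),
    Frame("d.jpg", 0.10),
]
review_queue = select_for_review(frames)
names = [f.name for f in review_queue]
```

Here two of the four images reach the reviewer, and a person still makes the final decision on each of them.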

Segmentation (single-class and multi-class) is used, for example, in self-driving cars. The neural network assigns objects to classes: here are cars, here is a sidewalk, here is a building, here are people, and every object has clear boundaries:
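In code, multi-class segmentation usually comes down to a decision per pixel. The sketch below assumes a network has already produced one score map per class; the class list and the scores are made up. Each pixel is then given the class with the highest score, which is what produces the clear boundaries.

```python
CLASSES = ["road", "car", "person"]  # assumed class list for the illustration

def segment(score_maps):
    """score_maps[c][y][x] is the score of class c at pixel (y, x).

    Returns a mask holding the index of the winning class for every pixel.
    """
    height, width = len(score_maps[0]), len(score_maps[0][0])
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            scores = [class_map[y][x] for class_map in score_maps]
            row.append(scores.index(max(scores)))
        mask.append(row)
    return mask

# Made-up scores for a 2x3 image, one map per class.
score_maps = [
    [[0.90, 0.20, 0.10], [0.80, 0.70, 0.30]],  # road
    [[0.05, 0.70, 0.20], [0.10, 0.20, 0.10]],  # car
    [[0.05, 0.10, 0.70], [0.10, 0.10, 0.60]],  # person
]
mask = segment(score_maps)
labels = [[CLASSES[i] for i in row] for row in mask]
```

Single-class segmentation is the same procedure with two maps: the object and the background.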

Generation. Generative networks have "emptiness" at the input and some class of objects at the output, and the hidden layers try to learn how to turn that emptiness into something definite. For example, here are two faces - both were generated by a neural network:

The neural network looks at millions of photographs of people on the Internet and, over many iterations, learns that a face should have a nose and eyes, that the head should be round, and so on.

And if we can generate an image, then we can make it move the way a particular person moves - that is, generate a video. An example is a recent viral video in which Obama says Trump is an idiot. Obama never said that: a neural network was simply taught to match Obama's face, and while another person spoke, that person's facial expressions were transferred onto the face of the former US president. Another example is Ctrl Shift Face, which makes great deepfakes of celebrities. So far neural networks do not always work perfectly, but every year they will get better and better, and soon it will be impossible to tell a real person on video from one "painted on" by a network. And no Face ID will protect us from fraud anymore.

How neural networks work with texts

Texts have no meaning for neural networks: to them they are just "vectors" on which various mathematical operations can be performed, for example: "king minus man plus woman equals queen":

But because neural networks learn from texts written by people, oddities arise. For example: "doctor minus man plus woman equals nurse." For the neural network, female doctors do not exist.
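The "king minus man plus woman" arithmetic can be sketched with toy vectors. The three-dimensional vectors below are hand-made for illustration (roughly "royalty", "gender" and "food"); real word vectors have hundreds of dimensions and are learned from text, which is exactly how biases like the doctor and nurse example get in.

```python
import math

# Hand-made toy vectors: [royalty, gender, food]. Not learned from data.
VECTORS = {
    "king":  [0.9,  0.8, 0.0],
    "queen": [0.9, -0.8, 0.0],
    "man":   [0.1,  0.8, 0.0],
    "woman": [0.1, -0.8, 0.0],
    "apple": [0.0,  0.0, 1.0],
}

def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c):
    """Return the word whose vector is closest to a - b + c."""
    target = [x - y + z for x, y, z in
              zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))

result = analogy("king", "man", "woman")
```

With these vectors `result` is "queen". The arithmetic knows nothing about meaning; it only reflects whatever geometry the vectors happen to encode.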

Machine translation. Previously, many people used a translator that was based on heuristics: these words mean this, they can be translated and inflected only in this way and arranged in that order. It could not deviate from these rules, and the result was often nonsense:

Today neural networks have been added to Google Translate, and the texts it translates already read much more naturally.

Generating text. Six months ago, a neural network was created that can be given a topic and a few keywords and will then write an essay by itself. It works great, but it does not check facts or think about the ethics of what it writes:

Essay on the dangers of waste recycling

The authors did not release the code, nor did they show what they used to train the network, explaining that the world is not ready for this technology and that it would be used to do harm.

Recognition and generation of speech. Everything is the same as with image recognition: there is a sound, and the signal needs to be digitized:

This is how Alice and Siri work. When you type text into Google Translate, it translates it, builds a sound wave from the letters and plays it back - that is, it generates speech.

Reinforcement learning

The Arkanoid game is the simplest example of reinforcement learning:

There is an agent - the thing you act on, which can change its behavior - in this case the horizontal "stick" at the bottom. There is an environment that is described by different modules - this is everything around the "stick". There is a reward: when the network drops the ball, we say that it loses its reward.

When the neural network scores points, we tell it that it is doing well. And then the network starts inventing actions that will lead it to victory and maximize its reward. At first it launches the ball and just stands there. We say: "Bad." The network: "Okay, I'll launch it and move one pixel." - "Bad." - "I'll launch it, move two pixels, left, right, twitch at random." Training a neural network this way is very long and expensive.
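The agent, environment and reward loop can be sketched with tabular Q-learning on a drastically simplified game, which is a stand-in for Arkanoid rather than the real thing. Everything here is an assumption made for illustration: a field 5 columns wide, a ball that falls straight down in 4 steps, a paddle that moves one column per step, and a reward of +1 for a catch and -1 for a miss. During training the agent simply acts at random; afterwards it picks the action with the highest learned value.

```python
import random

WIDTH, HEIGHT = 5, 4      # columns, and steps until the ball reaches the bottom
ACTIONS = (-1, 0, 1)      # move the paddle left, stay, move right

def step(paddle, ball, steps_left, action):
    """Environment: returns (next_paddle, next_steps_left, reward, done)."""
    paddle = min(WIDTH - 1, max(0, paddle + action))
    steps_left -= 1
    if steps_left == 0:
        return paddle, steps_left, (1.0 if paddle == ball else -1.0), True
    return paddle, steps_left, 0.0, False

def train(episodes=5000, alpha=0.5, seed=0):
    """Q-learning with purely random exploration (an off-policy sketch)."""
    rng = random.Random(seed)
    q = {}  # (paddle, ball, steps_left, action) -> estimated future reward
    for _ in range(episodes):
        paddle, ball = rng.randrange(WIDTH), rng.randrange(WIDTH)
        steps_left, done = HEIGHT, False
        while not done:
            action = rng.choice(ACTIONS)  # "twitch at random"
            nxt_paddle, nxt_steps, reward, done = step(
                paddle, ball, steps_left, action)
            best_next = 0.0 if done else max(
                q.get((nxt_paddle, ball, nxt_steps, a), 0.0) for a in ACTIONS)
            key = (paddle, ball, steps_left, action)
            old = q.get(key, 0.0)
            # Move the estimate toward reward plus the best follow-up value.
            q[key] = old + alpha * (reward + best_next - old)
            paddle, steps_left = nxt_paddle, nxt_steps
    return q

def play_greedy(q, paddle, ball):
    """Play one game using only the learned values; returns the final reward."""
    steps_left, done, reward = HEIGHT, False, 0.0
    while not done:
        action = max(ACTIONS,
                     key=lambda a: q.get((paddle, ball, steps_left, a), 0.0))
        paddle, steps_left, reward, done = step(paddle, ball, steps_left, action)
    return reward

q_table = train()
catches = sum(play_greedy(q_table, p, b) == 1.0
              for p in range(WIDTH) for b in range(WIDTH))
```

Even this toy needs thousands of games to learn what a person sees at a glance, which hints at why training on a real game, let alone a real robot, is so slow and expensive.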

Another example of reinforcement learning is Go. In May 2014, people said it would take a long time for a computer to learn to play Go. But the very next year a neural network beat the European champion. In March 2016, AlphaGo defeated a world champion holding the highest dan, and the next version beat the previous one by a crushing 100:0, even though it made completely unpredictable moves. It had no restrictions other than playing by the rules:

Why teach a computer to play games for big money and invest in eSports? Because teaching robots to move and interact in a real environment is even more expensive. If your algorithm makes a mistake and crashes a multi-million dollar drone, it is very painful. Whereas training on people, but inside Dota, is the obvious choice.

Open source

How and by whom are machine learning applications implemented? Bold statements on the Internet that some company wrote another application that “recognized everything” are not true. There are market leaders who develop tools and make them publicly available so that all people can write code, propose changes, move the industry. There are the "good guys" who also share some of the code. But there are also "bad guys" whom it is better not to get involved with, because they do not develop their own algorithms, but use what the "good guys" wrote, make their own "Frankensteins" out of their developments and try to sell.

Examples of data science applications in the oil industry

Search for new deposits. To find out whether there is oil in the ground, specialists set off a series of explosions and record the signal so that they can then see how the vibrations travel through the ground. But the surface wave distorts the overall picture and drowns out the signal from the depths, so the result has to be "cleaned up". Seismic specialists do this in special programs, and they cannot apply the same filter or set of filters every time: they have to select a new combination of filters each time. Using their work as examples, we can teach a neural network to do the same:

True, it turns out that the network removes not only the surface noise but also the useful signal. So we add a new condition: we ask it to clean only the part of the signal that the seismic specialists work with - this is called a "neural network with attention".

Description of the core column by lithology type. This is a segmentation task. There are photographs of the core - rock pulled out of the well. A specialist has to work out by hand which layers are in it. A person spends weeks or months on this, while a trained neural network takes up to an hour. The more we train it, the better it works:

"Better Than Human"

Experts ask how the work of a neural network can be compared with human experience: "Yes, but Ivan Petrovich has been with us since 1964, he has smelled this core!" Of course, but he did the same thing as the network: he took a core, took a textbook, watched how other people did it, and tried to derive a pattern. Only the neural network works much faster and goes through Ivan Petrovich's lifetime of experience 500 times a day. Still, people do not yet trust the technology, so we have to break every task into small stages so that an expert can validate each one and believe that the neural network works.

All claims that some neural network works "better than a human" are most often based on nothing, because there will always be someone "dumber" than the neural network. You say to me: "Recognize the oil." And I say: "Well, somewhere around here." Conclusion: "Aha, that didn't work, so our system works better than you." In fact, to assess how well a neural network performs, it has to be compared with a whole group of experts, the leading people in the industry.

Accuracy claims are equally questionable. If you take ten people, one of whom has lung cancer, and say that they are all healthy, you will have predicted the situation with 90% accuracy. You were wrong about one in ten; everything is fair, nobody deceived anyone. But the result gets us nowhere. Any news about revolutionary developments is not true if there is no open source code or a description of how they were made.
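The ten-patient example is easy to check by hand or in a few lines of code. The sketch below also computes recall (the share of sick patients that were actually found), a standard metric added here to show what the accuracy figure hides.

```python
# 1 = has lung cancer, 0 = healthy: ten people, one of them sick.
labels = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]
# The "model" simply declares everyone healthy.
predictions = [0] * len(labels)

correct = sum(p == y for p, y in zip(predictions, labels))
accuracy = correct / len(labels)

sick_total = sum(labels)
sick_found = sum(1 for p, y in zip(predictions, labels) if p == 1 and y == 1)
recall = sick_found / sick_total

print(f"accuracy = {accuracy:.0%}, recall = {recall:.0%}")
```

Accuracy comes out at 90% while recall is 0%: the one patient who mattered was missed.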

The data must be of good quality. You never get something useful by throwing uncleaned data, collected nobody knows how, into a neural network. What does bad data mean? To recognize an oncological disease, you need to take many high-resolution CT scans and assemble a 3D cube of the organs from them. Then, in one of the slices, the doctor will be able to find a picture of suspected cancer - a dense mass that should not be there. We asked specialists to mark up many such images for us in order to teach the neural network to isolate cancer. The problem is that one doctor thinks the cancer is in one place, another doctor thinks there are two cancers, and a third doctor thinks something else again. It is impossible to build a neural network from this, because these are all different tissues, and if you train a network on such data, it will see cancer everywhere.
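One way to put a number on such disagreement is to compare the marked regions pairwise before training on them. The sketch below uses intersection over union (IoU) on sets of pixel coordinates. The regions and the 0.5 threshold are invented for illustration and are not taken from the project described above.

```python
from itertools import combinations

def iou(region_a, region_b):
    """Intersection over union of two sets of marked pixels."""
    union = region_a | region_b
    return len(region_a & region_b) / len(union) if union else 1.0

def labels_agree(regions, min_iou=0.5):
    """True only if every pair of annotators overlaps by at least min_iou."""
    return all(iou(a, b) >= min_iou for a, b in combinations(regions, 2))

# Made-up markings of the same slice by three doctors, as (row, column) pixels.
doctor_a = {(10, 10), (10, 11), (11, 10), (11, 11)}
doctor_b = {(11, 11), (11, 12), (12, 11), (12, 12)}
doctor_c = {(30, 5), (30, 6)}

overlap_ab = iou(doctor_a, doctor_b)  # 1 shared pixel out of 7
usable = labels_agree([doctor_a, doctor_b, doctor_c])
```

Here `usable` is `False`: the first two doctors barely overlap and the third marked a different place entirely, so this slice would be sent back for review rather than used for training.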

Neural network problems

With the dataset. One day, a Chinese traffic violation recognition system fined a woman for jaywalking who was in fact just an advertisement on a bus driving through a pedestrian crossing. This means that the wrong dataset was used to train the neural network. Objects in context were needed for the network to learn to distinguish real women from advertising images.

Another example: there was a lung cancer detection competition. One community released a dataset of a thousand images and labeled the cancer on them according to the opinions of three different experts (but only where their opinions coincided). It was possible to learn from such a dataset. Another company, however, decided to get some publicity and announced that it had used several hundred thousand X-rays in its work. It turned out that only 20% of them were of sick patients. Yet these are the important ones for us, because if the neural network learns without them, it will not recognize the disease. Moreover, this 20% included several categories of diseases with different subtypes. And since these were two-dimensional images rather than 3D pictures, it turned out that nothing could be done with such a dataset.

It is important to include real information in the dataset. Otherwise, people pasted onto buses will have to be fined.

With implementation. Neural networks do not know what to offer when there is no information, or when to stop. For example, if you have just created a new mail account and the neural network does not yet know anything about you, then at first the mail service will show you ads that have nothing to do with you personally. And if you searched the Internet for a sofa and bought one, you will keep seeing sofa ads for a long time, because the neural network does not know that you have already made the purchase. The chatbot that fell in love with Hitler simply watched what people were doing and tried to imitate them. Keep in mind: you produce content every day, and it can be used against you.

With reality. In Florence there is an artist who puts funny stickers on road signs to brighten up people's everyday life. But such signs will most likely not be in the training set for self-driving cars. And if you release a car into such a world, it will simply run over a few pedestrians and stop:

So, for neural networks to work well, you need not to make big announcements about them but to learn mathematics and use what is in the public domain.