The attack can cost victims more than $46,000 per day.

Sysdig has discovered a new attack scheme in which stolen cloud credentials are used to access cloud-hosted large language model (LLM) services, with the aim of selling that access to other cybercriminals. The observed attack targeted Anthropic's Claude (v2/v3) models and has been named LLMjacking.

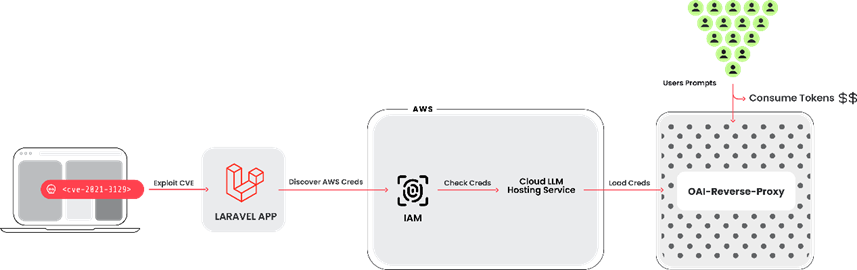

To carry out the attack, the attacker compromised a system running a vulnerable version of the Laravel framework (remote code execution vulnerability CVE-2021-3129, CVSS score 9.8) and then obtained Amazon Web Services (AWS) credentials to gain access to LLM services.

Among the tools used is an open-source Python script that checks whether keys are valid for services from Anthropic, AWS Bedrock, Google Cloud Vertex AI, Mistral, and OpenAI.

The attacker used the API to verify the stolen credentials without attracting attention. For example, sending a request with the "max_tokens_to_sample" parameter set to -1 does not produce an access error but instead returns a "ValidationException", which confirms that the victim's account has access to the service. Sysdig notes that no legitimate LLM requests were actually run during the check; the goal was only to establish what permissions the accounts had.

In addition, the attacker used the oai-reverse-proxy tool, a reverse proxy for LLM APIs, which makes it possible to sell access to compromised accounts without exposing the underlying credentials.

LLMjacking Attack Chain

Sysdig explained that this is a departure from traditional attacks focused on prompt injection and model "poisoning": it lets attackers monetize LLM access while the owner of the cloud account unknowingly pays the bill. According to Sysdig, such an attack can run up LLM service costs of more than $46,000 per day for the victim.

Using LLMs can be expensive, depending on the model and the number of tokens fed into it. By maxing out quota limits, attackers can also prevent a compromised organization from using the models itself, disrupting business operations.
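To illustrate how token-based billing scales with volume, here is a minimal Python sketch. The function name `estimate_daily_cost` and every number in the example call are hypothetical placeholders, not Sysdig's figures and not real prices; actual rates depend on the provider and the model.

```python
def estimate_daily_cost(requests_per_day, input_tokens, output_tokens,
                        price_in_per_1k, price_out_per_1k):
    """Estimate daily spend in dollars for token-billed LLM usage.

    Prices are expressed per 1,000 tokens. All inputs are assumptions
    supplied by the caller.
    """
    cost_per_request = (
        (input_tokens / 1000) * price_in_per_1k
        + (output_tokens / 1000) * price_out_per_1k
    )
    return requests_per_day * cost_per_request


if __name__ == "__main__":
    # Placeholder values chosen only to show the arithmetic.
    cost = estimate_daily_cost(
        requests_per_day=1000,
        input_tokens=2000,
        output_tokens=500,
        price_in_per_1k=0.01,
        price_out_per_1k=0.03,
    )
    print(f"Estimated daily cost: ${cost:.2f}")
```

Because the cost grows linearly with the number of requests and with the tokens per request, an attacker who pushes an account to its quota limit multiplies the bill accordingly.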

Organizations are advised to enable detailed logging, monitor cloud logs for suspicious or unauthorized activity, and manage vulnerabilities effectively to prevent initial access.
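As a rough illustration of this kind of log monitoring, the following Python sketch scans CloudTrail-style records for Bedrock `InvokeModel` calls that failed with `ValidationException`, the pattern produced by the credential check described above. It assumes the records are already parsed into dictionaries with the usual CloudTrail fields (`eventSource`, `eventName`, `errorCode`, `userIdentity`, `sourceIPAddress`); the function name `find_bedrock_probes` and the sample records are made up for the example, so check the field names against your own logs.

```python
from collections import Counter


def find_bedrock_probes(records):
    """Count failed InvokeModel calls (ValidationException) per (caller ARN, source IP)."""
    hits = Counter()
    for rec in records:
        if (
            rec.get("eventSource") == "bedrock.amazonaws.com"
            and rec.get("eventName") == "InvokeModel"
            and rec.get("errorCode") == "ValidationException"
        ):
            arn = rec.get("userIdentity", {}).get("arn", "unknown")
            ip = rec.get("sourceIPAddress", "unknown")
            hits[(arn, ip)] += 1
    return hits


# Made-up sample records using documentation-reserved IPs and a fake account ID.
SAMPLE_RECORDS = [
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "errorCode": "ValidationException", "sourceIPAddress": "203.0.113.7",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/app"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "errorCode": "ValidationException", "sourceIPAddress": "203.0.113.7",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/app"}},
    {"eventSource": "bedrock.amazonaws.com", "eventName": "InvokeModel",
     "sourceIPAddress": "198.51.100.4",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/analyst"}},
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "errorCode": "AccessDenied", "sourceIPAddress": "203.0.113.7",
     "userIdentity": {"arn": "arn:aws:iam::123456789012:user/app"}},
]

if __name__ == "__main__":
    for (arn, ip), count in find_bedrock_probes(SAMPLE_RECORDS).items():
        print(f"{count} suspicious InvokeModel failures from {arn} at {ip}")
```

A `ValidationException` can also come from an ordinary application bug, so a match is better treated as a signal to investigate, for instance when it comes from an identity that has never used the service before, than as proof of compromise.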

Sysdig discovered a new attack scheme in which stolen credentials for cloud services are used to access the services of cloud LLM models in order to sell access to other cybercriminals. The detected attack was directed at the Claude (v2/v3) model from Anthropic and was called LLMjacking.

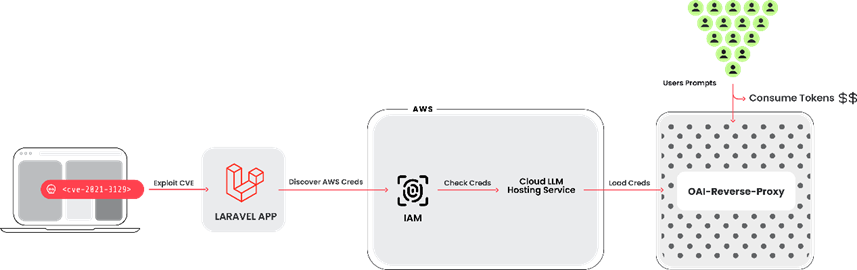

To perform the attack, the attacker hacked a system with a vulnerable version of the Laravel framework (RCE vulnerability CVE-2021-3129 with CVSS rating: 9.8), and then took possession of Amazon Web Services (AWS) credentials to gain access to LLM services.

Among the tools used is an open-source Python script that verifies keys for various services from Anthropic, AWS Bedrock, Google Cloud Vertex AI, Mistral, and OpenAI.

The attacker used the API to verify their credentials without attracting attention. For example, sending a request with the "max_tokens_to_sample" parameter set to -1 does not cause an access error, but returns a "ValidationException" exception, which confirms that the victim's account has access to the service. It is noted that no requests to LLM were executed during the check. It was enough to set what rights the accounts have.

In addition, the cybercriminal used the oai-reverse-proxy tool, which acts as a reverse proxy for the LLM API model, which allows you to sell access to compromised accounts without disclosing your original credentials.

LLMjacking Attack Chain

Sysdig explained that this deviation from traditional attacks aimed at injecting commands and "poisoning" models allows hackers to monetize access to LLM, while the owner of a cloud account pays bills without knowing it. According to Sysdig, such an attack can lead to costs for LLM services of more than $46,000 per day for the victim.

Using LLM can be expensive, depending on the model and the number of tokens that are fed into it. By maximizing quota limits, attackers can also prevent a compromised organization from using models, disrupting business operations.

Organizations are encouraged to enable detailed logging and monitor cloud logs for suspicious or unauthorized activity, and ensure that vulnerabilities are effectively managed to prevent initial access.