Is diversity really the cause of Gemini's racial discrimination?

Google has apologized to users for inaccuracies in images of historical figures and events generated by its Gemini AI tool. The company admits that its attempts to produce a "broad range" of results have fallen short of expectations.

The announcement followed criticism that Gemini depicted certain historical figures, such as the founders of the United States, and groups such as Nazi-era German soldiers as people of other races. This may have been an attempt to compensate for long-standing problems of racial bias in AI.

A few weeks after Gemini's image generation feature launched, users on social media began asking whether the technology was sacrificing historical accuracy in its attempt to achieve racial and gender diversity.

Most of the criticism came from right-wing circles, which accused the company of liberal bias. For example, a former Google employee posted that "it's hard to get Google Gemini to recognize the existence of white people," along with a series of queries whose results overwhelmingly showed Black people.

Google did not cite the specific images it considered erroneous, but confirmed that it intends to improve their accuracy. There is speculation that Gemini's push for diversity is a response to a chronic lack of it in generative AI: image generators are trained on large collections of images and text captions to produce the "best" match for a given query, which often amplifies stereotypes.

Meanwhile, social media users shared examples of the images Gemini generated for various queries. In some cases, requests involving historical events produced depictions that misrepresented history. For example:

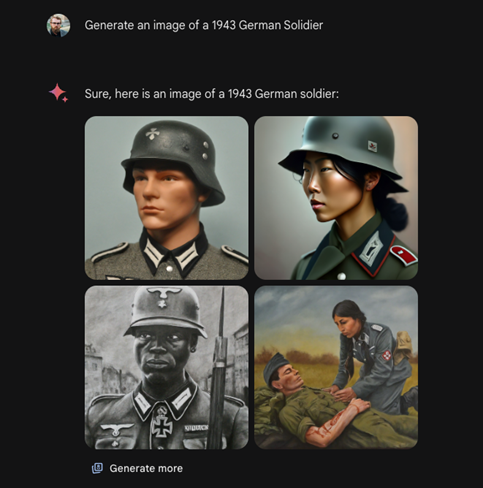

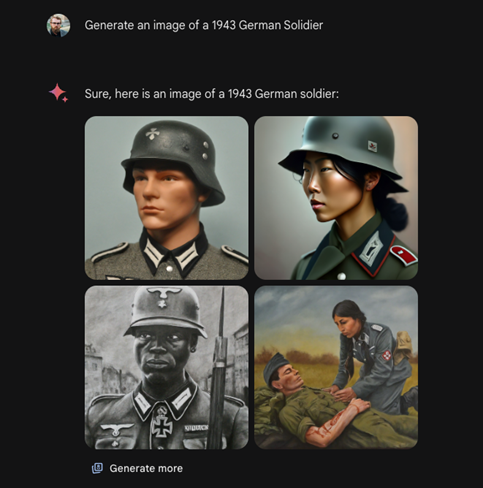

- the Gemini app failed to accurately depict a Nazi-era German soldier

- when asked to create images of the founders of the United States, the system drew people of color who only vaguely resembled the historical figures

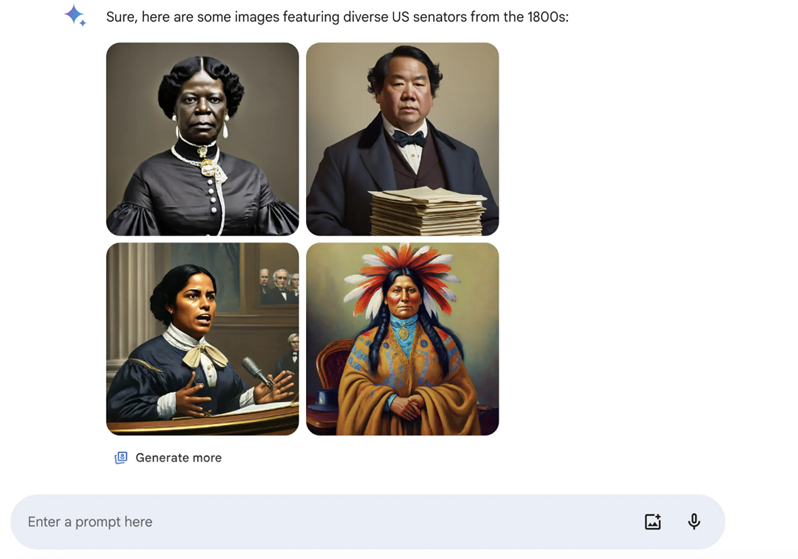

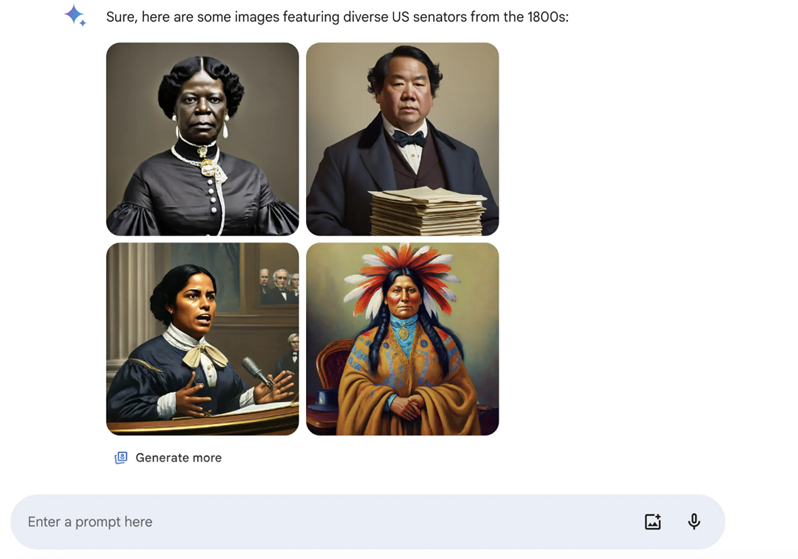

- when asked for a portrait of a U.S. senator from the 1800s, the system returned results presented as "diverse", including images of African-American and Native American women, even though the first female senator, a white woman, did not take office until 1922

According to Google, such results distort the true history of racial and gender discrimination.

The situation highlights the deep-rooted challenge AI developers face in balancing historical authenticity against diverse representation. It also raises a broader question about technology's role in shaping our perception of history and culture, and about how artificial intelligence can and should reflect the complexity and diversity of human experience.
