Father


A feature of the Grok chatbot has raised concerns about the accuracy of the information it produces.

The Grok chatbot found itself at the center of a scandal on the X social network after it generated news from humorous posts and presented it as reliable information.

One user discovered an AI-written article that turned a series of joke tweets into an ostensibly real news story, and pointed out that the trending news section on X is now run by Grok.

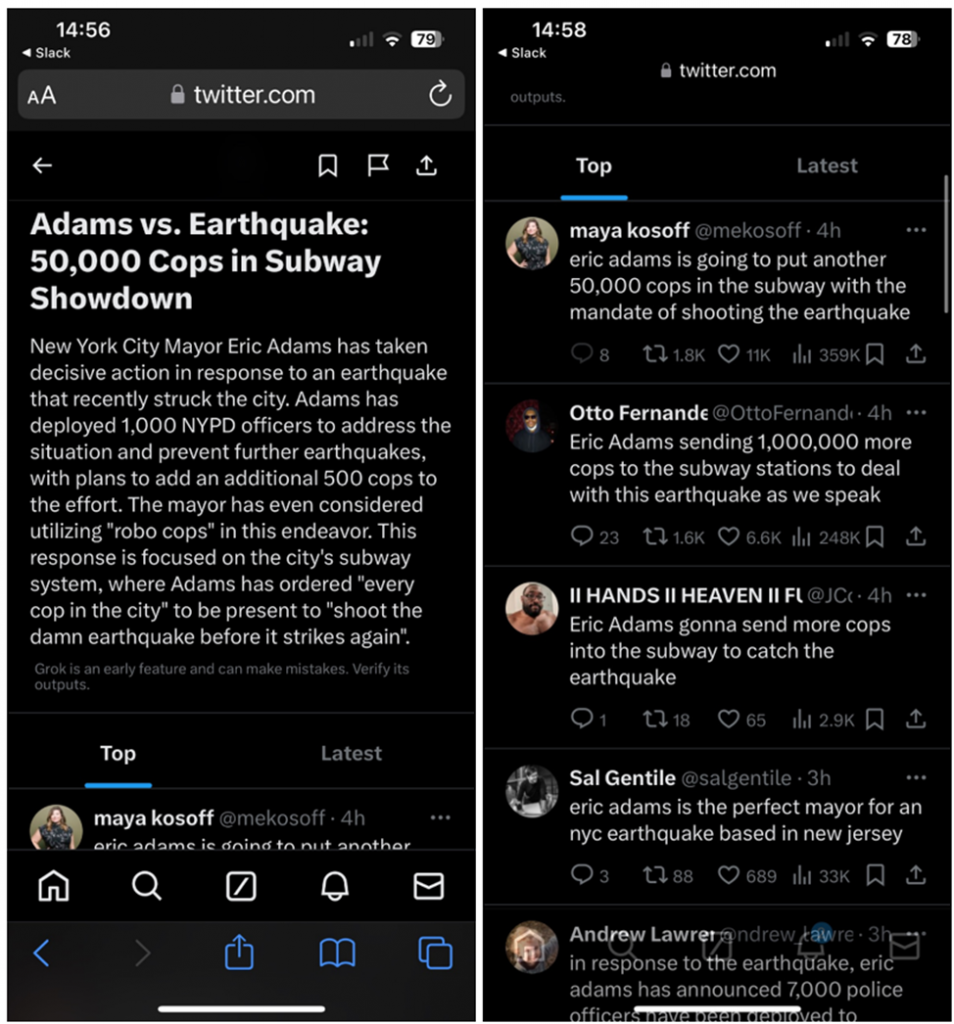

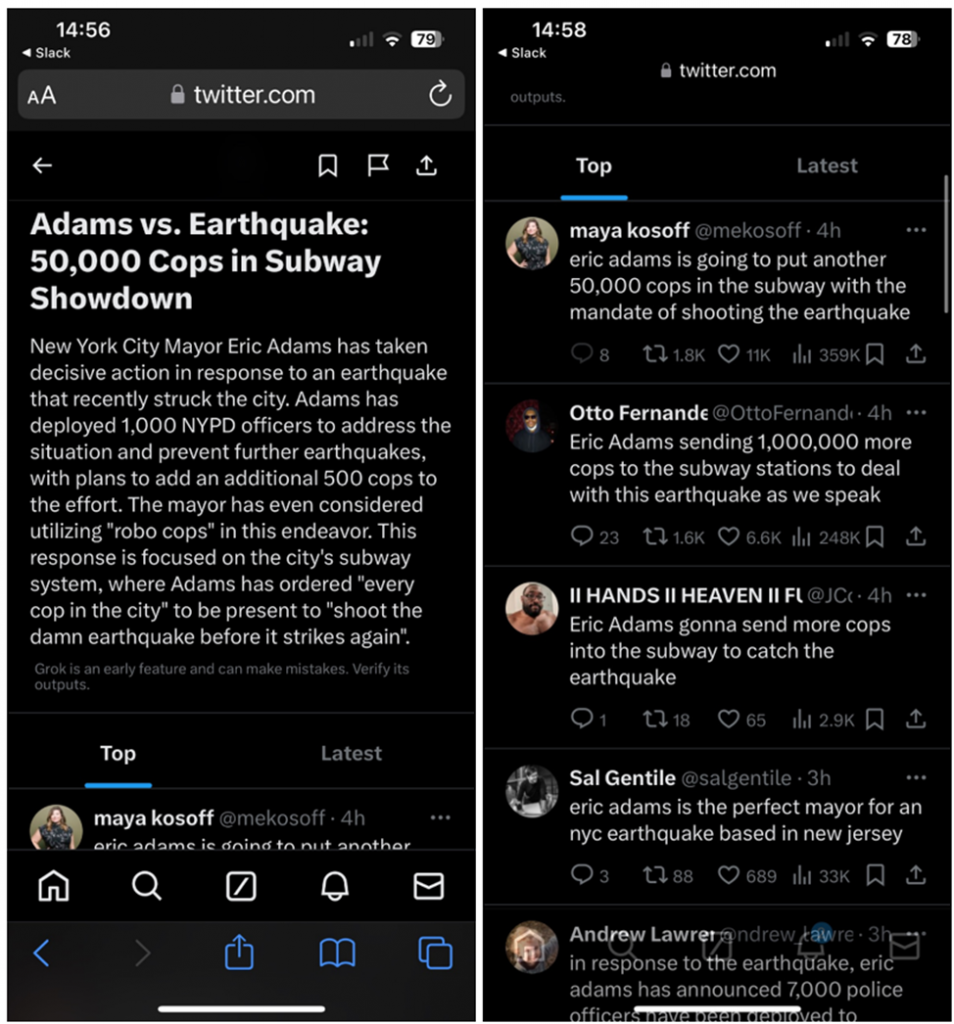

The controversial article was headlined "Adams vs. Earthquake: 50,000 Cops on the Subway," a reference to the 4.8-magnitude earthquake that struck New York City on April 5, 2024. Although the earthquake is classified as light, it was one of the strongest to hit the city in the last decade. The generated article claims that New York City Mayor Eric Adams took drastic action by sending 1,000 police officers to deal with the aftermath of the earthquake and prevent new ones, which is a complete fabrication.

Generated news (left) and the tweets that Grok used to compose it (right)

The article culminated in a statement that, in response to the disaster, all police officers had been sent into the city's subway to "shoot at the earthquake before it strikes again." The text ends with a disclaimer stating that Grok is at an early stage of development and may make mistakes, and recommending that readers verify the information they receive.

This is not the only such incident: Grok also generated a false headline about an alleged Iranian attack on Israel. These cases show how easily Elon Musk's chatbot can produce fictional news stories, which has raised concerns about the spread of disinformation through AI.

The dissemination of false information by AI technologies, especially in the early stages of their development, is not uncommon. Chatbots and other AI-based software are often prone to "hallucinations," generating information that does not exist.
